In practice, it's unlikely that one hidden unit has an input of precisely 0, so it doesn't matter much whether you take 0 or 1 for gradient in that situation. E.g. Theano considers that the gradient at 0 is 0. Tensorflow's playground does the same:

public static RELU: ActivationFunction = {

output: x => Math.max(0, x),

der: x => x <= 0 ? 0 : 1

};

(1) did notice the theoretical issue of non-differentiability:

This paper

shows that rectifying neurons are an

even better model of biological neurons and

yield equal or better performance than hyperbolic

tangent networks in spite of the

hard non-linearity and non-differentiability

at zero, creating sparse representations with

true zeros, which seem remarkably suitable

for naturally sparse data.

but it works anyway.

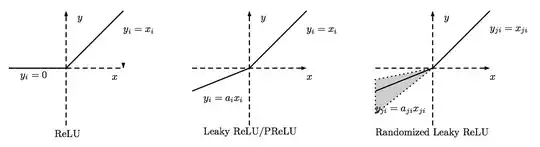

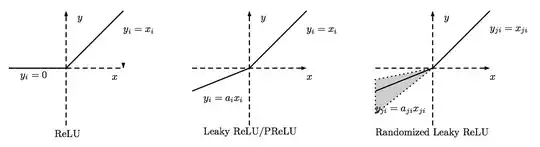

As a side note, if you use ReLU, you should watch for dead units in the network (= units that never activate). If you see to many dead units as you train your network, you might want to consider switching to leaky ReLU.