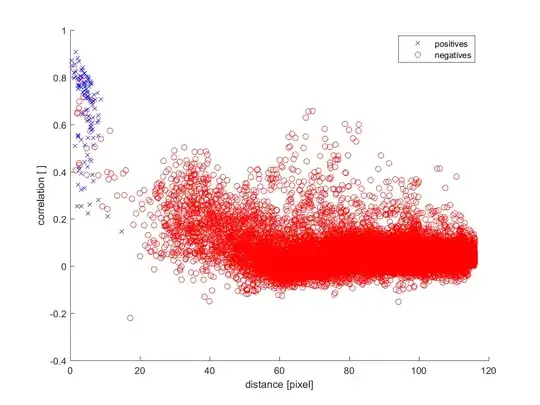

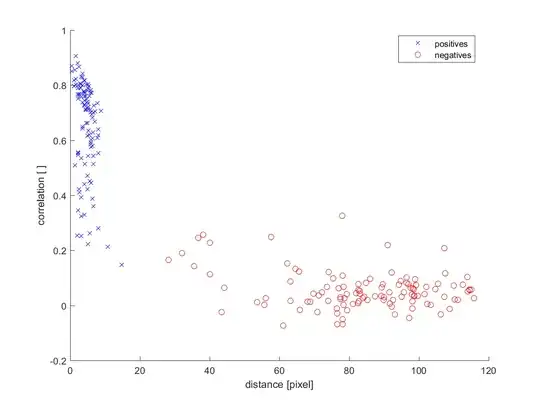

I have a highly imbalanced data set (ratio 1:150) with four predictors, where two are correlated. The data can be found here, you can also see the two figures below.

I would like to use logistic regression, and then validate it, in order to

- compare it with a different model,

- check which predictors can be omitted, and

- check if the performance can be improved by combining features (feat1, feat1*feat2, etc.).

I also wanted to do undersampling to reduce the computational effort (I want to use the classifier in live application).

My questions:

- Which measure should I use to check performance? There are too many (F-measure, Cohen's Kappa, Powers Informedness, AUC for ROC). I thought first about the AUC, because then I don't have to select a threshold like for the other measures. But there has been literature saying AUC is not a good measure. Is it better to use the sum of the error: (predicted label- classifier continuous output)^2? Then I also don't have to select a threshold.

- How would you reduce the computational effort? I thought about focused undersampling, instead of random undersampling, and keep class overlapping points. But I'm guessing this might lead to bias.

Figure 1. Two features plotted against each other for using full data:

Figure 2. Random undersampled data, leading to complete separation: