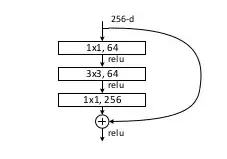

I am wondering how a 1x1 convolution can be used to change the dimensionality of feature maps in a residual learning network.

Here the top 1x1 convolution changes the number of feature maps from 256 to 64. How is this possible?

In a previous post explaining 1x1 convolution in neural nets, it is mentioned that if a layer with $n_1$ feature maps is subjected to a 1x1 convolution with $n_2$ filters, then the number of feature maps changes to $n_2$. Shouldn't it be $n_1 n_2$, since each of the $n_2$ filters produces one output corresponding to each of the $n_1$ inputs?

Also, how does one generate 256 feature maps from 64, as is done in the bottom layer?
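
To make the shapes in question concrete, here is a minimal NumPy sketch of the operation as the linked post describes it. It rests on an assumption I am not sure matches the paper: each 1x1 filter holds one weight per input feature map and sums over all of them, so it produces a single output map rather than $n_1$ separate ones. The function name `conv1x1`, the 8x8 spatial size, and the random weights are made up for illustration.

```python
import numpy as np

def conv1x1(x, w):
    """Apply a 1x1 convolution (no bias, stride 1).

    x: input feature maps, shape (n1, H, W)
    w: filter bank, shape (n2, n1); one weight per input map, per filter
    returns: output feature maps, shape (n2, H, W)
    """
    # For every pixel, each filter takes a weighted sum over the n1 input maps.
    return np.einsum("oc,chw->ohw", w, x)

rng = np.random.default_rng(0)
x = rng.standard_normal((256, 8, 8))        # 256 input feature maps (assumed 8x8)

w_reduce = rng.standard_normal((64, 256))   # 64 filters, each spanning 256 maps
w_expand = rng.standard_normal((256, 64))   # 256 filters, each spanning 64 maps

reduced = conv1x1(x, w_reduce)              # 256 maps -> 64 maps
expanded = conv1x1(reduced, w_expand)       # 64 maps -> 256 maps

print(reduced.shape)
print(expanded.shape)
```

Under that assumption the output count depends only on the number of filters, which would give $n_2$ maps rather than $n_1 n_2$, and the 64 to 256 step would be the same operation with a larger filter bank.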