From what I understand, after applying dropout you are supposed to rescale the layer's activations by an amount proportional to how much was dropped, essentially compensating for the activation that was lost (poorly stated, but I hope the idea is clear). My question is whether this kind of rescaling is necessary or recommended after applying DropConnect to a weight matrix.

1 Answer

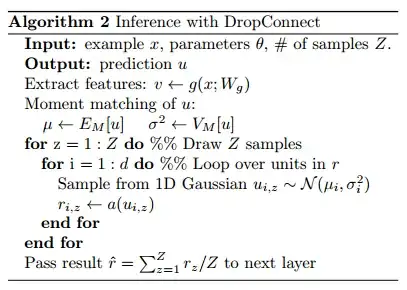

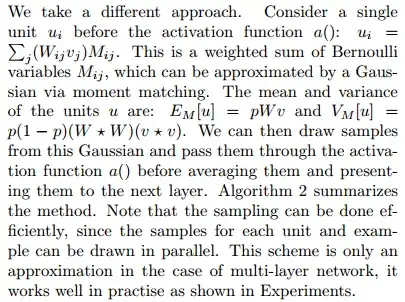

Unlike dropout, inference in DropConnect is a kind of Monte Carlo method, according to Sec. 3.2 of the paper.

It approximates the pre-activation values with Gaussian distributions, draws a number of samples from those Gaussians, and takes the average of the activation values of the samples.
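To make that procedure concrete, here is a minimal NumPy sketch of how I read it, not the paper's reference implementation. It assumes each weight is kept independently with probability `keep_prob`, so the pre-activation of each unit has mean `keep_prob * (W @ v)` and variance `keep_prob * (1 - keep_prob) * ((W**2) @ (v**2))`. The function name, the parameter names, the default sample count, and the ReLU default are all my own choices for illustration.

```python
import numpy as np

def dropconnect_inference(W, v, keep_prob=0.5, num_samples=1000,
                          activation=None, rng=None):
    """Approximate DropConnect inference for one layer (illustrative sketch).

    W: weight matrix of shape (d_out, d_in)
    v: input vector of shape (d_in,)
    keep_prob: assumed probability that each individual weight is kept
    """
    if activation is None:
        # ReLU is an assumption here; any elementwise activation works.
        activation = lambda u: np.maximum(u, 0.0)
    rng = np.random.default_rng() if rng is None else rng

    # Moment-match the masked pre-activation (M * W) @ v, M ~ Bernoulli(keep_prob).
    mean = keep_prob * (W @ v)
    var = keep_prob * (1.0 - keep_prob) * ((W ** 2) @ (v ** 2))
    std = np.sqrt(var)

    # Draw samples from the per-unit Gaussians, apply the activation,
    # then average over the samples.
    samples = rng.normal(loc=mean, scale=std, size=(num_samples, mean.shape[0]))
    return activation(samples).mean(axis=0)

W = np.array([[1.0, 2.0], [-1.0, 0.5]])
v = np.array([1.0, 1.0])
out = dropconnect_inference(W, v, keep_prob=0.5, rng=np.random.default_rng(0))
```

Under these assumptions there is no single rescaling factor applied afterwards as in dropout. The closest analogue is the `keep_prob` factor in the Gaussian mean, and the averaging happens after the nonlinearity rather than before it.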

dontloo