We have a practical real-life problem in an open source Linux related project. And I would like to hear an expert review/opinion about the way we are trying to solve this problem. It's been more than 15 years since I graduated and my mathematics skills are a little bit rusty.

There are some electronic devices, which may work at different configurable clock frequencies. A valid clock frequency must be a multiple of 24 MHz. Different units of the same type may have slightly different reliability (one unit may be reliable at 720 MHz, but another may fail already at 672 MHz). There is also a tool, which can test whether the device is working reliable or not. The currently collected statistics from 23 different units is presented in a table [1]. Obtaining more test samples is difficult. Even these 23 samples took a lot of time to get collected. Here is the list of the clock frequencies, at which the devices have been confirmed to fail the reliability test:

4 units : PASS <= 648 MHz, FAIL >= 672 MHz (clock frequency bin #1)

10 units : PASS <= 672 MHz, FAIL >= 696 MHz (clock frequency bin #2)

4 units : PASS <= 696 MHz, FAIL >= 720 MHz (clock frequency bin #3)

4 units : PASS <= 720 MHz, FAIL >= 744 MHz (clock frequency bin #4)

1 unit : PASS <= 744 MHz, FAIL >= 768 MHz (clock frequency bin #5)

The transition points from the PASS to the FAIL state can be assumed to be somewhere in the middle of each 24 MHz interval and the whole set of clock frequency samples may look like this:

x = [660, 660, 660, 660, 684, 684, 684, 684, 684, 684, 684, 684, 684, 684, 708, 708, 708, 708, 732, 732, 732, 732, 756]

Overall the distribution somewhat resembles the normal distribution by just looking at the histogram. But it's best to verify this. I tried a few normality tests, such as Shapiro-Wilk, Kolmogorov-Smirnov and Anderson-Darling. However they need more samples than are available. In addition, the normality tests don't like ties, and we are essentially dealing with a binned data (using the 24MHz intervals).

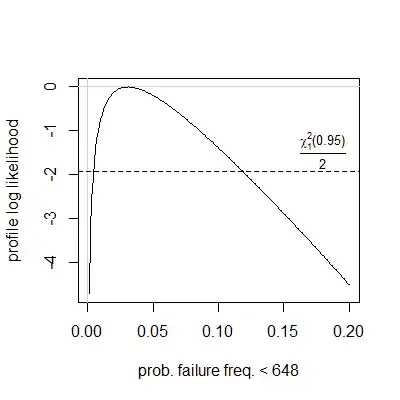

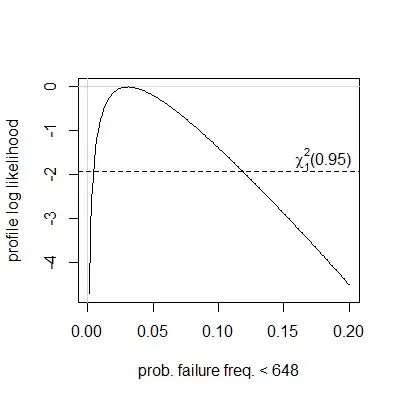

So instead of doing the standard normality tests mentioned above, I tried to approximate the experimental data as a normal distribution and then do an exact multinomial test using the Xnomial library for R. It checks if the theoretical probabilities for each of the available frequency bins agrees with the experimental data. More specifically, we calculate the sample mean ($695.478261$) and sample variance ($26.949228^2$) of this data set. Then using the CDF of the normal distribution with these mean and variance parameters, we get theoretical probabilities for each bin. For example $\Phi(696) - \Phi(672)$ should give us the probability of having a device that passes the reliability test at 672 MHz but fails it at 696 MHz. Because each device belongs to some frequency bin and each frequency bin has its own theoretical probability, this whole setup is nothing else but a perfect example of a multinomial distribution. And we can do a goodness-of-fit test by comparing the theoretical probabilities with the actual numbers of observed samples. If the p-value looks ok, then our null hypothesis about having a normal distribution is not rejected yet. Does this approach look reasonable?

And at the end of the day we want to pick a single clock frequency, which is reasonably reliable on all devices of this type. If we do have a normal distribution here, then the probability of having reliability problems at 648MHz is estimated to be ~3.9% and the probability of having problems at 624MHz is estimated to be ~0.4% (though there were no devices failing at 624 MHz or 648 MHz in the current sample set of 23 devices). But if we don't have a normal distribution, then we have to resort to something like Chebyshev's inequality, which is very conservative and overestimates probabilities. Since we have a one-tailed case (we are only looking at the low clock frequencies), the lower semivariance Chebyshev's inequality seemed to be the most appropriate. But it gives ~12.8% as the failure probability upper bound for 648 MHz and ~5.7% for 624 MHz.

Are there any obvious problems? Can anything be done better? We also have the results of processing these 23 samples presented as another table [2] for your convenience.

Thanks!