@firebug is right that you shuffle your data, so folds have different composition.

However, if the models are stable (i.e. you end up with essentially the same model parameters irrespective of the small changes in the training data caused by including/excluding a few cases), you still get the same prediction for the same test case in every run. If, on the other hand, your models are sensitive to the small changes in the training data between the different folds (i.e. unstable), then you'll get different predictions across the runs for the same test case.

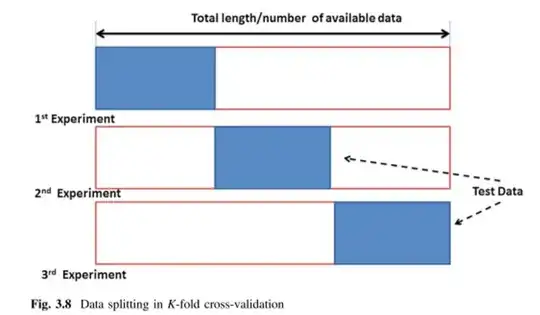

Repeated cross validation allows you to measure this aspect of model stability.
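
To make this concrete, here is a minimal sketch in plain Python (standard library only). Everything in it is an illustrative assumption rather than anything from the question: the toy data, the two models (a least-squares line as a presumably stable model, 1-nearest-neighbour regression as a presumably unstable one), and the helper names `repeated_cv_predictions` and `mean_spread`. It collects the held-out prediction for each case in every repetition and then looks at how much those predictions vary per case.

```python
import random
import statistics


def fit_line(xs, ys):
    """Least-squares fit of y = a + b*x; returns a prediction function."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    a = my - b * mx
    return lambda x: a + b * x


def fit_1nn(xs, ys):
    """1-nearest-neighbour regression; returns a prediction function."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


def repeated_cv_predictions(xs, ys, fit, k=5, repeats=20, seed=0):
    """preds[i] holds one held-out prediction for case i per repetition."""
    n = len(xs)
    preds = [[] for _ in range(n)]
    rng = random.Random(seed)
    for _ in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)  # new fold composition in every repetition
        folds = [idx[j::k] for j in range(k)]
        for test in folds:
            held_out = set(test)
            train = [i for i in idx if i not in held_out]
            model = fit([xs[i] for i in train], [ys[i] for i in train])
            for i in test:
                preds[i].append(model(xs[i]))
    return preds


def mean_spread(preds):
    """Average (over cases) standard deviation of the predictions across runs."""
    return statistics.fmean([statistics.pstdev(p) for p in preds])


# Toy data (assumption): a noisy straight line.
data_rng = random.Random(1)
xs = [data_rng.uniform(0, 10) for _ in range(40)]
ys = [2 * x + data_rng.gauss(0, 1) for x in xs]

spread_line = mean_spread(repeated_cv_predictions(xs, ys, fit_line))
spread_1nn = mean_spread(repeated_cv_predictions(xs, ys, fit_1nn))
print(f"linear model: {spread_line:.3f}   1-NN: {spread_1nn:.3f}")
```

A spread near zero means the same test case gets practically the same prediction no matter how the folds were shuffled; a large spread points to an unstable model. With real data you would compare this spread to the overall prediction error to judge whether the instability matters.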