This question by caveman is popular, but there were no attempted answers for months until my controversial one. It may be that the actual answer below is not, in itself, controversial, merely that the questions are "loaded" questions, because the field seems (to me, at least) to be populated by acolytes of AIC and BIC who would rather use OLS than each other's methods. Please look at all the assumptions listed, and restrictions placed on data types and methods of analysis, and please comment on them; fix this, contribute. Thus far, some very smart people have contributed, so slow progress is being made. I acknowledge contributions by Richard Hardy and GeoMatt22, kind words from Antoni Parellada, and valiant attempts by Cagdas Ozgenc and Ben Ogorek to relate K-L divergence to an actual divergence.

Before we begin, let us review what AIC is. One source for this is Prerequisites for AIC model comparison, and another is from Rob J Hyndman. Specifically, AIC is calculated as

$$2k - 2 \log(L(\theta))\,,$$

where $k$ is the number of parameters in the model and $L(\theta)$ the likelihood function. AIC compares the trade-off between variance ($2k$) and bias ($2\log(L(\theta))$) from modelling assumptions. From Facts and fallacies of the AIC, point 3 "The AIC does not assume the residuals are Gaussian. It is just that the Gaussian likelihood is most frequently used. But if you want to use some other distribution, go ahead." The AIC is the penalized likelihood, whichever likelihood you choose to use. For example, to resolve AIC for Student's-t distributed residuals, we could use the maximum-likelihood solution for Student's-t. The log-likelihood usually applied for AIC is derived from Gaussian log-likelihood and given by

$$ \log(L(\theta)) =-\frac{|D|}{2}\log(2\pi) -\frac{1}{2} \log(|K|) -\frac{1}{2}(x-\mu)^T K^{-1} (x-\mu), $$

$K$ being the covariance structure of the model, $|D|$ the sample size (the number of observations in the dataset), $\mu$ the mean response and $x$ the dependent variable. Note that, strictly speaking, it is unnecessary for AIC to correct for the sample size, because AIC is not used to compare datasets, only models using the same dataset. Thus, we do not have to investigate whether the sample size correction is done correctly or not, but we would have to worry about this if we could somehow generalize AIC to be useful between datasets. Similarly, much is made about $K \gg |D| > 2$ to ensure asymptotic efficiency. A minimalist view might consider AIC to be just an "index," making $K>|D|$ relevant and $K \gg |D|$ irrelevant. However, some attention has been given to this in the form of proposing an altered AIC for $K$ not much larger than $|D|$, called AIC$_c$; see the second paragraph of the answer to Q2 below. This proliferation of "measures" only reinforces the notion that AIC is an index. However, caution is advised when using the "i" word, as some AIC advocates regard use of the word "index" with the same fondness as might be attached to referring to their ontogeny as extramarital.
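To make the definitions above concrete, here is a minimal sketch, not a definitive implementation, of computing AIC from the Gaussian log-likelihood. It assumes independent residuals with a common variance (so $K = \sigma^2 I$, an assumption not made in the text), uses the maximum-likelihood estimate of $\sigma^2$, and counts $\sigma^2$ as one of the $k$ parameters. The function names and the small dataset are hypothetical, chosen only for illustration.

```python
import math

def gaussian_log_likelihood(residuals):
    """Maximized Gaussian log-likelihood, assuming K = sigma^2 * I."""
    n = len(residuals)
    sigma2 = sum(r * r for r in residuals) / n  # ML estimate of the variance
    # With K = sigma^2 * I: log|K| = n*log(sigma2) and the quadratic form equals n.
    return -0.5 * n * (math.log(2 * math.pi) + math.log(sigma2) + 1)

def aic(log_lik, k):
    """AIC = 2k - 2 log L, as defined above."""
    return 2 * k - 2 * log_lik

def constant_residuals(y):
    """Residuals of a constant-mean model."""
    mean = sum(y) / len(y)
    return [yi - mean for yi in y]

def line_residuals(x, y):
    """Residuals of a straight-line model fitted by least squares."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
             / sum((xi - mx) ** 2 for xi in x))
    intercept = my - slope * mx
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]

# Hypothetical data, roughly linear in x.
x = [0, 1, 2, 3, 4, 5, 6, 7]
y = [0.1, 1.2, 1.9, 3.2, 3.8, 5.1, 6.2, 6.8]

# k counts the mean parameters plus the variance.
aic_const = aic(gaussian_log_likelihood(constant_residuals(y)), k=2)
aic_line = aic(gaussian_log_likelihood(line_residuals(x, y)), k=3)
print("constant:", aic_const, "line:", aic_line)
```

Both AIC values are computed on the same dataset, which is the only setting in which comparing them is meaningful; the lower value indicates the preferred model.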

Q1: But a question is: why should we care about this specific fitness-simplicity trade-off?

Answer in two parts. First, the specific question. You should only care because that was the way it was defined. If you prefer, there is no reason not to define a CIC, a caveman information criterion. It will not be AIC, but CIC would produce the same answers as AIC; it does not affect the tradeoff between goodness-of-fit and positing simplicity. Any constant that could have been used as an AIC multiplier, including one times, would have to have been chosen and adhered to, as there is no reference standard to enforce an absolute scale. However, adhering to a standard definition is not arbitrary in the sense that there is room for one and only one definition, or "convention," for a quantity, like AIC, that is defined only on a relative scale. Also see AIC assumption #3, below.
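The point about the multiplier can be checked in a few lines. This is a small sketch with made-up AIC values (the model names, the numbers, and the choice of 0.5 as the "CIC" multiplier are all hypothetical): rescaling every score by the same positive constant leaves the ranking of models unchanged.

```python
def rank_models(scores):
    """Return model names ordered from lowest (best) to highest score."""
    return sorted(scores, key=scores.get)

# Hypothetical AIC values for three models fitted to the same dataset.
aic_values = {"model_1": 102.3, "model_2": 98.7, "model_3": 110.0}

# A hypothetical "CIC" that uses a multiplier of 0.5 instead of 1.
cic_values = {name: 0.5 * value for name, value in aic_values.items()}

print(rank_models(aic_values))
print(rank_models(cic_values))
```

Any positive multiplier behaves the same way, which is why the choice is a matter of convention rather than substance.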

The second answer to this question pertains to the specifics of the AIC tradeoff between goodness-of-fit and positing simplicity, irrespective of how its constant multiplier would have been chosen. That is, what actually affects the "tradeoff"? One of the things that affects it is a degrees-of-freedom readjustment for the number of parameters in a model. This led to defining a "new" AIC called AIC$_c$ as follows:

$$\begin{align}AIC_c &= AIC + \frac{2k(k + 1)}{n - k - 1}\\
&= \frac{2kn}{n-k-1} - 2 \ln{(L)}\end{align} \,,$$

where $n$ is the sample size. Since the weighting is now slightly different when comparing models having different numbers of parameters, AIC$_c$ selects models differently than AIC itself, and identically to AIC when the two models are different but have the same number of parameters.
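A short sketch of the correction, using hypothetical AIC values and a hypothetical sample size, illustrates both statements: when two models share the same $k$ the correction cancels in the difference, and when $k$ differs and $n$ is small the preferred model can change.

```python
def aicc(aic_value, k, n):
    """AIC_c = AIC + 2k(k+1)/(n-k-1); requires n > k + 1."""
    if n - k - 1 <= 0:
        raise ValueError("AICc is undefined unless n > k + 1")
    return aic_value + 2 * k * (k + 1) / (n - k - 1)

n = 10  # hypothetical small sample

# Different k: AIC prefers model B (48 < 50), AICc prefers model A.
aicc_a = aicc(50.0, k=2, n=n)
aicc_b = aicc(48.0, k=5, n=n)
print("AICc A:", aicc_a, "AICc B:", aicc_b)

# Same k: the correction term is identical, so the difference is unchanged.
same_k_difference = aicc(50.0, k=3, n=n) - aicc(48.0, k=3, n=n)
print("difference with equal k:", same_k_difference)
```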

Other methods will also select models differently, for example, "The BIC [sic, Bayesian information criterion] generally penalizes free parameters more strongly than the Akaike information criterion, though it depends..." ANOVA would also penalize supernumerary parameters differently, using partial probabilities of the indispensability of parameter values, and in some circumstances would be preferable to AIC use. In general, any method of assessment of appropriateness of a model will have its advantages and disadvantages. My advice would be to test the performance of any model selection method for its application to the data regression methodology more vigorously than testing the models themselves. Any reason to doubt? Yup, care should be taken when constructing or selecting any model test to select methods that are methodologically appropriate. AIC is useful for a subset of model evaluations; for that see Q3, next. For example, extracting information with model A may be best performed with regression method 1, and for model B with regression method 2, where model B and method 2 sometimes yield non-physical answers, and where neither regression method is maximum likelihood regression (MLR), where the residuals are a multi-period waveform with two distinct frequencies for either model, and the reviewer asks "Why don't you calculate AIC?"

Q3: How does this relate to information theory?

MLR assumption #1. AIC is predicated upon the assumptions of maximum likelihood regression (MLR) applicability to a regression problem. There is only one circumstance in which ordinary least squares regression and maximum likelihood regression have been pointed out to me as being the same. That would be when the residuals from ordinary least squares (OLS) linear regression are normally distributed, and MLR has a Gaussian loss function. In other cases of OLS linear regression, for nonlinear OLS regression, and for non-Gaussian loss functions, MLR and OLS may differ. There are many other regression targets than OLS or MLR or even goodness of fit, and frequently a good answer has little to do with either, e.g., for most inverse problems. There are highly cited attempts (e.g., 1100 times) to generalize AIC for quasi-likelihood so that the dependence on maximum likelihood regression is relaxed to admit more general loss functions. Moreover, MLR for Student's-t, although not in closed form, is robustly convergent. Since Student's-t residual distributions are both more common and more general than, as well as inclusive of, Gaussian conditions, I see no special reason to use the Gaussian assumption for AIC.
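The coincidence of OLS and Gaussian MLR can be written out explicitly. As a sketch, assume independent residuals with a common variance, so that $K = \sigma^2 I$ in the Gaussian log-likelihood given earlier (the symbols $\sigma^2$, $x_i$ and $\mu_i$ are introduced here for illustration only). Then $\log(|K|) = |D| \log(\sigma^2)$ and $K^{-1} = I/\sigma^2$, giving

$$ \log(L(\theta)) = -\frac{|D|}{2}\log(2\pi) - \frac{|D|}{2}\log(\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^{|D|}(x_i-\mu_i)^2 \,. $$

For any fixed $\sigma^2$, only the last term depends on the parameters of the mean response, so maximizing $\log(L(\theta))$ over those parameters is the same as minimizing $\sum_i (x_i-\mu_i)^2$, which is the OLS objective. With a different residual distribution (e.g., Student's-t) the last term is no longer a sum of squares, and the two estimates can differ.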

MLR assumption #2. MLR is an attempt to quantify goodness of fit. It is sometimes applied when it is not appropriate. For example, for trimmed range data, when the model used is not trimmed. Goodness-of-fit is all fine and good if we have complete information coverage. In time series, we do not usually have fast enough information to understand fully what physical events transpire initially or our models may not be complete enough to examine very early data. Even more troubling is that one often cannot test goodness-of-fit at very late times, for lack of data. Thus, goodness-of-fit may only be modelling 30% of the area fit under the curve, and in that case, we are judging an extrapolated model on the basis of where the data is, and we are not examining what that means. In order to extrapolate, we need to look not only at the goodness of fit of 'amounts' but also the derivatives of those amounts failing which we have no "goodness" of extrapolation. Thus, fit techniques like B-splines find use because they can more smoothly predict what the data is when the derivatives are fit, or alternatively inverse problem treatments, e.g., ill-posed integral treatment over the whole model range, like error propagation adaptive Tikhonov regularization.

Another complicated concern, the data can tell us what we should be doing with it. What we need for goodness-of-fit (when appropriate), is to have the residuals that are distances in the sense that a standard deviation is a distance. That is, goodness-of-fit would not make much sense if a residual that is twice as long as a single standard deviation were not also of length two standard deviations. Selection of data transforms should be investigated prior to applying any model selection/regression method. If the data has proportional type error, typically taking the logarithm before selecting a regression is not inappropriate, as it then transforms standard deviations into distances. Alternatively, we can alter the norm to be minimized to accommodate fitting proportional data. The same would apply for Poisson error structure, we can either take the square root of the data to normalize the error, or alter our norm for fitting. There are problems that are much more complicated or even intractable if we cannot alter the norm for fitting, e.g., Poisson counting statistics from nuclear decay when the radionuclide decay introduces an exponential time-based association between the counting data and the actual mass that would have been emanating those counts had there been no decay. Why? If we decay back-correct the count rates, we no longer have Poisson statistics, and residuals (or errors) from the square-root of corrected counts are no longer distances. If we then want to perform a goodness-of-fit test of decay corrected data (e.g., AIC), we would have to do it in some way that is unknown to my humble self. Open question to the readership, if we insist on using MLR, can we alter its norm to account for the error type of the data (desirable), or must we always transform the data to allow MLR usage (not as useful)? Note, AIC does not compare regression methods for a single model, it compares different models for the same regression method.
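The two transforms mentioned above can be motivated by a first-order (delta-method) approximation, $\operatorname{Var}[g(X)] \approx g'(\mu)^2 \operatorname{Var}[X]$, offered here as a sketch rather than a full treatment; the symbols $\lambda$ and $c$ are introduced only for this illustration. For Poisson counts with mean $\lambda$ (so $\operatorname{Var}[X] = \lambda$) and $g(X) = \sqrt{X}$,

$$ \operatorname{Var}\!\left[\sqrt{X}\right] \approx \left(\frac{1}{2\sqrt{\lambda}}\right)^{2} \lambda = \frac{1}{4} \,, $$

and for proportional error with standard deviation $c\,\mu$ and $g(X) = \log(X)$,

$$ \operatorname{Var}\!\left[\log(X)\right] \approx \left(\frac{1}{\mu}\right)^{2} c^{2}\mu^{2} = c^{2} \,. $$

In both cases the variance after transformation no longer depends on the mean, which is what allows residuals to be read as distances in units of a single standard deviation. The approximation is rough for small counts, and, as noted above, it no longer applies once the counts have been decay-corrected.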

AIC assumption #1. It would seem that MLR is not restricted to normal residuals; for example, see this question about MLR and Student's-t. Next, let us assume that MLR is appropriate to our problem so that we track its use for comparing AIC values in theory. Next we assume that we have 1) complete information, and 2) the same type of distribution of residuals (e.g., both normal, both Student's-t) for at least 2 models. That is, we have an accident that two models should now have the same type of distribution of residuals. Could that happen? Yes, probably, but certainly not always.

AIC assumption #2. AIC relates the negative logarithm of the quantity (number of parameters in the model divided by the Kullback-Leibler divergence). Is this assumption necessary? In the general loss functions paper a different "divergence" is used. This leads us to question if that other measure is more general than K-L divergence, why are we not using it for AIC as well?

The mismatched information for AIC from Kullback-Leibler divergence is "Although ... often intuited as a way of measuring the distance between probability distributions, the Kullback–Leibler divergence is not a true metric." We shall see why shortly.

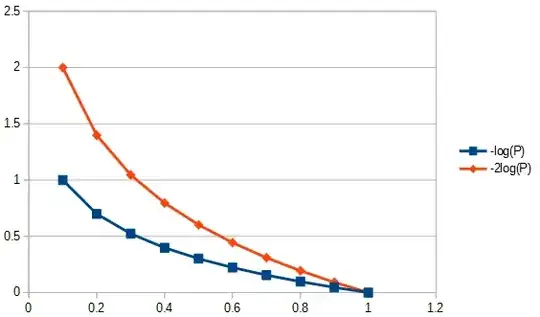

The K-L argument gets to the point where the difference between two things, the model (P) and the data (Q), is

$$D_{\mathrm{KL}}(P\|Q) = \int_X \log\!\left(\frac{{\rm d}P}{{\rm d}Q}\right) \frac{{\rm d}P}{{\rm d}Q} \, {\rm d}Q \,,$$

which we recognize as the entropy of $P$ relative to $Q$.
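For discrete distributions the integral reduces to $\sum_i p_i \log(p_i/q_i)$. The following minimal sketch uses that discrete analogue with two made-up distributions (the values of `p` and `q` are hypothetical, and the function assumes $q_i > 0$ wherever $p_i > 0$) to show one reason the K-L divergence is not a true metric: it is not symmetric in its arguments.

```python
import math

def kl_divergence(p, q):
    """Discrete K-L divergence D(P||Q) = sum p_i * log(p_i / q_i).

    Assumes q_i > 0 wherever p_i > 0; terms with p_i == 0 contribute zero.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Two hypothetical distributions over the same two outcomes.
p = [0.5, 0.5]
q = [0.9, 0.1]

d_pq = kl_divergence(p, q)
d_qp = kl_divergence(q, p)
print("D(P||Q) =", d_pq)
print("D(Q||P) =", d_qp)
```

Swapping the arguments changes the value, so the quantity cannot be a distance in the usual sense, although it is still zero when the two distributions coincide.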

AIC assumption #3. Most formulas involving the Kullback–Leibler divergence hold regardless of the base of the logarithm. The constant multiplier might have more meaning if AIC were relating more than one data set at a time. As it stands when comparing methods, if $AIC_{data,model 1}<AIC_{data,model 2}$ then any positive number times that will still be $<$. Since it is arbitrary, setting the constant to a specific value as a matter of definition is also not inappropriate.

AIC assumption #4. That would be that AIC measures Shannon entropy or "self-information." What we need to know is "Is entropy what we need for a metric of information?"

To understand what "self-information" is, it behooves us to normalize information in a physical context, any one will do. Yes, I want a measure of information to have properties that are physical. So what would that look like in a more general context?

The Gibbs free-energy equation ($\Delta G = \Delta H - T\Delta S$) relates the change in energy to the change in enthalpy minus the absolute temperature times the change in entropy. Temperature is an example of a successful type of normalized information content, because if one hot and one cold brick are placed in contact with each other in a thermally closed environment, then heat will flow between them. Now, if we jump at this without thinking too hard, we say that heat is the information. But is it the relative information that predicts behaviour of a system? Information flows until equilibrium is reached, but equilibrium of what? Temperature, that's what, not heat as in particle velocity of certain particle masses. I am not talking about molecular temperature, I am talking about the gross temperature of two bricks, which may have different masses, be made of different materials, have different densities, etc. None of that do I have to know; all I need to know is that the gross temperature is what equilibrates. Thus if one brick is hotter, then it has more relative information content, and when colder, less.

Now, if I am told one brick has more entropy than the other, so what? That, by itself, will not predict if it will gain or lose entropy when placed in contact with another brick. So, is entropy alone a useful measure of information? Yes, but only if we are comparing the same brick to itself thus the term "self-information."

From that comes the last restriction: To use K-L divergence all bricks must be identical. Thus, what makes AIC an atypical index is that it is not portable between data sets (e.g., different bricks), which is not an especially desirable property that might be addressed by normalizing information content. Is K-L divergence linear? Maybe yes, maybe no. However, that does not matter, we do not need to assume linearity to use AIC, and, for example, entropy itself I do not think is linearly related to temperature. In other words, we do not need a linear metric to use entropy calculations.

One good source of information on AIC is in this thesis. On the pessimistic side this says, "In itself, the value of the AIC for a given data set has no meaning." On the optimistic side this says that models that have close results can be differentiated by smoothing to establish confidence intervals, and much, much more.