Original Question

Let $N(t)$ be a Poisson process with intensity $\lambda$, let $T_1<T_2<\cdots$ be its occurrence times, and let $T_0=0$. For any $t>0$, define the *age* random variable to be

$A_t := t-T_{N(t)} $

and the *residual life time* random variable to be

$R_t:=T_{N(t)+1}-t$

In other words, the age is the time elapsed since the last occurrence, and the residual life time is the time remaining until the next occurrence.
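To make the definitions concrete, here is a minimal simulation sketch, assuming the standard construction of a Poisson process from i.i.d. Exp($\lambda$) interarrival times. The helper name `age_and_residual` and the parameter values are illustrative choices, not part of the question.

```python
import random

def age_and_residual(t, lam, rng):
    """Simulate one Poisson(lam) path and return (A_t, R_t).

    Hypothetical helper: arrivals are built from i.i.d. Exp(lam) gaps,
    starting from T_0 = 0.
    """
    last = 0.0                   # T_{N(t)}: last arrival time <= t (T_0 = 0)
    nxt = rng.expovariate(lam)   # candidate for the next arrival time
    while nxt <= t:
        last = nxt
        nxt += rng.expovariate(lam)
    # nxt is now T_{N(t)+1}, the first arrival strictly after t
    return t - last, nxt - t     # (A_t, R_t)

rng = random.Random(0)  # fixed seed for reproducibility
a_t, r_t = age_and_residual(5.0, 1.0, rng)
print(f"A_t = {a_t:.3f}, R_t = {r_t:.3f}")
```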

Using logic similar to that of the first answer to Relationship between poisson and exponential distribution, one can show that $A_t\sim$ Exp($\lambda$). The argument is reproduced below:

$A_t>a \Leftrightarrow T_{N(t)}<T_{N(t)}+a<t \Leftrightarrow N(T_{N(t)})=N(T_{N(t)}+a)=N(t)$

I understand that the second $\Leftarrow$ requires the last equation to hold *always*.
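Whether each direction of the second equivalence holds can be probed path by path. The sketch below (hypothetical helper `count_direction_failures`; the values of $t$, $\lambda$, $a$ and the number of paths are arbitrary) simulates paths and counts how often each direction fails. It uses the fact that $N(T_{N(t)})=N(t)$ always, and that $N(T_{N(t)}+a)=N(T_{N(t)})$ exactly when no arrival falls in $(T_{N(t)},T_{N(t)}+a]$, i.e. when $T_{N(t)+1}>T_{N(t)}+a$.

```python
import random

def count_direction_failures(t, lam, a, n_paths, seed=0):
    """Count simulated paths violating each direction of
    A_t > a  <=>  N(T_{N(t)} + a) = N(t).

    Hypothetical helper; arrivals come from i.i.d. Exp(lam) gaps.
    """
    rng = random.Random(seed)
    fwd_fail = 0  # A_t > a holds, but N(T_{N(t)} + a) != N(t)
    bwd_fail = 0  # N(T_{N(t)} + a) == N(t) holds, but A_t <= a
    for _ in range(n_paths):
        last, nxt = 0.0, rng.expovariate(lam)
        while nxt <= t:
            last, nxt = nxt, nxt + rng.expovariate(lam)
        age_gt_a = (t - last) > a
        # N(last + a) == N(last) == N(t) iff no arrival in (last, last + a],
        # i.e. iff the next arrival T_{N(t)+1} = nxt comes after last + a.
        counts_equal = nxt > last + a
        if age_gt_a and not counts_equal:
            fwd_fail += 1
        if counts_equal and not age_gt_a:
            bwd_fail += 1
    return fwd_fail, bwd_fail

print(count_direction_failures(t=2.0, lam=1.0, a=0.5, n_paths=10000))
```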

Continuing with user28's proof:

$P(A_t>a)=P[N(T_{N(t)}+a)-N(T_{N(t)})=0]=P[N(a)=0]=e^{-\lambda a}$
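The final formula can be spot-checked by Monte Carlo. The sketch below is an illustration under assumed parameters ($t=3$, $\lambda=1$, and a handful of $a$ values chosen by hand); the helper name `empirical_age_tail` is hypothetical. Other values of $a$, including ones at or above $t$, can be passed in the same way.

```python
import math
import random

def empirical_age_tail(t, lam, a_values, n_paths, seed=0):
    """Estimate P(A_t > a) for each a by simulating n_paths Poisson paths.

    Hypothetical helper; arrivals come from i.i.d. Exp(lam) gaps.
    """
    rng = random.Random(seed)
    ages = []
    for _ in range(n_paths):
        last, nxt = 0.0, rng.expovariate(lam)
        while nxt <= t:
            last, nxt = nxt, nxt + rng.expovariate(lam)
        ages.append(t - last)  # A_t = t - T_{N(t)}
    return {a: sum(age > a for age in ages) / n_paths for a in a_values}

t, lam = 3.0, 1.0
for a, p_hat in empirical_age_tail(t, lam, [0.5, 1.0, 2.0], 20000).items():
    print(f"a={a}: empirical {p_hat:.4f}  vs  exp(-lam*a) {math.exp(-lam * a):.4f}")
```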

However, if I accept that $A_t\sim$ Exp($\lambda$), I quickly run into the following paradox:

Since the exponential distribution is memoryless, one can easily show that $R_t\sim$ Exp($\lambda$) and that $R_t$ is independent of $A_t$. This means that two independent Exp($\lambda$) random variables, $A_t$ and $R_t$, sum to yet another Exp($\lambda$) random variable: the interarrival time $T_{N(t)+1}-T_{N(t)}=A_t+R_t$ of the Poisson process.
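The claim that $A_t+R_t=T_{N(t)+1}-T_{N(t)}$ behaves like an ordinary interarrival time can be probed numerically. The sketch below compares the sample mean of $A_t+R_t$ with the sample mean of $T_1-T_0$, a plain Exp($\lambda$) gap. The values $t=20$, $\lambda=1$, the sample size, and the helper name `gap_covering_t` are assumptions made for illustration.

```python
import random
import statistics

def gap_covering_t(t, lam, rng):
    """Return A_t + R_t = T_{N(t)+1} - T_{N(t)} for one simulated path.

    Hypothetical helper; arrivals come from i.i.d. Exp(lam) gaps.
    """
    last, nxt = 0.0, rng.expovariate(lam)
    while nxt <= t:
        last, nxt = nxt, nxt + rng.expovariate(lam)
    return nxt - last

rng = random.Random(0)
t, lam, n = 20.0, 1.0, 10000
covering = [gap_covering_t(t, lam, rng) for _ in range(n)]
first_gap = [rng.expovariate(lam) for _ in range(n)]  # T_1 - T_0
print("sample mean of A_t + R_t:", statistics.fmean(covering))
print("sample mean of T_1 - T_0:", statistics.fmean(first_gap))
```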

However, according to Wikipedia (https://en.wikipedia.org/wiki/Exponential_distribution), the sum of two independent Exp($\lambda$) random variables should be Gamma($2,\lambda$) in the ($\alpha,\beta$) (shape, rate) parameterization.
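The Wikipedia statement itself is easy to check numerically. The sketch below compares the empirical CDF of a sum of two independent Exp($\lambda$) draws with the Gamma($2,\lambda$) CDF $1-e^{-\lambda x}(1+\lambda x)$ (shape 2, rate $\lambda$) and with the Exp($\lambda$) CDF $1-e^{-\lambda x}$. The value $\lambda=1.5$, the sample size, and the helper name `gamma2_cdf` are arbitrary choices.

```python
import math
import random

def gamma2_cdf(x, lam):
    """CDF of Gamma(shape=2, rate=lam): 1 - e^{-lam x} (1 + lam x)."""
    return 1.0 - math.exp(-lam * x) * (1.0 + lam * x)

rng = random.Random(0)
lam, n = 1.5, 50000
# each sample is the sum of two independent Exp(lam) draws
sums = [rng.expovariate(lam) + rng.expovariate(lam) for _ in range(n)]
for x in (0.5, 1.0, 2.0):
    emp = sum(s <= x for s in sums) / n
    print(f"x={x}: empirical {emp:.4f}  Gamma(2, lam) {gamma2_cdf(x, lam):.4f}  "
          f"Exp(lam) {1 - math.exp(-lam * x):.4f}")
```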

What did I do wrong here? Was I too ambitious in claiming that $T_{N(t)}<T_{N(t)}+a<t \Leftrightarrow N(T_{N(t)})=N(T_{N(t)}+a)=N(t)$?

If the $\Leftarrow$ direction cannot be claimed, then I can only get $P(A_t>a)\le e^{-\lambda a}$. How, then, should I derive the distribution of $A_t$?

First Edit

I also treated the Poisson process as the limit of a Bernoulli process and arrived at the same conclusion, $A_t\sim$ Exp($\lambda$). The key steps are as follows:

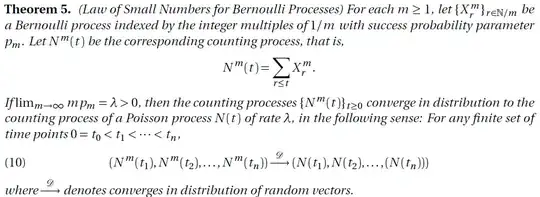

Step 1: Recall the Law of Small Numbers for Bernoulli processes.
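As a reminder of what Step 1 says, the sketch below compares the Binomial($m$, $p_m$) pmf with the Poisson($\lambda$) pmf as $m$ grows. It assumes $m$ trials per unit time (so $t=1$) and the specific choice $p_m=\lambda/m$; any sequence with $mp_m\to\lambda$ would do. The helper names `binom_pmf` and `poisson_pmf` are hypothetical.

```python
import math

def binom_pmf(n, p, j):
    """P(Binomial(n, p) = j); math.comb returns 0 when j > n."""
    return math.comb(n, j) * p**j * (1 - p) ** (n - j)

def poisson_pmf(lam, j):
    """P(Poisson(lam) = j)."""
    return math.exp(-lam) * lam**j / math.factorial(j)

lam = 2.0
for m in (10, 100, 1000):
    p_m = lam / m  # chosen so that m * p_m = lam exactly
    gap = max(abs(binom_pmf(m, p_m, j) - poisson_pmf(lam, j)) for j in range(15))
    print(f"m={m}: max |Binomial - Poisson| over j < 15 = {gap:.2e}")
```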

Step 2: Let $t\in\mathbb{N}$, and consider the Bernoulli process $\{X_r^m\}_{r\in\mathbb{N}/m}$. By time $t$ there are $mt$ trials in total, $N^m(t)$ of which are successes. If $N^m(t)>0$, the time of the last success, $T_{N^m(t)}$, can take any of the values $\frac{1}{m},\frac{2}{m},\dots,t$. Let $k\in\mathbb{N}$ with $0<k<mt$. Then

\begin{split} P\Big(t-T_{N^m(t)}=\frac{k}{m}\Big) &= P\big(\text{the last } k+1 \text{ of the } mt \text{ trials are one success followed by } k \text{ failures}\big) \\ &= p_m(1-p_m)^k \end{split}

When $k=mt$ (no success by time $t$, so $T_{N^m(t)}=T_0=0$), $P\big(t-T_{N^m(t)}=\frac{k}{m}\big)=P(\text{all } mt \text{ trials are failures})=(1-p_m)^{mt}$.
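To check that these probabilities form a proper distribution, the sketch below builds the pmf with exact rational arithmetic. Besides the cases listed above, it includes $k=0$ (the $mt$-th trial itself is a success), for which the same formula gives $p_m(1-p_m)^0=p_m$. The values $m=4$, $t=3$, $p_m=1/5$ and the helper name `age_pmf` are arbitrary illustrative choices.

```python
from fractions import Fraction

def age_pmf(m, t, p):
    """Exact pmf of t - T_{N^m(t)}; key k stands for the value k/m.

    Hypothetical helper. p is the per-trial success probability p_m.
    """
    n = m * t  # total number of trials by time t
    # 0 <= k < mt: trial mt-k is a success, the last k trials are failures
    pmf = {k: p * (1 - p) ** k for k in range(n)}
    # k = mt: every trial fails, so T_{N^m(t)} = T_0 = 0
    pmf[n] = (1 - p) ** n
    return pmf

pmf = age_pmf(m=4, t=3, p=Fraction(1, 5))
print(sum(pmf.values()))  # prints 1 (exact rational arithmetic)
```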

Therefore

\begin{split} P \Big(t-T_{N^m(t)}>\frac{k}{m} \Big) &= \sum_{i=k+1}^{mt-1}P\Big(t-T_{N^m(t)}=\frac{i}{m}\Big) + P\Big(t-T_{N^m(t)}=\frac{mt}{m}\Big) \\ & = p_m(1-p_m)^{k+1} + ... + p_m(1-p_m)^{mt-1} + (1-p_m)^{mt} \\ & = (1-p_m)^{k+1} \end{split}
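The telescoping in the last step can be confirmed exactly for small cases. The sketch sums the terms of the display one by one with `fractions.Fraction` and compares the result with $(1-p_m)^{k+1}$ for every $k$ from $0$ to $mt-1$. The test values $m=5$, $t=3$, $p_m=2/7$ and the helper name `tail_from_terms` are assumptions for illustration.

```python
from fractions import Fraction

def tail_from_terms(m, t, p, k):
    """P(t - T_{N^m(t)} > k/m), summed term by term as in the display.

    Hypothetical helper. p is the per-trial success probability p_m.
    """
    n = m * t
    total = sum(p * (1 - p) ** i for i in range(k + 1, n))  # i = k+1, ..., mt-1
    return total + (1 - p) ** n                              # all-failures term

m, t, p = 5, 3, Fraction(2, 7)
for k in range(m * t):
    assert tail_from_terms(m, t, p, k) == (1 - p) ** (k + 1)
print("closed form (1 - p_m)^(k+1) matches for every k in 0..mt-1")
```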

Taking $x=\frac{k}{m}$ (which lies in $(0,t)$ since $0<k<mt$), setting $p_m=\frac{\lambda}{m}$ so that $mp_m=\lambda$, and letting $m\to\infty$ yields \begin{split} P (t-T_{N^m(t)}>x) = \Big(1-\frac{\lambda}{m}\Big)^{mx+1} \to e^{-\lambda x} \end{split}
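The convergence in the last display can be watched numerically. The sketch fixes $\lambda=1.3$ and $x=0.8$ (arbitrary values), takes $p_m=\lambda/m$ and $k=mx$ (an integer for each $m$ used here), and prints $(1-p_m)^{k+1}$ next to $e^{-\lambda x}$.

```python
import math

lam, x = 1.3, 0.8
for m in (10, 100, 1000, 10000):
    k = round(m * x)                    # grid index with k/m = x
    approx = (1 - lam / m) ** (k + 1)   # (1 - p_m)^{k+1} with p_m = lam/m
    print(f"m={m:>5}: {approx:.6f}   e^(-lam x) = {math.exp(-lam * x):.6f}")
```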

This again leads to the conclusion that $A_t\sim$ Exp($\lambda$), and hence to the same paradox as in the original question.