I see many descriptions of splitting the data set into a training part, a validation part and a test part. We train our models on the training part and choose the best model using the validation part, finally seeing how the best model performs on the test set. We choose the best model in the validation part using some kind of test statistic, say MSE. But what do we do when the MSE for say two models are really close? From the law of parsimony I might want to choose the most parsimonious model (of, say, two competing models) even though the MSE for the parsimonious model is a bit higher. I propose here a model selection method:

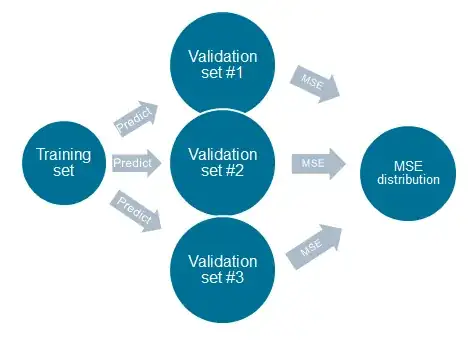

Algorithm would be like this:

1) Train your models on the training set

2) Sample with replacement the validation set into K validation sets

3) Predict using your models on each validation set

4) Calculate the MSE/MSPE for all the models on the K validation sets

4) Calculate the MSE/MSPE distributions from your K MSE/MSPE-calculations

In a scenario where the MSE/MSPE distributions of two competing models are more or less overlapping, I would choose the most parsimonious model. It would basically be a test of $H_0: \text{Predicative capabilities of parsimonious model equals complex model}$.

If the mean of $\text{MSE}_1$ is well within the 95th percentile of distribution of $\text{MSE}_2$, we choose the most parsimonious model of the two, regardless of which model has the lowest MSE.

Question: Does this method make sense to anybody else but me? Also, is this described anywhere else in the statistical litterature?

EDIT: It might seem like a similar question is asked here