Your analysis results essentially answer your question. Much of the loss of "significance" comes from the smaller number of cases in the subset, but your residuals are not normally distributed, which means the p-values are not reliable, and there is evidence that your model is not yet well specified.

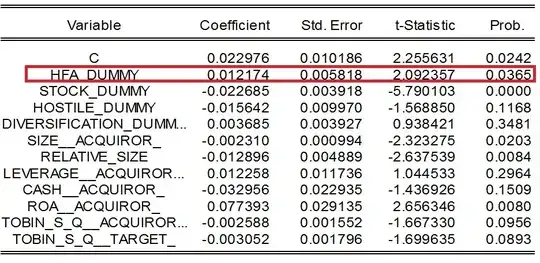

The point estimate of your coefficient of interest is the same to two significant figures in both the full dataset and the post-2007 subset: 0.012. Its standard error is larger in the subset, so the t-statistic (the estimate divided by its standard error) is smaller in magnitude and the coefficient is no longer "significant." You will notice that most other coefficients also have larger standard errors in the subset.

So why are the standard errors larger? The standard error of a regression coefficient depends on the number of cases: all else being equal, it shrinks roughly in proportion to 1/√n. Same coefficient value, fewer cases, larger standard errors, no longer "significant."
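To see the sample-size effect in isolation, here is a minimal simulation sketch in Python (standard library only). It assumes a single predictor and made-up data generated with a true slope of 0.012 throughout; none of it comes from your dataset, and the helper name `fit_simple_ols` is hypothetical. It fits the same model to all 2,000 simulated cases and to the last 500 of them, computing the slope's standard error as s/√Sxx.

```python
import math
import random


def fit_simple_ols(x, y):
    """Fit y = a + b*x by ordinary least squares.

    Returns (slope, standard error of the slope)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # Residual standard deviation with n - 2 degrees of freedom (intercept + slope).
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(rss / (n - 2))
    return slope, s / math.sqrt(sxx)


# Hypothetical data: the true slope is the same for every case, so only n differs.
rng = random.Random(42)
x_all = [rng.gauss(0, 1) for _ in range(2000)]
y_all = [1.0 + 0.012 * xi + rng.gauss(0, 0.2) for xi in x_all]

b_full, se_full = fit_simple_ols(x_all, y_all)
# Treat the last quarter of the cases as the "subset".
b_sub, se_sub = fit_simple_ols(x_all[1500:], y_all[1500:])

print(f"full:   b = {b_full:.4f}, SE = {se_full:.5f}, t = {b_full / se_full:.2f}")
print(f"subset: b = {b_sub:.4f}, SE = {se_sub:.5f}, t = {b_sub / se_sub:.2f}")
print(f"SE ratio = {se_sub / se_full:.2f}; 1/sqrt(n) predicts {math.sqrt(2000 / 500):.2f}")
```

Cutting the sample to a quarter roughly doubles the standard error, so for a similar slope estimate the t-statistic roughly halves, which can be enough to push it below the conventional cutoff.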

In any event, the lack of normality in your residuals calls into serious question the validity of the statistical tests. You might still have reasonable point estimates of the coefficients, but unless the residuals are normally distributed and their spread does not depend on the predicted values, you can't trust the p-values at all.
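As a rough numeric companion to the usual residual-versus-fitted and normal Q-Q plots, the sketch below computes the residuals' skewness, excess kurtosis, and the correlation between residual magnitude and fitted value. It is a hypothetical helper (`residual_diagnostics` is not a library function) run on simulated residuals, not on your model; for normal, constant-spread residuals all three numbers should sit near 0.

```python
import math
import random


def residual_diagnostics(fitted, resid):
    """Crude checks on regression residuals (hypothetical helper).

    Returns (skewness, excess kurtosis, corr(|resid|, fitted))."""
    n = len(resid)
    mean_r = sum(resid) / n
    m2 = sum((r - mean_r) ** 2 for r in resid) / n
    m3 = sum((r - mean_r) ** 3 for r in resid) / n
    m4 = sum((r - mean_r) ** 4 for r in resid) / n
    skew = m3 / m2 ** 1.5
    excess_kurt = m4 / m2 ** 2 - 3.0

    # A clearly positive correlation means the spread grows with the prediction.
    abs_r = [abs(r) for r in resid]
    ma, mf = sum(abs_r) / n, sum(fitted) / n
    cov = sum((a - ma) * (f - mf) for a, f in zip(abs_r, fitted))
    var_a = sum((a - ma) ** 2 for a in abs_r)
    var_f = sum((f - mf) ** 2 for f in fitted)
    corr = cov / math.sqrt(var_a * var_f)
    return skew, excess_kurt, corr


rng = random.Random(1)
fitted = [rng.uniform(1, 10) for _ in range(1000)]
good = [rng.gauss(0, 1) for _ in fitted]        # normal, constant spread
bad = [rng.gauss(0, 0.2 * f) for f in fitted]   # spread grows with fitted value

good_stats = residual_diagnostics(fitted, good)
bad_stats = residual_diagnostics(fitted, bad)
print("good:", [round(v, 2) for v in good_stats])
print("bad: ", [round(v, 2) for v in bad_stats])
```

Plots remain more informative than these summary numbers. If you want formal tests, SciPy provides `scipy.stats.shapiro` (normality) and statsmodels provides `het_breuschpagan` (heteroscedasticity).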

Next, look carefully at all of the coefficients. You will notice that the "Stock_Dummy" and "Relative_Size" coefficients depart even more dramatically from "significance" in the post-2007 subset. This suggests that there may be some fundamental difference between the entire dataset and its post-2007 subset that your model does not capture. Think about what happened to the world economy in 2008.
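One standard way to ask formally whether the post-2007 period follows a different model is a Chow test for a structural break, which compares the residual sum of squares of one pooled fit with that of separate fits for each period. The sketch below is a single-predictor illustration on simulated "pre" and "post" data; the function names are hypothetical, and with your real model you would include all the predictors (equivalently, fit the pooled model with a post-2007 indicator interacted with each predictor). Note that the Chow test assumes equal error variance in the two periods and relies on the same residual assumptions discussed above.

```python
import random


def rss_simple_ols(x, y):
    """Residual sum of squares from fitting y = a + b*x by OLS."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    b = sxy / sxx
    a = y_bar - b * x_bar
    return sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))


def chow_f(x1, y1, x2, y2, k=2):
    """Chow F statistic for a break between two groups.

    k is the number of coefficients per fit (intercept + slope = 2 here).
    With no break, F follows an F(k, n1 + n2 - 2k) distribution."""
    rss_pooled = rss_simple_ols(x1 + x2, y1 + y2)
    rss_split = rss_simple_ols(x1, y1) + rss_simple_ols(x2, y2)
    df = len(x1) + len(x2) - 2 * k
    return ((rss_pooled - rss_split) / k) / (rss_split / df)


rng = random.Random(7)
# Hypothetical periods: the slope changes from 0.5 to -0.2 in the "post" period.
x_pre = [rng.gauss(0, 1) for _ in range(600)]
y_pre = [1.0 + 0.5 * xi + rng.gauss(0, 1) for xi in x_pre]
x_post = [rng.gauss(0, 1) for _ in range(400)]
y_post = [1.0 - 0.2 * xi + rng.gauss(0, 1) for xi in x_post]
# For comparison: the same data-generating process in both periods.
y_post_same = [1.0 + 0.5 * xi + rng.gauss(0, 1) for xi in x_post]

f_break = chow_f(x_pre, y_pre, x_post, y_post)
f_same = chow_f(x_pre, y_pre, x_post, y_post_same)
print("F with a break:   ", round(f_break, 1))
print("F without a break:", round(f_same, 1))
```

With samples this large, the 5% critical value of F(2, n1 + n2 - 4) is about 3.0, so an F far above that is evidence that one set of coefficients does not fit both periods.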

Work on refining your model to get better-behaved residuals and (possibly) to account for the changed economic environment since 2007. It's not clear that you will then get "significance" for your coefficient of interest in the post-2007 subset, but if you are building a predictive model, it can be a good idea to include all relevant predictors even if they do not meet some arbitrary cutoff of "statistical significance."