This exercise is particularly important to me because, so far, I believe I have a rather poor understanding of how to compute joint probability distributions.

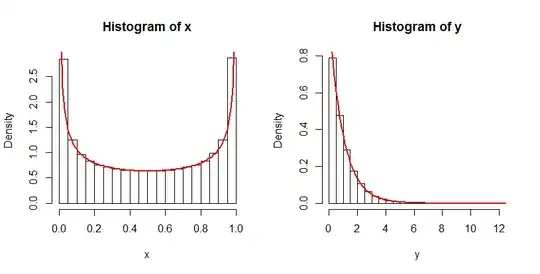

Problem: Let $X$ be a random variable (RV) with density $f(x)= \frac{1}{\pi\sqrt{x(1-x)}}$ for $x \in (0,1)$, and let $Y$ be an RV with the standard exponential distribution (parameter 1). Assuming that $X$ and $Y$ are independent, I am supposed to find the joint distribution of $U=XY$ and $V=(1-X)Y$.

My approach: Although I couldn't rigorously prove it, I think I can state that $(X,Y)$ has a joint probability density given by the product of the two marginal densities, $m(x,y)= \frac{1}{\pi \sqrt{x(1-x)}}e^{-y}$ for $(x,y) \in (0,1) \times (0, \infty)$.

My idea was now to compute $E(g(U,V))$ for an arbitrary bounded continuous $g:\mathbb{R}^2 \to \mathbb{R}$ and to bring it into the form $$E(g(U,V)) = \int_{\mathbb{R}^2}g(u,v)\, h(u,v)\,du\,dv, $$ in the hope of reading off a density function $h$.

$$\begin{aligned} E(g(U,V)) &= E(g(XY,(1-X)Y)) \\ &\overset{3)}{=} \int_{\mathbb{R}^2}g(xy,(1-x)y)\, \frac{1}{\pi \sqrt{x(1-x)}}e^{-y}\, 1_{\{(x,y) \in (0,1) \times (0 , \infty)\}}\, dx\, dy \\ &= \int_{(0,1) \times (0, \infty)} g(xy,(1-x)y)\, \frac{1}{\pi \sqrt{x(1-x)}}e^{-y}\, dx\, dy =: I \end{aligned}$$
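As a numerical sanity check of the equality marked 3) (not a proof), here is a minimal Python sketch that compares a Monte Carlo estimate of $E(g(XY,(1-X)Y))$ with a midpoint-rule approximation of the double integral. The test function `g`, the sample size, the grid sizes, and the cutoff `y_max` are my own arbitrary choices. I am also using that the density of $X$ is the Beta$(1/2,1/2)$ (arcsine) density, so that `betavariate(0.5, 0.5)` can be used to sample $X$.

```python
import math
import random


def g(u, v):
    # Hypothetical bounded continuous test function, chosen only for illustration.
    return math.exp(-u - 2.0 * v)


def monte_carlo_expectation(n=100_000, seed=0):
    """Estimate E[g(XY, (1-X)Y)] by sampling X and Y independently."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.betavariate(0.5, 0.5)  # density 1/(pi*sqrt(x(1-x))) on (0, 1)
        y = rng.expovariate(1.0)       # standard exponential
        total += g(x * y, (1.0 - x) * y)
    return total / n


def integral_against_density(n_theta=200, n_y=2000, y_max=40.0):
    """Midpoint rule for the double integral of g(xy, (1-x)y) * m(x, y).

    The substitution x = sin(theta)^2 turns dx / (pi*sqrt(x(1-x))) into
    (2/pi) d(theta) on (0, pi/2), which removes the endpoint singularities.
    The y-range is truncated at y_max, where exp(-y) is negligible.
    """
    d_theta = (math.pi / 2.0) / n_theta
    d_y = y_max / n_y
    total = 0.0
    for i in range(n_theta):
        x = math.sin((i + 0.5) * d_theta) ** 2
        for j in range(n_y):
            y = (j + 0.5) * d_y
            total += g(x * y, (1.0 - x) * y) * math.exp(-y)
    return total * (2.0 / math.pi) * d_theta * d_y


if __name__ == "__main__":
    print("Monte Carlo estimate:", monte_carlo_expectation())
    print("Integral against m  :", integral_against_density())
```

If the two printed numbers agree up to Monte Carlo error, that is at least consistent with the equality for this particular `g`. It says nothing about why the equality holds in general.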

Choosing the obvious substitution $(u,v)=(xy,(1-x)y)$, I get $(u,v) \in (0, \infty) \times (0, \infty)$ with inverse $x= \frac{u}{u+v}$, $y=u+v$. For the Jacobian matrix of $(x,y)$ with respect to $(u,v)$ I obtain $$J= \begin{pmatrix} \frac{v}{(u+v)^2} & \frac{-u}{(u+v)^2} \\ 1 & 1 \end{pmatrix} \implies |\det J| = \frac{1}{u+v}>0. $$ So finally, for the integral $I$ above, I would obtain $$\begin{aligned} I &= \int_{(0, \infty)^2} g(u,v)\, \underbrace{\frac{1}{\pi\sqrt{\frac{u}{u+v}\left(1-\frac{u}{u+v}\right)}}\,e^{-(u+v)}\, \frac{1}{u+v}}_{=:h(u,v)}\,du\,dv \\ &= \int_{(0, \infty)^2} g(u,v)\, \frac{1}{\pi \sqrt{uv}}\, e^{-(u+v)}\,du\,dv. \end{aligned}$$
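To test the candidate density $\frac{1}{\pi\sqrt{uv}}e^{-(u+v)}$ numerically, here is a minimal Python sketch that compares the empirical value of $P(U \le a, V \le b)$ from simulated pairs with the integral of the candidate density over $(0,a] \times (0,b]$. The function names, sample size, and seed are my own choices. The closed form relies on the candidate density being a product of a function of $u$ and a function of $v$, together with the substitution $u=t^2$, which gives $\int_0^a \frac{e^{-u}}{\sqrt{\pi u}}\,du = \operatorname{erf}(\sqrt{a})$.

```python
import math
import random


def empirical_joint_cdf(a, b, n=100_000, seed=0):
    """Fraction of simulated (U, V) = (XY, (1-X)Y) with U <= a and V <= b."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x = rng.betavariate(0.5, 0.5)  # density 1/(pi*sqrt(x(1-x))) on (0, 1)
        y = rng.expovariate(1.0)       # standard exponential
        if x * y <= a and (1.0 - x) * y <= b:
            hits += 1
    return hits / n


def candidate_joint_cdf(a, b):
    """Integral of exp(-(u+v)) / (pi*sqrt(u*v)) over (0, a] x (0, b].

    The integrand factorizes, and each one-dimensional factor integrates
    to erf(sqrt(.)) after substituting u = t^2.
    """
    return math.erf(math.sqrt(a)) * math.erf(math.sqrt(b))


if __name__ == "__main__":
    for a, b in [(0.5, 0.5), (1.0, 2.0), (0.1, 3.0)]:
        print(a, b, empirical_joint_cdf(a, b), candidate_joint_cdf(a, b))
```

Agreement of the two columns for several pairs $(a,b)$ would support the computation above, but it is only a simulation check and not a substitute for the change-of-variables argument.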

Questions: 1) The obvious question, of course, is whether the above is correct.

2) Do I need to do anything more, or can I just state that the distribution of $(U,V)$ has the density given by the strange underbraced term in the last integral?

3) Given the density function of $(X,Y)$ (assuming my formula for $m(x,y)$ under "My approach" is correct), why is the equality marked 3) true? Intuitively I don't see why it should follow from the more standard formula $$E(f(X))= \int_{\mathbb{R}^d} f(x)\, P_X(dx)$$

Additional question (optional): I am very new to this topic and have little to no experience. If you know of a more elegant way to approach the solution, I would gladly hear about it.