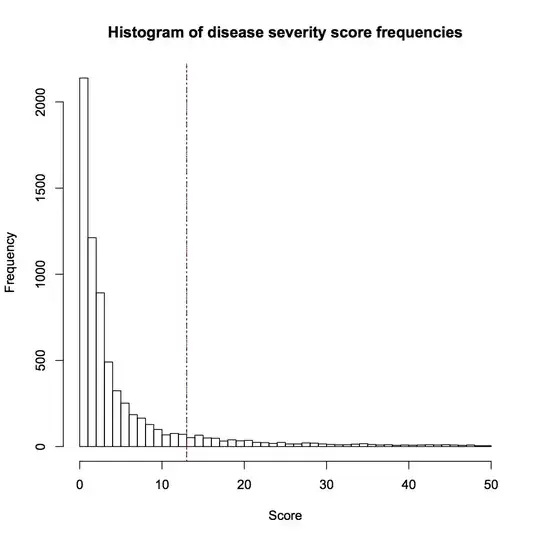

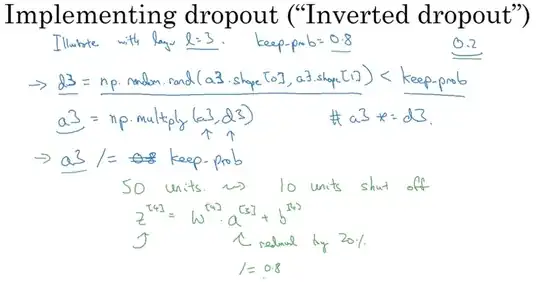

When applying dropout in artificial neural networks, one needs to compensate for the fact that a portion of the neurons are deactivated at training time. There are two common strategies for doing so:

- scaling the activation at test time

- inverting the dropout during the training phase
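To make the difference concrete, here is a minimal sketch of both strategies in plain Python. It assumes `keep_prob` is the probability of keeping a unit active. The function names are hypothetical and are not taken from the slides or from any library; activations are plain lists of floats to keep the example self-contained.

```python
import random


def dropout_train(activations, keep_prob, rng):
    """Plain dropout, training pass: zero each unit with probability 1 - keep_prob."""
    return [a if rng.random() < keep_prob else 0.0 for a in activations]


def dropout_test(activations, keep_prob):
    """Plain dropout, test pass: scale by keep_prob to match the training-time expectation."""
    return [a * keep_prob for a in activations]


def inverted_dropout_train(activations, keep_prob, rng):
    """Inverted dropout, training pass: drop units and rescale the survivors by 1 / keep_prob."""
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def inverted_dropout_test(activations, keep_prob):
    """Inverted dropout, test pass: nothing to do, the scaling already happened in training."""
    return list(activations)


def mean(values):
    return sum(values) / len(values)


if __name__ == "__main__":
    rng = random.Random(0)
    x = [1.0] * 100_000
    keep_prob = 0.5
    # Plain dropout: the training mean shrinks to about keep_prob, so the test pass must scale.
    print(mean(dropout_train(x, keep_prob, rng)), mean(dropout_test(x, keep_prob)))
    # Inverted dropout: the training mean stays near the original, so the test pass is untouched.
    print(mean(inverted_dropout_train(x, keep_prob, rng)), mean(inverted_dropout_test(x, keep_prob)))
```

In both cases the expected activation at training time matches the activation at test time. The strategies differ only in where the scaling is applied.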

The two strategies are summarized in the slides below, taken from Stanford CS231n: Convolutional Neural Networks for Visual Recognition.

Which strategy is preferable, and why?

Scaling the activation at test time:

Inverting the dropout during the training phase:

$0.2 \to 5 \to 1.25$

$0.5 \to 2 \to 2$

$0.8 \to 1.25 \to 5$

– Ken Chan Apr 25 '17 at 05:41