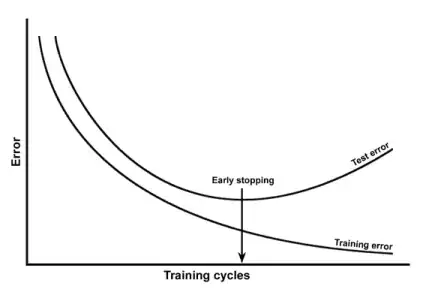

I understand that dropout is used to reduce overfitting in a network. It is a regularization technique that aims to improve generalization.
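For reference, the usual "inverted dropout" formulation is as follows. During training, each activation is zeroed with probability `p` and the survivors are scaled by `1 / (1 - p)`. At inference the layer is the identity. The sketch below is a minimal illustration on a flat list of activations. The function name `dropout` and its parameters are hypothetical and are not any framework's API.

```python
import random

def dropout(activations, p, training, rng=None):
    """Hypothetical inverted-dropout sketch on a flat list of floats.

    p: probability of dropping each activation (assumed to be in [0, 1)).
    training: if False, the input is returned unchanged (inference mode).
    rng: optional random.Random instance, for reproducible masks.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # no dropout at inference time
    rng = rng or random.Random()
    keep = 1.0 - p
    # Keep each unit with probability (1 - p) and rescale it so the
    # expected value of every activation matches inference time.
    return [a / keep if rng.random() >= p else 0.0 for a in activations]
```

With `p=0.5`, every surviving activation of value `1.0` becomes `2.0` and every dropped one becomes `0.0`. Because the rescaling happens during training, no extra scaling is needed when the model is evaluated.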

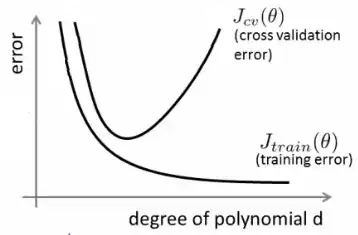

In a convolutional neural network, how can I identify overfitting?

One situation I can think of is when the training accuracy is much higher than the testing or validation accuracy. In that case the model has overfit the training samples and performs poorly on the testing samples.
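The heuristic above can be checked mechanically from per-epoch accuracy histories. The sketch below is one possible version. It assumes two equal-length lists of training and validation accuracies recorded by the training loop. It flags a large final train/validation gap, and it also flags validation accuracy that has stopped improving for several epochs. The function name `diagnose_overfitting` and the `gap_threshold` and `patience` values are hypothetical, illustrative choices, not standard settings.

```python
def diagnose_overfitting(train_acc, val_acc, gap_threshold=0.10, patience=3):
    """Hypothetical overfitting check on per-epoch accuracy histories.

    train_acc, val_acc: equal-length lists of accuracies in [0, 1], one per epoch.
    gap_threshold: assumed cutoff for a "large" final train/val gap.
    patience: assumed number of epochs without a new best validation accuracy
              before validation is considered stalled.
    """
    if not train_acc or len(train_acc) != len(val_acc):
        raise ValueError("histories must be non-empty and of equal length")
    final_gap = train_acc[-1] - val_acc[-1]
    # Epoch with the highest validation accuracy (first one on ties).
    best_val_epoch = max(range(len(val_acc)), key=val_acc.__getitem__)
    epochs_since_best = len(val_acc) - 1 - best_val_epoch
    return {
        "final_gap": final_gap,
        "best_val_epoch": best_val_epoch,
        "large_gap": final_gap > gap_threshold,
        # Training may keep improving while validation peaks and declines.
        "val_stalled": epochs_since_best >= patience,
    }
```

Consider an illustrative history in which training accuracy climbs to 0.99 while validation accuracy peaks at epoch 3 and then slowly falls. Both flags fire in that case, which is the pattern usually described as overfitting. A small gap with validation still improving suggests the model is not (yet) overfitting.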

Is this the only indication of whether to apply dropout, or should dropout be added to the model blindly, in the hope that it increases testing or validation accuracy?