I am trying to compare two one-dimensional distributions. I am using a Kullback-Leibler divergence function for this, but it requires both distributions to have equal length. I am not sure how to make them equal in length without distorting the original distributions (e.g., if I pad the smaller one with zeros, the probability of the value 0 in that distribution becomes very high).

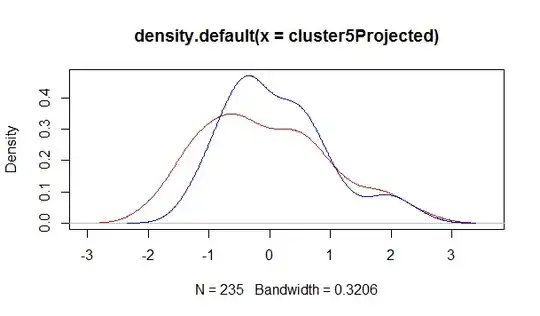

The probability densities of both my distributions are as shown in the below figure,

Note that N=235 refers to the size of the larger distribution; it does not imply that both distributions are of size 235.

Please suggest a way to use Kullback-Leibler divergence for this problem. Input on other methods or tests that could be used for the comparison would be appreciated as well.
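To make the length problem concrete, here is a minimal sketch of one possible approach, not a definitive solution: histogram both samples on the same bin edges, so the two probability vectors have equal length no matter how many observations each sample has. The function name `kl_divergence_from_samples`, the bin count, the smoothing constant `eps`, and the synthetic data are all assumptions made for illustration; they are not taken from the data in the figure.

```python
import numpy as np


def kl_divergence_from_samples(x, y, bins=20, eps=1e-10):
    """Estimate KL(P || Q) from two samples of possibly different sizes.

    Both samples are binned on shared edges, so the resulting probability
    vectors always have length `bins`. `eps` is a small smoothing constant
    (an assumption) that keeps empty bins from producing log(0) or a
    division by zero.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)

    # Shared bin edges spanning the combined range of both samples.
    lo = min(x.min(), y.min())
    hi = max(x.max(), y.max())
    edges = np.linspace(lo, hi, bins + 1)

    # Raw counts per bin; the sample sizes may differ.
    p_counts, _ = np.histogram(x, bins=edges)
    q_counts, _ = np.histogram(y, bins=edges)

    # Smooth, then normalize each histogram to a probability vector.
    p = p_counts.astype(float) + eps
    q = q_counts.astype(float) + eps
    p /= p.sum()
    q /= q.sum()

    return float(np.sum(p * np.log(p / q)))


if __name__ == "__main__":
    # Synthetic stand-in data: 235 values versus 120 values.
    rng = np.random.default_rng(0)
    a = rng.normal(0.0, 1.0, size=235)
    b = rng.normal(0.5, 1.2, size=120)
    print("KL(a || b):", kl_divergence_from_samples(a, b))
    print("KL(b || a):", kl_divergence_from_samples(b, a))
```

The estimate depends on the number of bins and on `eps`, and KL divergence is not symmetric, so the two directions generally give different values. As an alternative that needs no binning, a two-sample Kolmogorov-Smirnov test (for example `scipy.stats.ks_2samp`) works directly on samples of unequal size.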