My question is related to Winstein's explanation of the difference between confidence intervals and credible intervals. Please pardon my (at best) amateur rambling, but this has stumped me thoroughly for the past 5 hours with no realisation in sight!

Link to Keith Winstein's original post: "What's the difference between a confidence interval and a credible interval?"

My intuition leads me straight to agreement with Bayesia's solution -- it just seems like the common-sense (and, dare I say, only) way to give a range of jars that'll make sure you have a 70% success rate. (Or am I confusing the two schools here?)

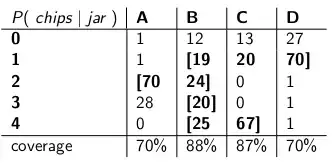

My biggest trouble with the example is how he builds the confidence intervals by grouping jars across a row (horizontally) when the probabilities in the table were calculated down each column (vertically). What on earth is going on here?

It looks to me as if the table is trying to answer "given that I have Jar B, what range of chip counts can I guess so that I'm right 70% of the time?" -- NOT "given that I specify Jars B, C, D as the range, what percentage of the time will I be right when the cookie has 3 chips?", which is what the example seems to be about.
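
To make the two readings concrete, here is a minimal Python sketch of how I understand them. Everything in it is my own assumption for illustration: the table values, the `INTERVALS` rule, the function names `coverage` and `credibility`, and the uniform prior over jars. None of the numbers come from Winstein's post.

```python
# Hypothetical P(chips | jar) table, in percent. Each jar's list is indexed by
# chip count (0..4) and sums to 100. Invented numbers, NOT the table from the post.
P_CHIPS_GIVEN_JAR = {
    "A": [10, 20, 40, 20, 10],
    "B": [5, 15, 20, 40, 20],
    "C": [30, 40, 20, 5, 5],
    "D": [50, 30, 10, 5, 5],
}

# Hypothetical interval rule: for each chip count, the set of jars I would name.
INTERVALS = {
    0: {"C", "D"},
    1: {"C", "D"},
    2: {"A", "B"},
    3: {"A", "B"},
    4: {"A", "B"},
}


def coverage(jar):
    """Fix the true jar, then add up the chip counts whose interval names it.

    This is the 'vertical' sum: the percentage of cookies from this jar
    for which the announced interval contains the true jar.
    """
    return sum(
        p
        for chips, p in enumerate(P_CHIPS_GIVEN_JAR[jar])
        if jar in INTERVALS[chips]
    )


def credibility(chips):
    """Fix the chip count, then ask how likely the true jar is in the interval.

    This is the 'horizontal' view. It needs a prior over jars; a uniform
    prior is assumed here, so the row is simply renormalized.
    """
    row = {jar: column[chips] for jar, column in P_CHIPS_GIVEN_JAR.items()}
    total = sum(row.values())
    return sum(p for jar, p in row.items() if jar in INTERVALS[chips]) / total


if __name__ == "__main__":
    for jar in P_CHIPS_GIVEN_JAR:
        print(f"coverage for jar {jar}: {coverage(jar)}%")
    for chips in INTERVALS:
        print(f"credibility for {chips} chips: {credibility(chips):.3f}")
```

With these invented numbers, every jar is covered at least 70% of the time, yet for a 2-chip cookie the named jars hold only about 67% of the probability under a uniform prior. So the two readings can give different answers for the same set of intervals, which is exactly where I get lost.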

What am I missing here?

Thanks for your time!