I have a set of precise measurements, and I want to count the frequency of each value (how many times it appears).

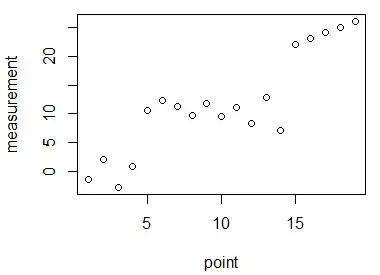

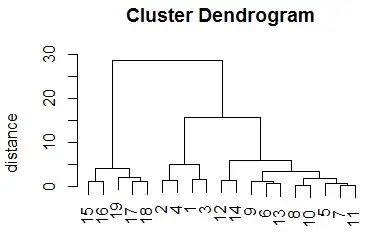
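For illustration, here is what an exact count looks like with the standard library's `collections.Counter`. The readings below are made-up values, not my real data. Because `Counter` groups keys by exact float equality, readings that differ only in the last digits are counted separately:

```python
from collections import Counter

# Hypothetical high-precision readings (made-up values for illustration only).
measurements = [10.00213, 10.00198, 10.00225, 12.50031, 12.49987]

# Counter groups by exact equality, so nearly equal floats stay separate keys.
frequencies = Counter(measurements)

# Every count is 1, even though the readings visibly form two groups.
print(frequencies)
```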

The problem is that these are very precise measurements, so with a naive method I would probably end up with every value at frequency one. I therefore need a method that clusters similar numbers and counts them as the same value. The catch is that this has to be done "dynamically": I can't just set a fixed interval width for the clusters, because I would like to get results like these:

Data: 1, 2, 3, 4, 5, 6, 7, 8, 9 -> 1 and 2 are in different clusters

Data: 1, 2, 100, 200, 300, 400 -> 1 and 2 are in the same cluster
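A minimal sketch of one way to get exactly this behaviour, under my own assumptions rather than any established method: sort the values and start a new cluster wherever the gap between neighbours is larger than a fraction of the mean gap. The fraction (`ratio`, 0.5 here) is a hypothetical tuning knob, and `cluster_1d` / `cluster_frequencies` are hypothetical helper names, not a library API. Because the threshold is derived from the data's own spacing, the gap of 1 between 1 and 2 splits them in the first example (mean gap 1) but not in the second (mean gap 79.8):

```python
from statistics import mean


def cluster_1d(values, ratio=0.5):
    """Split sorted values wherever a gap is larger than `ratio` * mean gap.

    `ratio` is a hypothetical tuning parameter, not a standard constant.
    """
    data = sorted(values)
    if len(data) < 2:
        return [data] if data else []
    gaps = [b - a for a, b in zip(data, data[1:])]
    threshold = ratio * mean(gaps)  # data-driven, so it adapts to the spacing
    clusters = [[data[0]]]
    for gap, value in zip(gaps, data[1:]):
        if gap > threshold:
            clusters.append([value])    # large gap: start a new cluster
        else:
            clusters[-1].append(value)  # small gap: same cluster as previous
    return clusters


def cluster_frequencies(values, ratio=0.5):
    """Return (cluster mean, count) pairs: one 'value' and frequency per cluster."""
    return [(mean(c), len(c)) for c in cluster_1d(values, ratio)]


print(cluster_1d([1, 2, 3, 4, 5, 6, 7, 8, 9]))
# [[1], [2], [3], [4], [5], [6], [7], [8], [9]]
print(cluster_1d([1, 2, 100, 200, 300, 400]))
# [[1, 2], [100], [200], [300], [400]]
print(cluster_frequencies([1, 2, 100, 200, 300, 400]))
# [(1.5, 2), (100, 1), (200, 1), (300, 1), (400, 1)]
```

The mean gap is used rather than the median because, with many tightly packed readings, the median gap would be tiny and nearly every value would land in its own cluster. The trade-off is that an isolated far-away value inflates the mean gap and can merge values that would otherwise be separate, so `ratio` would need checking against real data.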

I have found some papers on computing similarities and on pattern recognition algorithms, but I really can't see how I should apply them here. I am fairly convinced that traditional statistics, applied to the initial data set, should be able to help cluster these values. By the way, I will eventually have to implement this in Python, so no R (or other) magic please :) !