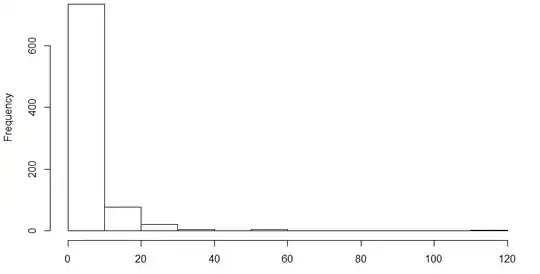

I want to run a simple regression predicting score on some task, from number of minutes spent doing another activity. My N is ~800. The score variable is normally distributed and measured in percentages, but the minutes spent variable is highly positively skewed (and measured in minutes), to the point where it may be considered discrete. The histogram of that variable looks like this:

Ultimately, I want to be able to interpret the regression coefficient associated with minutes spent. What is the best approach to building a model that keeps my Type I error rate under control?
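One way to check the Type I error question empirically is a small null simulation: generate a skewed, zero-heavy predictor, generate scores that do not depend on it, and count how often the usual OLS t-test on the slope rejects at α = 0.05. The sketch below is illustrative only. The predictor generator (`simulate_minutes`, 60% exact zeros, the rest exponential with mean 30, rounded) and the score distribution (normal, mean 70, SD 10) are hypothetical assumptions, not the real data. With normal errors, the OLS t-test is exact conditional on the predictor values however skewed they are, so the printed rate should sit near 0.05. Swapping in a skewed error distribution is how one would probe sensitivity to non-normal residuals.

```python
import numpy as np
from scipy import stats


def ols_slope_test(x, y):
    """Return (slope, two-sided p-value) for y ~ a + b*x fitted by OLS."""
    n = len(x)
    X = np.column_stack([np.ones(n), x])
    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sigma2 = resid @ resid / (n - 2)          # residual variance estimate
    cov = sigma2 * np.linalg.inv(X.T @ X)      # classical OLS covariance matrix
    t_stat = beta[1] / np.sqrt(cov[1, 1])
    p = 2 * stats.t.sf(abs(t_stat), df=n - 2)  # two-sided t-test on the slope
    return beta[1], p


def simulate_minutes(rng, n, p_zero=0.6, mean_minutes=30.0):
    """Hypothetical predictor: many exact zeros, the rest right-skewed whole minutes."""
    nonzero = rng.random(n) >= p_zero
    return np.where(nonzero, np.round(rng.exponential(mean_minutes, n)), 0.0)


def type1_error_rate(n=800, reps=2000, alpha=0.05, seed=1):
    """Fraction of null data sets in which the slope t-test rejects at level alpha."""
    rng = np.random.default_rng(seed)
    rejections = 0
    for _ in range(reps):
        x = simulate_minutes(rng, n)
        y = rng.normal(70.0, 10.0, n)  # null is true: score does not depend on minutes
        _, p = ols_slope_test(x, y)
        rejections += p < alpha
    return rejections / reps


print(type1_error_rate())
```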

- Would bootstrapping fix this issue, given that most of the values hover around 0?

- Would it be more suitable to log-transform minutes spent and then run the usual t-test on the coefficient? If so, how should the coefficient be interpreted?

- Does the fact that my outcome variable (score) is a percentage change the model I should use?
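For the bootstrapping option, the usual approach for a regression slope is to resample whole (minutes, score) rows with replacement (a case-resampling or "pairs" bootstrap) and read a confidence interval off the distribution of re-estimated slopes, rather than resampling the predictor on its own. The sketch below computes a percentile interval; the simulated data (60% zeros, exponential minutes, true slope 0.1, noise SD 10) are hypothetical stand-ins for the real data set, and 2000 resamples is an arbitrary choice.

```python
import numpy as np


def ols_slope(x, y):
    """OLS slope of y on x, with an intercept."""
    xc = x - x.mean()
    return (xc @ (y - y.mean())) / (xc @ xc)


def pairs_bootstrap_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Slope estimate plus a percentile CI from resampling (x, y) pairs."""
    rng = np.random.default_rng(seed)
    n = len(x)
    slopes = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, n)  # draw n row indices with replacement
        slopes[b] = ols_slope(x[idx], y[idx])
    lo, hi = np.quantile(slopes, [alpha / 2, 1 - alpha / 2])
    return ols_slope(x, y), (lo, hi)


# Hypothetical data, for illustration only.
rng = np.random.default_rng(42)
n = 800
minutes = np.where(rng.random(n) < 0.6, 0.0, np.round(rng.exponential(30.0, n)))
score = 60 + 0.1 * minutes + rng.normal(0, 10, n)

est, (lo, hi) = pairs_bootstrap_ci(minutes, score)
print(f"slope = {est:.3f}, 95% bootstrap CI = ({lo:.3f}, {hi:.3f})")
```

Resampling rows keeps each person's minutes and score together, so the interval reflects the actual joint distribution, including the pile-up at 0, without assuming normal or constant-variance residuals. The coefficient being estimated is the same untransformed slope (points of score per minute); only the uncertainty estimate changes.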

I found a similar thread, "Should I use t-test on highly skewed and discrete data?", but I'm specifically wondering about the viability of bootstrapping versus log transformation for this problem.
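For the log-transformation option, the zeros need handling first because log(0) is undefined. A common, but arbitrary, choice is log(1 + minutes). The sketch below assumes that +1 offset and fits score = a + b · log(1 + minutes) by OLS. Because score is in percentage points, b is the change in predicted score per one-unit increase in log(1 + minutes). A more readable version is b · ln 2, the change in predicted score when (1 + minutes) doubles. The simulated data are hypothetical.

```python
import numpy as np


def fit_log1p_model(minutes, score):
    """Fit score = a + b * log(1 + minutes) by OLS; return (a, b)."""
    z = np.log1p(minutes)  # log(1 + x) keeps the zeros defined
    X = np.column_stack([np.ones_like(z), z])
    (a, b), *_ = np.linalg.lstsq(X, score, rcond=None)
    return a, b


def predicted_change(b, minutes_from, minutes_to):
    """Change in predicted score when minutes goes from minutes_from to minutes_to."""
    return b * (np.log1p(minutes_to) - np.log1p(minutes_from))


# Hypothetical data, for illustration only.
rng = np.random.default_rng(7)
n = 800
minutes = np.where(rng.random(n) < 0.6, 0.0, np.round(rng.exponential(30.0, n)))
score = 60 + 3 * np.log1p(minutes) + rng.normal(0, 10, n)

a, b = fit_log1p_model(minutes, score)
print(f"b = {b:.2f} points per unit of log(1 + minutes)")
print(f"doubling (1 + minutes) changes predicted score by {b * np.log(2):.2f} points")
print(f"going from 9 to 19 minutes: {predicted_change(b, 9, 19):.2f} points")
```

Since log(1 + 0) = 0, the intercept a is the predicted score at zero minutes. Under this transform, going from 0 to 1 minute counts as a doubling of (1 + minutes), the same as going from 9 to 19 minutes. This is one reason the choice of offset affects how the coefficient should be read.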