If X1 and X2 take positive values and are positively correlated with Y, then of course X1*X2 will tend to be significant when used alone in a model: it is positively correlated with both X1 and X2 and therefore should be correlated with Y as well. But that tells us nothing about whether there is an interaction.

Let's look at a small example using the following data:

| X1 | X2 | Y |
|---:|---:|---:|
| 14.041 | 13.6205 | 25.6893 |
| 17.1413 | 14.2088 | 32.3733 |
| 18.2874 | 16.1873 | 34.261 |
| 18.285 | 14.6539 | 31.8483 |
| 13.9504 | 13.6742 | 26.6726 |
| 17.0211 | 13.9576 | 31.6815 |
| 15.6146 | 17.4936 | 32.7113 |
| 14.4232 | 16.9606 | 30.4182 |
| 14.8142 | 15.5246 | 31.1612 |
| 15.4794 | 14.4887 | 31.1827 |
| 10.9243 | 16.1642 | 28.1331 |
| 14.8455 | 15.1099 | 29.4972 |

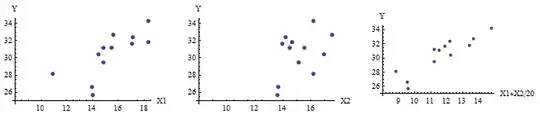

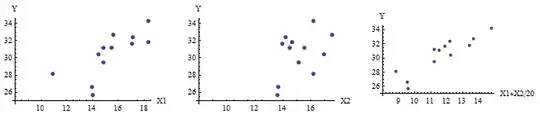

X1, X2, and X1*X2 (the product scaled down by 1/20 for easy plotting) are all correlated with Y, but the product X1*X2 is clearly much more strongly correlated with Y than either X1 or X2.

(Correlation is a useful way to describe these relationships because all three plots appear sufficiently linear and homoscedastic.)
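The correlations behind the plots can be checked directly. This is a minimal NumPy sketch, assuming only the table above; the variable names are illustrative, and it computes Pearson correlations without plotting.

```python
import numpy as np

# Rows of the data table: X1, X2, Y.
data = np.array([
    [14.041, 13.6205, 25.6893],
    [17.1413, 14.2088, 32.3733],
    [18.2874, 16.1873, 34.261],
    [18.285, 14.6539, 31.8483],
    [13.9504, 13.6742, 26.6726],
    [17.0211, 13.9576, 31.6815],
    [15.6146, 17.4936, 32.7113],
    [14.4232, 16.9606, 30.4182],
    [14.8142, 15.5246, 31.1612],
    [15.4794, 14.4887, 31.1827],
    [10.9243, 16.1642, 28.1331],
    [14.8455, 15.1099, 29.4972],
])
x1, x2, y = data.T
product = x1 * x2

# Pearson correlation of each candidate predictor with Y.
r_x1 = np.corrcoef(x1, y)[0, 1]
r_x2 = np.corrcoef(x2, y)[0, 1]
r_prod = np.corrcoef(product, y)[0, 1]

print(f"corr(X1, Y)    = {r_x1:.3f}")
print(f"corr(X2, Y)    = {r_x2:.3f}")
print(f"corr(X1*X2, Y) = {r_prod:.3f}")
```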

Let's do some regression.

Y ~ X1*X2 (the product alone). The p-value is 0.000072 and the mean square residual is 1.37.

Y ~ X1 + X2. The p-values are 0.00041 and 0.00038, respectively, and the mean square residual is 1.33. The individual p-values are not as low as in the preceding model (if you care about such things), and the mean square residual is only a tiny bit lower, despite using two variables instead of one.

Y ~ X1 + X2 + X1*X2. The p-values are 0.00033, 0.0028, and 0.129, respectively. The interaction (X1*X2) is not significant. (The mean square residual has improved to 1.098, though.)
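The three regressions can be reproduced with a short sketch. It assumes ordinary least squares with an intercept and uses NumPy only; `fit_ols` is a hypothetical helper, not a library function. It reports t-statistics rather than p-values; two-sided p-values would follow from a t distribution with the residual degrees of freedom, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available. The last line compares the second and third models with a nested-model F test, which for a single added term equals the square of that term's t-statistic.

```python
import numpy as np

# Rows of the data table: X1, X2, Y.
data = np.array([
    [14.041, 13.6205, 25.6893],
    [17.1413, 14.2088, 32.3733],
    [18.2874, 16.1873, 34.261],
    [18.285, 14.6539, 31.8483],
    [13.9504, 13.6742, 26.6726],
    [17.0211, 13.9576, 31.6815],
    [15.6146, 17.4936, 32.7113],
    [14.4232, 16.9606, 30.4182],
    [14.8142, 15.5246, 31.1612],
    [15.4794, 14.4887, 31.1827],
    [10.9243, 16.1642, 28.1331],
    [14.8455, 15.1099, 29.4972],
])
x1, x2, y = data.T
product = x1 * x2


def fit_ols(columns, y):
    """Hypothetical helper: OLS with an intercept column prepended.

    Returns (coefficients, t-statistics, residual sum of squares,
    residual mean square, residual degrees of freedom)."""
    X = np.column_stack([np.ones_like(y)] + list(columns))
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    rss = float(resid @ resid)
    df = len(y) - X.shape[1]
    ms_resid = rss / df
    # Standard errors from the usual OLS covariance estimate s^2 (X'X)^{-1}.
    se = np.sqrt(ms_resid * np.diag(np.linalg.inv(X.T @ X)))
    return coef, coef / se, rss, ms_resid, df


m1 = fit_ols([product], y)          # Y ~ product alone
m2 = fit_ols([x1, x2], y)           # Y ~ X1 + X2
m3 = fit_ols([x1, x2, product], y)  # Y ~ X1 + X2 + product

for name, (coef, t, rss, ms, df) in [("product only", m1),
                                      ("X1 + X2", m2),
                                      ("X1 + X2 + product", m3)]:
    # t[0] is the intercept; the remaining entries belong to the predictors.
    print(f"{name:>18}: t = {np.round(t[1:], 2)}, MS residual = {ms:.3f} (df {df})")

# Nested-model F test for adding the product to X1 + X2 (1 numerator df).
f_stat = (m2[2] - m3[2]) / m3[3]
print(f"F for the interaction = {f_stat:.3f}")
```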

This conforms perfectly to the description of the problem: the interaction is significant on its own, but its significance goes away once X1 and X2 are included in the model.

What is going on? I generated these data by drawing X1 and X2 independently from a Normal(15,2) distribution and then forming Y by adding Normal(0,1) error to X1 + X2. In other words, apart from that error, Y depends linearly on X1 and X2; there is no interaction.
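The same pattern shows up when the data-generating process is repeated many times. This is a simulation sketch under stated assumptions: "Normal(15,2)" is read as mean 15 and standard deviation 2, the seed and the 2000 replications are arbitrary, `t_stats_ols` is a hypothetical helper, and significance is judged at the two-sided 5% level using hard-coded t critical values (2.228 for 10 df, 2.306 for 8 df).

```python
import numpy as np


def t_stats_ols(columns, y):
    """Hypothetical helper: t-statistics of an OLS fit with an intercept."""
    X = np.column_stack([np.ones_like(y)] + list(columns))
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    df = len(y) - X.shape[1]
    ms_resid = resid @ resid / df
    se = np.sqrt(ms_resid * np.diag(np.linalg.inv(X.T @ X)))
    return coef / se


rng = np.random.default_rng(2024)  # arbitrary seed
n, reps = 12, 2000
T_CRIT_10 = 2.228  # two-sided 5% critical value, t with 10 df (product-only model)
T_CRIT_8 = 2.306   # two-sided 5% critical value, t with 8 df (full model)

alone_sig = 0
interaction_sig = 0
for _ in range(reps):
    # Assumes the "2" in Normal(15,2) is the standard deviation.
    x1 = rng.normal(15, 2, n)
    x2 = rng.normal(15, 2, n)
    y = x1 + x2 + rng.normal(0, 1, n)  # purely additive: no interaction
    prod = x1 * x2
    t_alone = t_stats_ols([prod], y)[1]          # product used alone
    t_full = t_stats_ols([x1, x2, prod], y)[3]   # product next to X1 and X2
    alone_sig += int(abs(t_alone) > T_CRIT_10)
    interaction_sig += int(abs(t_full) > T_CRIT_8)

rate_alone = alone_sig / reps
rate_interaction = interaction_sig / reps
print(f"product significant when used alone:  {rate_alone:.3f}")
print(f"interaction significant in full model: {rate_interaction:.3f}")
```

Because the true model contains no interaction, the rejection rate for the interaction in the full model should hover near the nominal 5%, while the product used alone is almost always "significant".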

We can understand this geometrically: the graph of a surface of the form $Y = \alpha X_1 + \beta X_2$, for positive $\alpha, \beta, X_1, X_2$, is a plane; the graph of a surface of the form $Y = \gamma X_1 X_2$ ($\gamma$ positive) is a hyperbolic paraboloid (a saddle), which is very flat over a region where $X_1$ and $X_2$ stay well away from $0$. That hyperbolic paraboloid (the first regression) can be a more than adequate approximation to the plane (the second regression), which in turn might be a better model for $Y$.
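A one-line expansion around the center of these data, $(15, 15)$, makes the flatness concrete. The identity is exact:

$$
X_1 X_2 = 15X_1 + 15X_2 - 225 + (X_1 - 15)(X_2 - 15),
$$

so, dividing by $15$ and rearranging,

$$
X_1 + X_2 = 15 + \tfrac{1}{15} X_1 X_2 - \tfrac{1}{15}(X_1 - 15)(X_2 - 15).
$$

When $X_1$ and $X_2$ deviate from $15$ by only a few units, the last term is small, so $Y = X_1 + X_2 + \varepsilon$ is nearly a linear function of $X_1 X_2$ (intercept about $15$, slope about $1/15$). That is why the product alone fits almost as well as the plane.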

As others have explained at length in the referenced thread, you can rarely interpret an interaction by itself (regression 1); you need to include it in the context of the linear terms (regression 3) or formulate a different model altogether (as described in my reply in the referenced thread).