SVM, both for classification and regression, are about optimizing a function via a cost function, however the difference lies in the cost modeling.

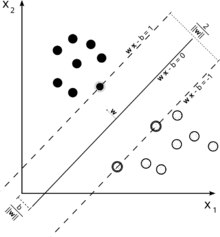

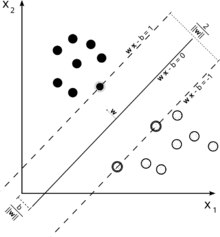

Consider this illustration of a support vector machine used for classification.

Since our goal is a good separation of the two classes, we try to formulate a boundary that leaves as wide a margin as possible between the instances that are closest to it (support vectors), with instances falling into this margin being a possibility, altough incurring a high cost (in the case of a soft margin SVM).

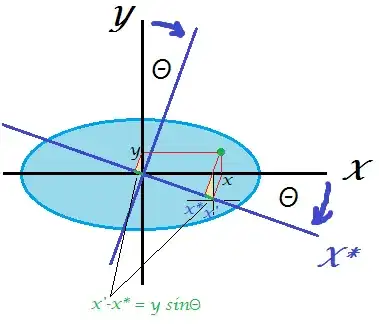

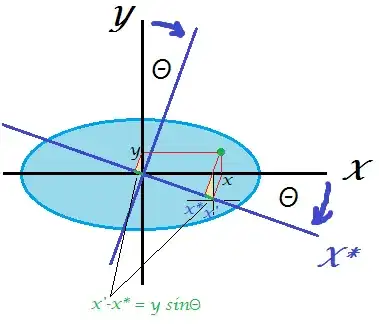

In the case of regression, the goal is to find a curve that minimizes the deviation of the points to it. With SVR, we also use a margin, but with an entirely different goal - we don't care about instances that lie within a certain margin around the curve, because the curve fits them somewhat well. This margin is defined by the parameter $\epsilon$ of the SVR. Instances that fall within the margin do not incur any cost, that's why we refer to the loss as 'epsilon-insensitive'.

For both sides of the decision function we define a slack variable each, $\xi_+, \xi_-$, to account for deviations outside of the $\epsilon$-zone.

This gives us the optimization problem (see E. Alpaydin, Introduction to Machine Learning, 2nd Edition)

$$min \frac{1}{2} ||w||^2 + C\sum_{t} (\xi_+ + \xi_-)$$

subject to

$$r^t - (\textbf{w}^T \textbf{x} + w_0) \leq \epsilon + \xi_{+}^{t}\\

(\textbf{w}^T \textbf{x} + w_0)-r^t \leq \epsilon + \xi_{-}^{t}\\

\xi_{+}^{t},\xi_{-}^{t} \geq 0$$

Instances outside the margin of a regression SVM incur costs in the optimization, so aiming to minimize this cost as part of the optimization refines our decision function, but in fact does not maximize the margin as it would be the case in SVM classification.

This should have answered the first two parts of your question.

Regarding your third question: as you might have picked up by now, $\epsilon$ is an additional parameter in the case of SVR. The parameters of a regular SVM still remain, so the penalty term $C$ as well as other parameters that are required by the kernel, like $\gamma$ in case of the RBF kernel.