I think the authors concede that as well. Quoting them:

> One particular instantiation of a memory network is where the components are neural networks.

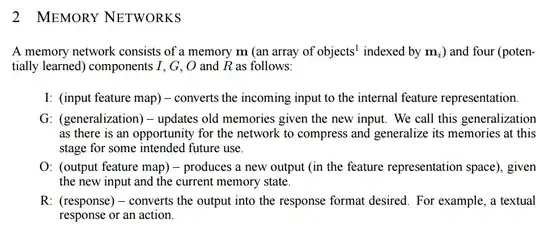

Memory Networks are essentially a kind of lookup table. For them to work well, however, it is crucial that there is some generalization across different memory cells. In this work, that generalization is achieved with vector embeddings, which technically amount to one layer of a neural network. The following are the Artificial Neural Network (ANN) components that can be used with the four main functions the authors define.

- $I:$ (Input feature map) - This component can use any sort of input embedding; word2vec vectors of the words would be one example. Alternatively, you could use a matrix $U$ that maps the bag-of-words representation to a denser one. In this work, they just use the bag-of-words representation as the input and leave the embedding to the other modules, as will become clear in a moment.

- $G:$ (Generalization) - This component is responsible for updating the memory given a new input. If each input has its own slot, that does not lead to much generalization. The hashing function mentioned in section 3.3 is not a generalization either, because it is just a trick to reduce computation. Whenever generalization is required, there should be fewer parameters (degrees of freedom) than instances. Updating other memory cells based on the current input may lead to generalization, and could involve neural networks in the form of the forget and input gates used in LSTMs.

- $O:$ (Output feature map) - As is clear from the work, weight matrices are used for linear transformations and these are essentially single layer neural networks.

- $R:$ (response) - After choosing which memory cells to base the answer on, an RNN (or LSTM) is used to generate natural language answers.
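To make the data flow through the four components concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation. The toy vocabulary, the function names (`input_map`, `generalize`, `output_map`, `respond`), the embedding dimension of 4, and the random untrained matrices `U_O` and `U_R` are all assumptions for illustration. The response step uses the simplest single-word variant rather than an RNN, and it sums the query and memory vectors instead of keeping them in separate feature slots. The scoring follows the embedding form $s(x, y) = x^\top U^\top U y$, which is where the "single layer neural network" comes in.

```python
import numpy as np

# Hypothetical toy vocabulary; not from the paper.
VOCAB = ["john", "mary", "went", "to", "the", "kitchen", "garden", "where", "is"]
WORD_TO_ID = {word: i for i, word in enumerate(VOCAB)}


def input_map(text):
    """I: turn raw text into a bag-of-words count vector (no embedding here)."""
    x = np.zeros(len(VOCAB))
    for word in text.lower().split():
        if word in WORD_TO_ID:
            x[WORD_TO_ID[word]] += 1.0
    return x


def generalize(memory, x):
    """G: simplest update, write the new input into the next free slot."""
    memory.append(x)
    return memory


def output_map(memory, q, U_O):
    """O: pick the memory whose embedding best matches the embedded query."""
    scores = [(U_O @ q) @ (U_O @ m) for m in memory]
    return int(np.argmax(scores))


def respond(q, m, U_R):
    """R: simplest response, the single word scoring highest against q and m."""
    context = U_R @ (q + m)        # simplification: sum query and memory counts
    word_scores = U_R.T @ context  # column i of U_R embeds vocabulary word i
    return VOCAB[int(np.argmax(word_scores))]


rng = np.random.default_rng(0)
U_O = rng.normal(size=(4, len(VOCAB)))  # untrained; dimension 4 is an assumption
U_R = rng.normal(size=(4, len(VOCAB)))

memory = []
for sentence in ["john went to the kitchen", "mary went to the garden"]:
    generalize(memory, input_map(sentence))

q = input_map("where is john")
best = output_map(memory, q, U_O)
print(best, respond(q, memory[best], U_R))
```

Since the matrices are random, the printed answer is arbitrary; the point is only that every learned part is a linear map, and that `generalize` as written gives each input its own slot, which is exactly the case with no real generalization.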

I agree with the OP that all these components can be used without neural networks as well. But the single-layer NNs used throughout can serve as a justification. There should be further work on improving the generalization ($G$), and I think there is scope for using ANNs there as well.