I'm conducting a Two-Way ANOVA with my two factors being Sex and Cohort. I have data from two cohorts of subjects, with each cohort consisting of males and females that were measured on one response variable. (Because of some exclusions, there are unequal sample sizes between groups.)

Prior to running the ANOVA, my understanding is that I must test the data for normality and homogeneity of variance (HOV).

Do I test for normality and HOV in each of the four groups separately? (i.e. test for normality in data from cohort 1 males only, then test for normality in data from cohort 1 females only, then cohort 2 males, then cohort 2 females?)

Does the assumption of HOV apply to all four groups, i.e. The null hypothesis is "Cohort 1 male variance = Cohort 1 female variance = Cohort 2 male variance = Cohort 2 female variance?"

I used the Shapiro-Wilk test for normality in each group, and Levene's test of equality of error variances. Unfortunately, in all groups, the data are very non-normal and give highly significant values for Levene's test. I have tried several transformations (square root, log, natural log, square) but nothing has worked to normalize the data so far.

I'm wondering how to proceed? I've read that unlike Welch's test for a one-way ANOVA, there is no good two-way ANOVA equivalent for non-normal data with heterogeneous variances.

Are there any other transformations that could work? If not, would the best option be to simply run the ANOVA, but mention that assumptions were violated that may impact the test results?

EDIT (to add more information):

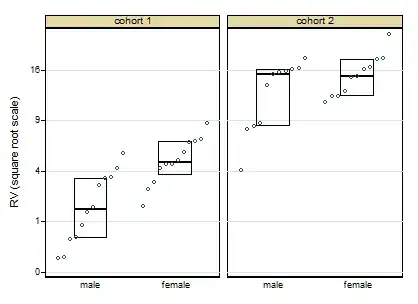

To clarify, the main issue is lack of homogeneity of variance for the Two-Way ANOVA. I had previously written that the transformations did not work to normalize the data -- I was mistaken (my apologies!). The data were very positively skewed (kurtosis was not really an issue), and the square root transformation successfully normalized the distribution. However, I still have heterogeneous variances. I'm wondering if there's anything I can do to correct this, or if it's acceptable to go ahead with the ANOVA, and explicitly mention the heterogeneous variances in the description of my methods?

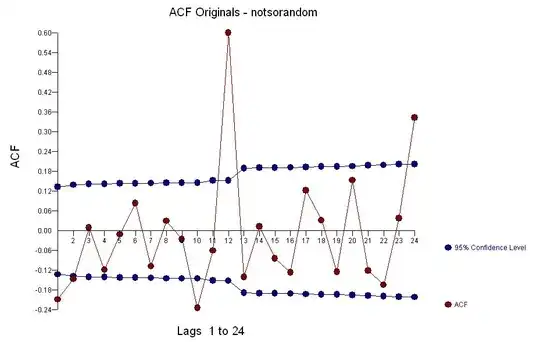

EDIT 2 (images added):

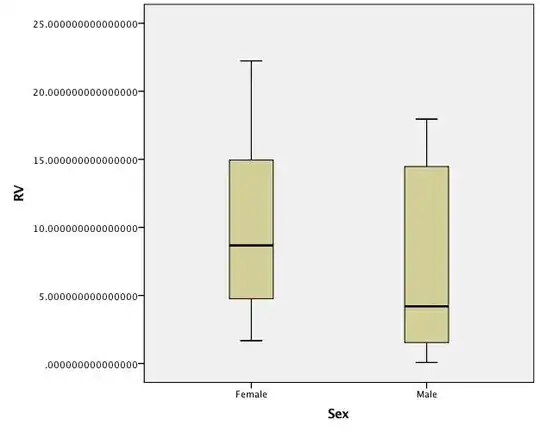

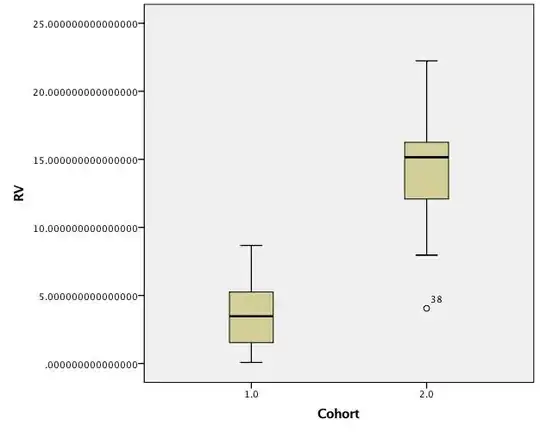

Boxplots of untransformed data:

EDIT 3 (raw data added):

**Cohort 1 males (n=12)**:

0.476

0.84

1.419

0.4295

0.083

2.9595

4.20125

1.6605

3.493

5.57225

0.076

3.4585

**Cohort 1 females (n=12)**:

4.548333

4.591

3.138

2.699

6.622

6.8795

5.5925

1.6715

4.92775

6.68525

4.25775

8.677

**Cohort 2 males (n=11)**:

7.9645

16.252

15.30175

8.66325

15.6935

16.214

4.056

8.316

17.95725

13.644

15.76475

**Cohort 2 females (n=11)**:

11.2865

22.22775

18.00466667

12.80925

16.15425

14.88133333

12.0895

16.5335

17.68925

15.00425

12.149