I have made a recording of two different sounds and I want to use an SVM in order to be able to distinct between the two. The process I have followed is:

Divided each sound in multiple 20ms frames.

For every frame I calculate the MFCCs, deltas and deltas-deltas (in total 48 coefficients that act as features)

- I create a label for each sound (e.g 0 and 1)

- Using the cross_validation.train_test_split function from Sklearn, I create my feature_test, feature_train, label_train and label_test datasets.

- I train my SVM using the RBF kernel and the train datasets mentioned in the above step.

- I make a prediction based on the test datasets of step 4

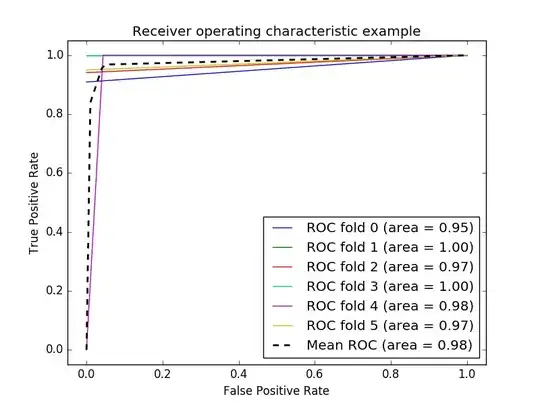

My question is the following. How do I know that what I get from the score of the SVM is correct? How can I know that my classifier does not overfit? I have used cross-validation as well and I have calculated the ROC curve but I don't know if I should trust the results. I am attaching the image and the results from some metrics.

Metrics

Accuracy of SVM = 1

F1-score of SVM = 1