The simple and elegant way to estimate $e$ by Monte Carlo is described in this paper. The paper is actually about teaching $e$. Hence, the approach seems perfectly fitting for your goal. The idea's based on an exercise from a popular Ukrainian textbook on probability theory by Gnedenko.

See ex.22 on p.183

It happens so that $E[\xi]=e$, where $\xi$ is a random variable that is defined as follows. It's the minimum number of $n$ such that $\sum_{i=1}^n r_i>1$ and $r_i$ are random numbers from uniform distribution on $[0,1]$. Beautiful, isn't it?!

Since it's an exercise, I'm not sure if it's cool for me to post the solution (proof) here :) If you'd like to prove it yourself, here's a tip: the chapter is called "Moments", which should point you in right direction.

If you want to implement it yourself, then don't read further!

This is a simple algorithm for Monte Carlo simulation. Draw a uniform random, then another one and so on until the sum exceeds 1. The number of randoms drawn is your first trial. Let's say you got:

0.0180

0.4596

0.7920

Then your first trial rendered 3. Keep doing these trials, and you'll notice that in average you get $e$.

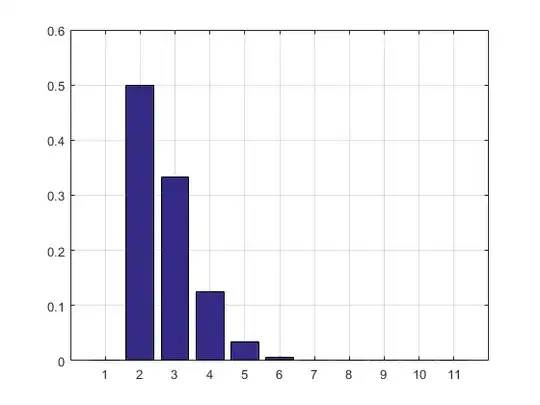

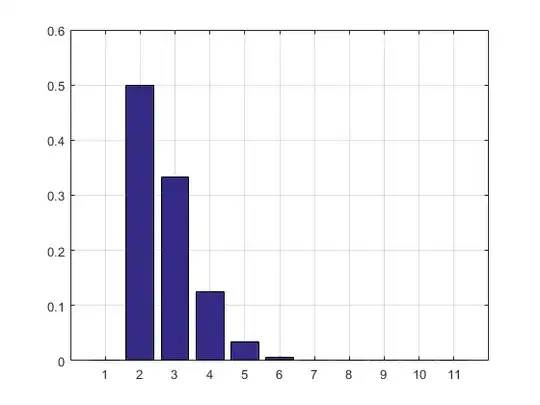

MATLAB code, simulation result and the histogram follow.

N = 10000000;

n = N;

s = 0;

i = 0;

maxl = 0;

f = 0;

while n > 0

s = s + rand;

i = i + 1;

if s > 1

if i > maxl

f(i) = 1;

maxl = i;

else

f(i) = f(i) + 1;

end

i = 0;

s = 0;

n = n - 1;

end

end

disp ((1:maxl)*f'/sum(f))

bar(f/sum(f))

grid on

f/sum(f)

The result and the histogram:

2.7183

ans =

Columns 1 through 8

0 0.5000 0.3332 0.1250 0.0334 0.0070 0.0012 0.0002

Columns 9 through 11

0.0000 0.0000 0.0000

UPDATE:

I updated my code to get rid of the array of trial results so that it doesn't take RAM. I also printed the PMF estimation.

Update 2:

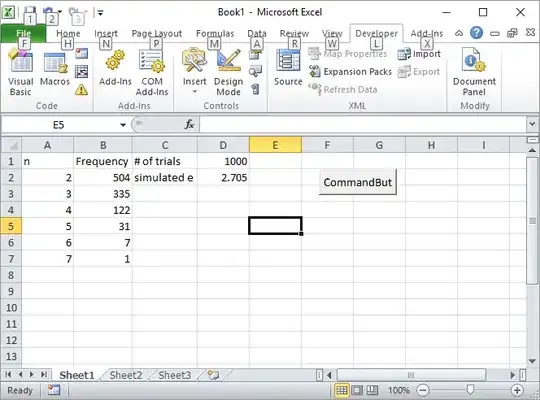

Here's my Excel solution. Put a button in Excel and link it to the following VBA macro:

Private Sub CommandButton1_Click()

n = Cells(1, 4).Value

Range("A:B").Value = ""

n = n

s = 0

i = 0

maxl = 0

Cells(1, 2).Value = "Frequency"

Cells(1, 1).Value = "n"

Cells(1, 3).Value = "# of trials"

Cells(2, 3).Value = "simulated e"

While n > 0

s = s + Rnd()

i = i + 1

If s > 1 Then

If i > maxl Then

Cells(i, 1).Value = i

Cells(i, 2).Value = 1

maxl = i

Else

Cells(i, 1).Value = i

Cells(i, 2).Value = Cells(i, 2).Value + 1

End If

i = 0

s = 0

n = n - 1

End If

Wend

s = 0

For i = 2 To maxl

s = s + Cells(i, 1) * Cells(i, 2)

Next

Cells(2, 4).Value = s / Cells(1, 4).Value

Rem bar (f / Sum(f))

Rem grid on

Rem f/sum(f)

End Sub

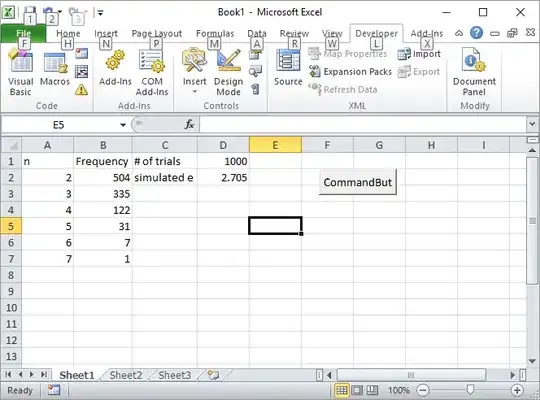

Enter the number of trials, such as 1000, in the cell D1, and click the button.

Here how the screen should look like after the first run:

UPDATE 3:

Silverfish inspired me to another way, not as elegant as the first one but still cool. It calculated the volumes of n-simplexes using Sobol sequences.

s = 2;

for i=2:10

p=sobolset(i);

N = 10000;

X=net(p,N)';

s = s + (sum(sum(X)<1)/N);

end

disp(s)

2.712800000000001

Coincidentally he wrote the first book on Monte Carlo method I read back in high school. It's the best introduction to the method in my opinion.

UPDATE 4:

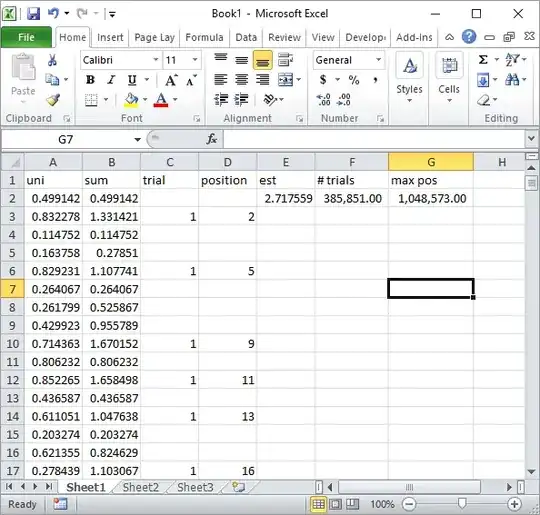

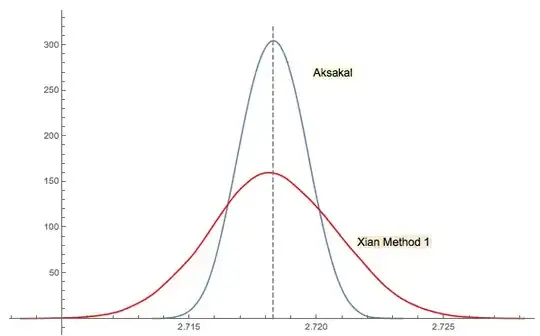

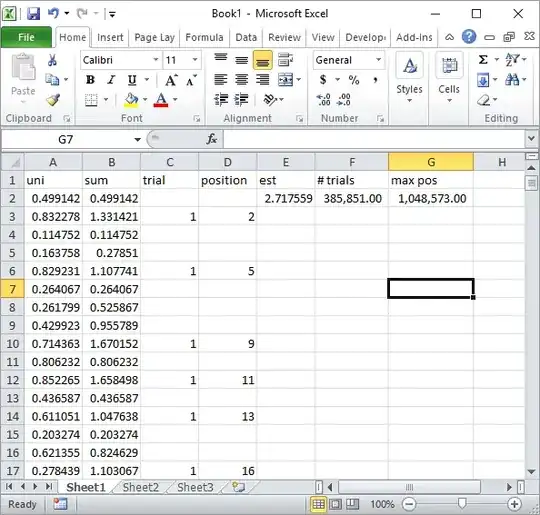

Silverfish in comments suggested a simple Excel formula implementation. This is the kind of result you get with his approach after about total 1 million random numbers and 185K trials:

Obviously, this is much slower than Excel VBA implementation. Especially, if you modify my VBA code to not update the cell values inside the loop, and only do it once all stats are collected.

UPDATE 5

Xi'an's solution #3 is closely related (or even the same in some sense as per jwg's comment in the thread). It's hard to say who came up with the idea first Forsythe or Gnedenko. Gnedenko's original 1950 edition in Russian doesn't have Problems sections in Chapters. So, I couldn't find this problem at a first glance where it is in later editions. Maybe it was added later or buried in the text.

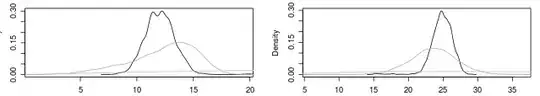

As I commented in Xi'an's answer, Forsythe's approach is linked to another interesting area: the distribution of distances between peaks (extrema) in random (IID) sequences. The mean distance happens to be 3. The down sequence in Forsythe's approach ends with a bottom, so if you continue sampling you'll get another bottom at some point, then another etc. You could track the distance between them and build the distribution.