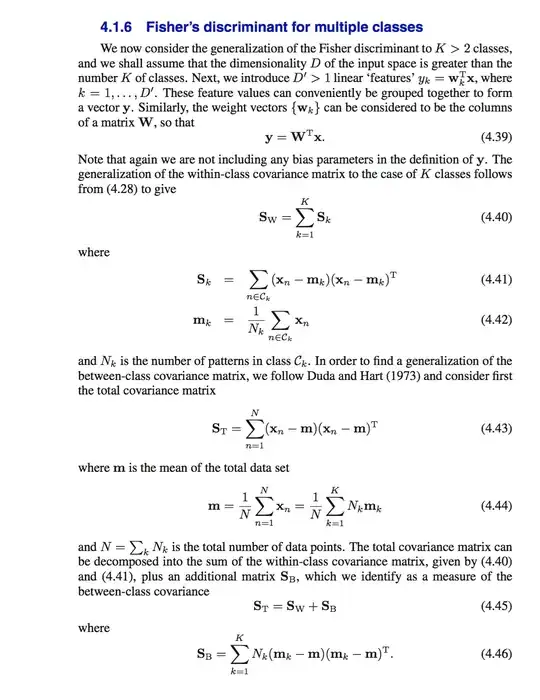

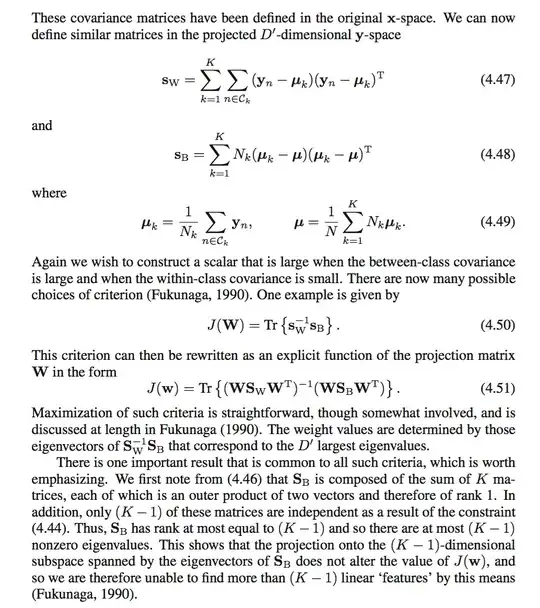

According to Bishop's Machine Learning and Pattern Recognition, the cost function for linear discriminant analysis (LDA) with $K>2$ classes is $$J(\mathbf w) = \mathrm{Tr}\left\{\left(\mathbf W \mathbf S_W \mathbf W^T\right)^{-1} \left(\mathbf W \mathbf S_B \mathbf W^T\right)\right\}.$$ It is stated in the text:

$\mathbf{S}_\mathrm B = \sum_{k=1}^K N_k(\mathbf m_k - \mathbf m)(\mathbf m_k - \mathbf m)^T$ is composed of the sum of $K$ matrices, each of which is an outer product of two vectors and therefore of rank 1. In addition, only $(K-1)$ of these matrices are independent and as a result of the constraint $$\mathbf m = \frac{1}{N}\sum_{n=1}^N \mathbf x_n = \frac{1}{N} \sum_{k=1}^K N_k \mathbf m_k.$$ Thus, $\mathbf S_\mathrm B$ has rank at most equal to $(K-1)$ and so there are at most $(K-1)$ nonzero eigenvalues. This shows that the projection onto the $(K-1)$-dimensional subspace spanned by the eigenvalues of $\mathbf S_\mathrm B$ does not alter the value of $J(\mathbf w)$, and so we are therefore unable to find more than $(K-1)$ linear 'features' by this means (Fukunaga, 1990).

Question

- Why does the constraint cause the matrix to have only $(K-1)$ matrices?

- I can't see how the projection does not alter the value of $J(\mathbf w)$ and therefore we are unable to find $(K-1)$ linear features.

- What does it imply when we can't find $(K-1)$ linear features? I can't see why the author is telling me this.

(Can you please make the math explicit? I find the textbook rather hard to understand because many math details are left out).