Maybe what makes you think of ReLU is the hinge loss $E = max(1-ty,0)$ of SVMs, but the loss does not restrict the output activation function to be non-negative (ReLU).

For the network loss to be in the same form as SVMs, we can just remove any non-linear activation functions off the output layer, and use the hinge loss for backpropagation.

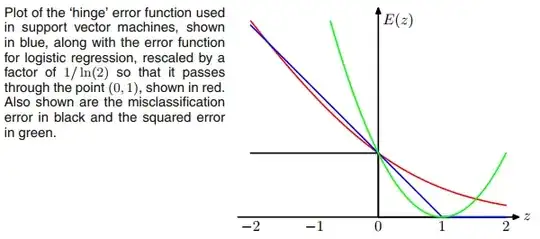

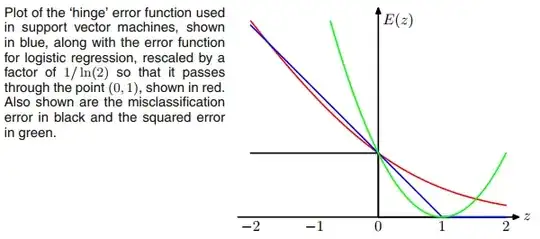

Moreover, if we replace the hinge loss with $E = ln (1 + exp(−ty))$ (which looks like a smooth version of hinge loss), then we'll be doing logistic regression as typical sigmoid + cross-entropy networks. It can be thought of as moving the sigmoid function from the output layer to the loss.

So in terms of loss functions, SVMs and logistic regression are pretty close, though SVMs use a very different algorithm for training and inference based on support vectors.

There's a nice discussion on the relation of SVM and logistic regression in section 7.1.2 of the book Pattern Recognition and Machine Learning.