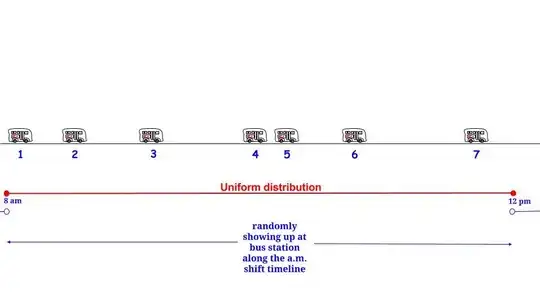

I'm trying to learn about neural networks, using data series prediction as a case study. I've set up a small application to try different configurations of data smoothing and input neuron count. However, no matter what I do, I observe a delay in the neural net's output. As the picture above illustrates quite well, the outputs from the network (red) are predicted very well and match the actual values (blue) nicely, only a bit late...
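To put a number on "a bit late", the sliding-window setup (input neuron count = window length, next value as the target) and a lag check could be sketched as below. This is a minimal sketch under assumptions: `make_windows` and `estimate_lag` are hypothetical helpers, not part of the original application, and the lag is taken as the shift that minimizes the mean squared difference between the two series. As a sanity check, a naive forecast that simply repeats the previous value trails the actual series by exactly one step.

```python
from typing import List, Tuple


def make_windows(series: List[float], n_inputs: int) -> List[Tuple[List[float], float]]:
    """Pair each run of n_inputs consecutive values with the value that follows it."""
    return [
        (series[i:i + n_inputs], series[i + n_inputs])
        for i in range(len(series) - n_inputs)
    ]


def estimate_lag(actual: List[float], predicted: List[float], max_lag: int = 5) -> int:
    """Return the shift k (0..max_lag) for which predicted[t + k] best matches actual[t].

    A result k > 0 means the predictions trail the actual values by k steps.
    """
    best_k, best_err = 0, float("inf")
    for k in range(max_lag + 1):
        pairs = list(zip(actual[: len(actual) - k], predicted[k:]))
        if not pairs:
            break
        err = sum((a - p) ** 2 for a, p in pairs) / len(pairs)
        if err < best_err:
            best_k, best_err = k, err
    return best_k


# Sanity check: a "repeat the previous value" forecast lags by one step.
actual = [0.0, 1.0, 0.5, -0.3, 0.8, 0.2, -0.6, 0.4]
persistence = [actual[0]] + actual[:-1]
print(estimate_lag(actual, persistence))  # 1
```

Running the same check on the network's outputs would show whether the observed delay is a consistent one-step shift.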

I've even tried using the training data as test data, to make sure there weren't any problems with my test data preparation.

Does anyone know what the problem might be?

I'm using 1 output neuron and training with backpropagation. The bipolar sigmoid is used as the activation function.
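For reference, a network matching the setup described above (one output neuron, backpropagation, bipolar sigmoid activation) might look roughly like the following. It is a minimal sketch that assumes one hidden layer, online (per-sample) updates and a squared-error loss, none of which the post specifies; `TinyNet`, the layer sizes, the learning rate and the synthetic sine data are all hypothetical. The bipolar sigmoid f(x) = 2 / (1 + e^-x) - 1 equals tanh(x/2), and its derivative can be written in terms of the output y as 0.5 * (1 - y^2).

```python
import math
import random
from typing import List


def bipolar_sigmoid(x: float) -> float:
    # 2 / (1 + e^-x) - 1, computed via the identity tanh(x / 2) to avoid overflow
    return math.tanh(x / 2.0)


def bipolar_sigmoid_deriv(y: float) -> float:
    # Derivative expressed in terms of the neuron's output y = f(x)
    return 0.5 * (1.0 - y * y)


class TinyNet:
    """Hypothetical net: one hidden layer, one output neuron, bipolar sigmoid everywhere."""

    def __init__(self, n_inputs: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # The last weight in each row acts as the bias (its input is fixed at 1.0)
        self.w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)]

    def forward(self, x: List[float]) -> float:
        xb = x + [1.0]
        self.h = [bipolar_sigmoid(sum(w * v for w, v in zip(row, xb)))
                  for row in self.w_hidden]
        hb = self.h + [1.0]
        self.y = bipolar_sigmoid(sum(w * v for w, v in zip(self.w_out, hb)))
        return self.y

    def train_step(self, x: List[float], target: float, lr: float = 0.1) -> float:
        y = self.forward(x)
        # Output delta for the squared error E = 0.5 * (target - y)^2
        delta_out = (target - y) * bipolar_sigmoid_deriv(y)
        # Hidden deltas use the output weights *before* they are updated
        delta_hidden = [delta_out * self.w_out[j] * bipolar_sigmoid_deriv(h)
                        for j, h in enumerate(self.h)]
        hb = self.h + [1.0]
        for j in range(len(self.w_out)):
            self.w_out[j] += lr * delta_out * hb[j]
        xb = x + [1.0]
        for j, row in enumerate(self.w_hidden):
            for i in range(len(row)):
                row[i] += lr * delta_hidden[j] * xb[i]
        return 0.5 * (target - y) ** 2


def mse(net: TinyNet, data) -> float:
    return sum((t - net.forward(x)) ** 2 for x, t in data) / len(data)


# Synthetic example: predict the next sample of a scaled sine wave from 4 previous samples
series = [0.8 * math.sin(0.3 * t) for t in range(60)]
n_in = 4
data = [(series[i:i + n_in], series[i + n_in]) for i in range(len(series) - n_in)]
net = TinyNet(n_inputs=n_in, n_hidden=6)
before = mse(net, data)
for epoch in range(300):
    for x, t in data:
        net.train_step(x, t)
after = mse(net, data)
print(f"MSE before: {before:.4f}, after: {after:.4f}")
```

Targets are kept inside (-1, 1) here because a bipolar sigmoid output neuron cannot reach values outside that range.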