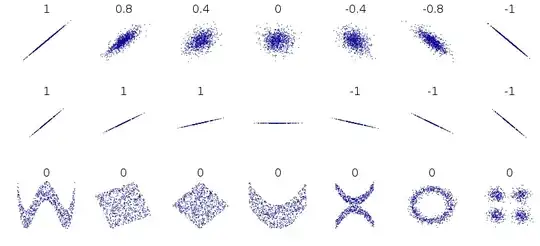

Explanations of why uncorrelated does not imply independent offer several examples involving a bunch of random variables, but they all seem so abstract: 1, 2, 3, 4.

This answer seems to make sense. My interpretation: a random variable and its square may be uncorrelated (since, apparently, a lack of correlation only rules out a linear relationship), yet they are clearly dependent.
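A minimal sketch of the "random variable and its square" case, assuming (purely for illustration) that $X$ is uniform on $\{-1, 0, 1\}$ and $Y = X^2$. Because the distribution is symmetric about zero, $\operatorname{Cov}(X, Y) = E[X^3] - E[X]\,E[X^2] = 0$, yet knowing $X$ determines $Y$ exactly, so the joint probabilities do not factor.

```python
from fractions import Fraction

# Illustrative distribution (an assumption): X is -1, 0 or 1 with probability 1/3 each; Y = X**2.
pmf = {x: Fraction(1, 3) for x in (-1, 0, 1)}

def expect(f):
    """Exact expectation of f(X) under the pmf above."""
    return sum(p * f(x) for x, p in pmf.items())

mean_x = expect(lambda x: x)       # E[X]
mean_y = expect(lambda x: x**2)    # E[Y] = E[X^2]
cov_xy = expect(lambda x: x * x**2) - mean_x * mean_y  # E[XY] - E[X]E[Y] = E[X^3] - E[X]E[X^2]

# Independence would require P(X=1, Y=0) == P(X=1) * P(Y=0).
p_joint = sum(p for x, p in pmf.items() if x == 1 and x**2 == 0)
p_x1 = sum(p for x, p in pmf.items() if x == 1)
p_y0 = sum(p for x, p in pmf.items() if x**2 == 0)

print("Cov(X, Y) =", cov_xy)                                          # Cov(X, Y) = 0
print("P(X=1, Y=0) =", p_joint, "but P(X=1)P(Y=0) =", p_x1 * p_y0)   # 0 but 1/9
```

Exact fractions are used instead of simulation so that the zero covariance is exact rather than approximately zero.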

I guess an example would be that (standardised?) height and height$^2$ might be uncorrelated but dependent, but I don't see why anyone would want to compare height and height$^2$.
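A rough simulation of the height guess, assuming (hypothetically) that adult heights are normally distributed with mean 170 cm and standard deviation 10 cm. Raw heights are all far from zero, so height$^2$ grows almost linearly with height over the observed range and the correlation is close to 1. After standardising, $z$ is centred at zero and symmetric, so $\operatorname{corr}(z, z^2)$ is close to 0 even though $z^2$ is a function of $z$. The zero correlation relies on that symmetry; for a skewed distribution, $\operatorname{corr}(z, z^2)$ would not vanish.

```python
import random
import statistics

random.seed(0)

# Hypothetical model (an assumption): heights ~ Normal(mean=170 cm, sd=10 cm).
heights = [random.gauss(170, 10) for _ in range(100_000)]

def corr(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

# Raw heights: strongly correlated with their squares.
raw_corr = corr(heights, [h**2 for h in heights])

# Standardised heights: centred at 0, so the symmetric parabola z**2 has no linear trend.
mu, sigma = statistics.fmean(heights), statistics.pstdev(heights)
z = [(h - mu) / sigma for h in heights]
std_corr = corr(z, [v**2 for v in z])

print(f"corr(height, height^2) = {raw_corr:.4f}")  # close to 1
print(f"corr(z, z^2)           = {std_corr:.4f}")  # close to 0
```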

To build intuition for a beginner in elementary probability theory (or for similar purposes), what are some real-life examples of uncorrelated but dependent random variables?