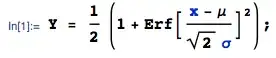

Consider the function $Y = 1 - 2\,\Phi\left(\frac{c_j - \mu}{\sigma}\right) + 2\,\Phi\left(\frac{c_j - \mu}{\sigma}\right)^2$

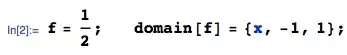

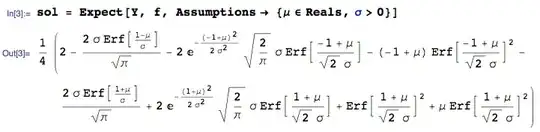

where $\Phi$ is the cumulative distribution function of the standard normal distribution, $\mu$ and $\sigma > 0$ are parameters, and $c_j$ is a random variable uniformly distributed on $[-1, 1]$. I would like to express the expected value of $Y$ (taken over $c_j$) in terms of $\mu$ and $\sigma$.
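It may help to note that, writing $p = \Phi\left((c_j - \mu)/\sigma\right)$, the function is a shifted square of $p - \tfrac12$. This is plain algebra on the definition of $Y$:

```latex
Y = 1 - 2p + 2p^2 = p^2 + (1 - p)^2 = \frac{1}{2} + 2\left(p - \frac{1}{2}\right)^2,
\qquad p = \Phi\!\left(\frac{c_j - \mu}{\sigma}\right)
```

Since $\Phi(z) - \tfrac12$ is odd and increasing in $z$, $Y$ lies in $[\tfrac12, 1)$, equals $\tfrac12$ exactly when $c_j = \mu$, and depends on $c_j$, $\mu$ and $\sigma$ only through $|c_j - \mu|/\sigma$, increasing in that ratio.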

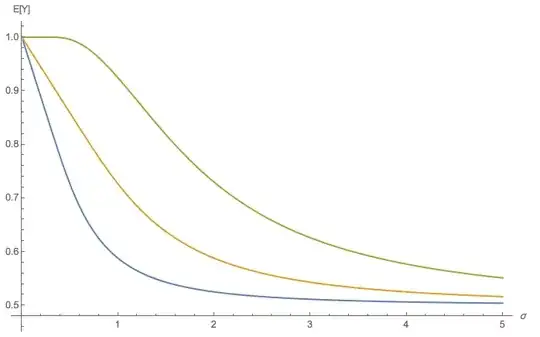

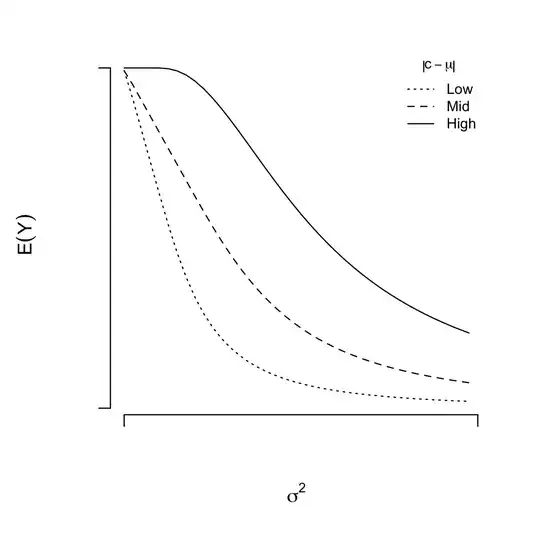

It seems clear that $E[Y]$ increases with the distance between $E[c_j] = 0$ and $\mu$ (i.e. with $|\mu|$) and decreases as $\sigma$ increases. However, simulation also shows that the effect of $\sigma$ on $Y$ depends on $c_j - \mu$, which suggests an interaction between $\sigma$ and $\mu$.
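One way to make the interaction concrete without a closed form is to compute $E[Y] = \tfrac12\int_{-1}^{1} Y\,dc$ by numerical quadrature and look at how the slope $\partial E[Y]/\partial\sigma$ changes with $\mu$: if that slope were the same for every $\mu$, there would be no interaction. This is a sketch, not the method used in the question; it assumes composite Simpson's rule on a fixed grid plus a central finite difference, and the function names (`expected_y`, `d_sigma`) are hypothetical.

```python
import math


def Phi(x: float) -> float:
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def y(c: float, mu: float, sigma: float) -> float:
    """The question's Y for a single value of c_j."""
    p = Phi((c - mu) / sigma)
    return 1.0 - 2.0 * p + 2.0 * p * p


def expected_y(mu: float, sigma: float, n: int = 2000) -> float:
    """E[Y] for c_j ~ Uniform(-1, 1), by composite Simpson's rule (n must be even)."""
    a, b = -1.0, 1.0
    h = (b - a) / n
    total = y(a, mu, sigma) + y(b, mu, sigma)
    for i in range(1, n):
        weight = 4.0 if i % 2 else 2.0  # Simpson weights: 4 on odd nodes, 2 on even
        total += weight * y(a + i * h, mu, sigma)
    integral = total * h / 3.0
    return integral / (b - a)  # multiply by the uniform density 1/2


def d_sigma(mu: float, sigma: float, eps: float = 1e-4) -> float:
    """Central finite-difference estimate of dE[Y]/dsigma at (mu, sigma)."""
    return (expected_y(mu, sigma + eps) - expected_y(mu, sigma - eps)) / (2.0 * eps)


# Fixed sigma, several mu: if the sigma-slope varies with mu, mu and sigma interact.
for mu in (0.0, 0.5, 1.0, 2.0):
    print(f"mu={mu:3.1f}  E[Y]={expected_y(mu, 0.5):.4f}  dE[Y]/dsigma={d_sigma(mu, 0.5):+.4f}")
```

For a fixed $\sigma$ the printed slopes are all negative but differ across $\mu$; that non-constant slope is the interaction.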

My question: is there a way to describe this relationship analytically? I'm afraid I'm not very strong at this, and although the simulated results might be sufficient for my purposes, I would like to show the relationship more concisely, and I suspect I am missing something obvious. Any suggestions appreciated!
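Assuming $c_j \sim \mathrm{Uniform}(-1, 1)$ and $\sigma > 0$, $E[Y]$ does have a closed form. Substituting $z = (c - \mu)/\sigma$ and using two standard antiderivatives (each can be checked by differentiating, with $\varphi$ the standard normal density and $\varphi'(z) = -z\,\varphi(z)$) gives the derivation below. The labels $g$, $G$, $a$ and $b$ are introduced here for convenience.

```latex
\begin{aligned}
E[Y] &= \frac{1}{2}\int_{-1}^{1} g\!\left(\frac{c-\mu}{\sigma}\right) dc
      = \frac{\sigma}{2}\int_{a}^{b} g(z)\,dz,
\qquad g(z) = 1 - 2\Phi(z) + 2\Phi(z)^2,\\
a &= \frac{-1-\mu}{\sigma}, \qquad b = \frac{1-\mu}{\sigma},\\[4pt]
\int \Phi(z)\,dz &= z\,\Phi(z) + \varphi(z),\\
\int \Phi(z)^2\,dz &= z\,\Phi(z)^2 + 2\,\varphi(z)\,\Phi(z) - \frac{1}{\sqrt{\pi}}\,\Phi\!\left(\sqrt{2}\,z\right),\\[4pt]
E[Y] &= \frac{\sigma}{2}\bigl[G(b) - G(a)\bigr],\\
G(z) &= z - 2z\,\Phi(z) + 2z\,\Phi(z)^2 - 2\varphi(z) + 4\varphi(z)\,\Phi(z) - \frac{2}{\sqrt{\pi}}\,\Phi\!\left(\sqrt{2}\,z\right),\\[4pt]
\frac{\partial E[Y]}{\partial \mu} &= \frac{1}{2}\bigl[g(a) - g(b)\bigr],\\
\frac{\partial E[Y]}{\partial \sigma} &= \frac{1}{2}\bigl[G(b) - G(a) - b\,g(b) + a\,g(a)\bigr].
\end{aligned}
```

Because $\mu$ and $\sigma$ enter only through the limits $a$ and $b$ (and the prefactor $\sigma$), $\partial E[Y]/\partial\sigma$ depends on $\mu$, which is the interaction seen in the simulation. As a sanity check, when $\sigma \to \infty$ both limits shrink to $0$ and $E[Y] \to \tfrac12$.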

The plot below shows the results of the simulation:

Note: in the simulation I draw $c_j$ from a uniform distribution on $[-1, 1]$, vary $\sigma$ and $\mu$, plug the values into the formula above, and average $Y$ over the draws.
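The simulation can be cross-checked against an exact expression. This sketch assumes the closed form $E[Y] = \tfrac{\sigma}{2}[G(b) - G(a)]$ with $a = (-1-\mu)/\sigma$ and $b = (1-\mu)/\sigma$, where $G$ is an antiderivative of $1 - 2\Phi(z) + 2\Phi(z)^2$ obtained from the standard antiderivatives $\int\Phi = z\Phi + \varphi$ and $\int\Phi^2 = z\Phi^2 + 2\varphi\Phi - \Phi(\sqrt2 z)/\sqrt\pi$. The Monte Carlo function mirrors the procedure in the note; the function names and the chosen $(\mu, \sigma)$ pairs are hypothetical.

```python
import math
import random

SQRT2 = math.sqrt(2.0)


def Phi(x: float) -> float:
    """Standard normal CDF, written with math.erf."""
    return 0.5 * (1.0 + math.erf(x / SQRT2))


def phi(x: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def G(z: float) -> float:
    """Antiderivative of g(z) = 1 - 2*Phi(z) + 2*Phi(z)**2."""
    p, d = Phi(z), phi(z)
    return (z - 2.0 * z * p + 2.0 * z * p * p
            - 2.0 * d + 4.0 * d * p
            - 2.0 / math.sqrt(math.pi) * Phi(SQRT2 * z))


def expected_y_closed(mu: float, sigma: float) -> float:
    """Closed-form E[Y] for c ~ Uniform(-1, 1): (sigma / 2) * (G(b) - G(a))."""
    a = (-1.0 - mu) / sigma
    b = (1.0 - mu) / sigma
    return 0.5 * sigma * (G(b) - G(a))


def expected_y_mc(mu: float, sigma: float, n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate: draw c uniformly, plug into Y, and average."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        c = rng.uniform(-1.0, 1.0)
        p = Phi((c - mu) / sigma)
        total += 1.0 - 2.0 * p + 2.0 * p * p
    return total / n


for mu, sigma in [(0.0, 0.5), (0.5, 1.0), (1.5, 0.3)]:
    exact = expected_y_closed(mu, sigma)
    approx = expected_y_mc(mu, sigma)
    print(f"mu={mu:4.2f} sigma={sigma:4.2f}  closed={exact:.5f}  simulated={approx:.5f}")
```

The two columns should agree to within Monte Carlo error (roughly $10^{-3}$ at this sample size, since $Y \in [\tfrac12, 1)$), and the closed form can then replace the simulation when describing how $E[Y]$ responds to $\mu$ and $\sigma$.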