This question can be answered as stated only by assuming the two random variables $X_1$ and $X_2$ governed by these distributions are independent. This makes their difference $X = X_2-X_1$ Normal with mean $\mu = \mu_2-\mu_1$ and variance $\sigma^2=\sigma_1^2 + \sigma_2^2$. (The following solution can easily be generalized to any bivariate Normal distribution of $(X_1, X_2)$.) Thus the variable

$$Z = \frac{X-\mu}{\sigma} = \frac{X_2 - X_1 - (\mu_2 - \mu_1)}{\sqrt{\sigma_1^2 + \sigma_2^2}}$$

has a standard Normal distribution (that is, with zero mean and unit variance) and

$$X = \sigma \left(Z + \frac{\mu}{\sigma}\right).$$

The expression

$$|X_2 - X_1| = |X| = \sqrt{X^2} = \sigma\sqrt{\left(Z + \frac{\mu}{\sigma}\right)^2}$$

exhibits the absolute difference as $\sigma$ times the square root of a variable with a noncentral chi-squared distribution having one degree of freedom and noncentrality parameter $\lambda=(\mu/\sigma)^2$. A noncentral chi-squared distribution with these parameters has probability element

$$f(y)dy = \frac{\sqrt{y}}{\sqrt{2 \pi } } e^{\frac{1}{2} (-\lambda -y)} \cosh \left(\sqrt{\lambda y} \right) \frac{dy}{y},\ y \gt 0.$$
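As a quick sanity check on this formula, the sketch below compares it with R's built-in `dchisq`, which accepts a noncentrality parameter through its `ncp` argument. The function name `f.ncx2`, the value of `lambda`, and the grid `y` are illustrative choices, not part of the derivation.

```r
# Density of a noncentral chi-squared variable with one degree of freedom,
# transcribed term by term from the probability element above.
f.ncx2 <- function(y, lambda) {
  sqrt(y) / sqrt(2 * pi) * exp(-(lambda + y) / 2) * cosh(sqrt(lambda * y)) / y
}

lambda <- 2.5                 # arbitrary illustrative value
y <- seq(0.1, 20, by = 0.1)   # arbitrary grid of positive values

# Largest absolute discrepancy from R's own implementation.
max.diff <- max(abs(f.ncx2(y, lambda) - dchisq(y, df = 1, ncp = lambda)))
print(max.diff)               # expected to be at the level of rounding error
```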

Writing $y=x^2$ for $x \gt 0$ establishes a one-to-one correspondence between $y$ and its square root, resulting in

$$f(y)dy = f(x^2) d(x^2) = \frac{\sqrt{x^2}}{\sqrt{2 \pi } } e^{\frac{1}{2} (-\lambda -x^2)} \cosh \left(\sqrt{\lambda x^2} \right) \frac{dx^2}{x^2}.$$

Simplifying this and then rescaling by $\sigma$ gives the desired density,

$$f_{|X|}(x) = \frac{1}{\sigma}\sqrt{\frac{2}{\pi}} \cosh\left(\frac{x\mu}{\sigma^2}\right) \exp\left(-\frac{x^2 + \mu^2}{2 \sigma^2}\right).$$
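The algebra behind that last step can be sketched as follows. For $x \gt 0$ we have $\sqrt{x^2}=x$ and $d(x^2)=2x\,dx$, so the density of $|Z+\mu/\sigma|$ (called $g$ here, a name introduced only for this sketch) is

$$g(x)\,dx = \frac{x}{\sqrt{2\pi}}\, e^{-\frac{1}{2}(\lambda + x^2)} \cosh\left(\sqrt{\lambda}\,x\right) \frac{2x\,dx}{x^2} = \sqrt{\frac{2}{\pi}}\, e^{-\frac{1}{2}(\lambda + x^2)} \cosh\left(\sqrt{\lambda}\,x\right) dx.$$

Because $|X| = \sigma\,|Z+\mu/\sigma|$, the change of scale gives $f_{|X|}(x) = g(x/\sigma)/\sigma$. Substituting $\lambda = (\mu/\sigma)^2$ turns $\lambda + (x/\sigma)^2$ into $(x^2+\mu^2)/\sigma^2$ and $\sqrt{\lambda}\,x/\sigma$ into $x|\mu|/\sigma^2$; the absolute value can be dropped because $\cosh$ is an even function.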

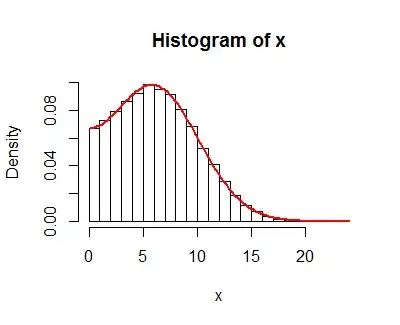

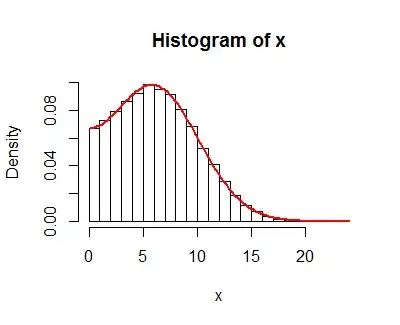

This result is supported by simulations, such as this histogram of 100,000 independent draws of $|X|=|X_2-X_1|$ (called "x" in the code) with parameters $\mu_1=-1, \mu_2=5, \sigma_1=4, \sigma_2=1$. On it is plotted the graph of $f_{|X|}$, which neatly coincides with the histogram values.

The R code for this simulation follows.

    #
    # Specify parameters
    #
    mu <- c(-1, 5)
    sigma <- c(4, 1)
    #
    # Simulate data
    #
    n.sim <- 1e5
    set.seed(17)
    x.sim <- matrix(rnorm(n.sim*2, mu, sigma), nrow=2)
    x <- abs(x.sim[2, ] - x.sim[1, ])
    #
    # Display the results
    #
    hist(x, freq=FALSE)
    f <- function(x, mu, sigma) {
      sqrt(2 / pi) / sigma * cosh(x * mu / sigma^2) * exp(-(x^2 + mu^2)/(2*sigma^2))
    }
    curve(f(x, abs(diff(mu)), sqrt(sum(sigma^2))), lwd=2, col="Red", add=TRUE)
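Two further numerical checks, offered as a sketch rather than as part of the original simulation: the density should integrate to one, and it should agree with the sum of the Normal densities at $x$ and $-x$ (the "folded Normal" form). The block repeats the definition of `f` so that it runs on its own. The names `mu.d`, `sigma.d`, and `x.grid`, and the finite upper limit of 60, are assumptions made here. The limit is used in place of `Inf` because `cosh` overflows for very large arguments, while the probability beyond 60 is negligible for these parameters.

```r
# Density of |X| for X ~ Normal(mu, sigma^2), as derived above.
f <- function(x, mu, sigma) {
  sqrt(2 / pi) / sigma * cosh(x * mu / sigma^2) * exp(-(x^2 + mu^2) / (2 * sigma^2))
}

mu.d    <- 5 - (-1)          # mean of X2 - X1 for the example parameters
sigma.d <- sqrt(4^2 + 1^2)   # SD of X2 - X1 under independence

# 1. Total probability: should be very close to 1.
total <- integrate(f, 0, 60, mu = mu.d, sigma = sigma.d)$value
print(total)

# 2. Agreement with the folded form dnorm(x) + dnorm(-x).
x.grid <- seq(0, 25, by = 0.25)
folded <- dnorm(x.grid, mu.d, sigma.d) + dnorm(-x.grid, mu.d, sigma.d)
fold.diff <- max(abs(f(x.grid, mu.d, sigma.d) - folded))
print(fold.diff)             # expected to be at the level of rounding error
```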