One way to summarize the comparison of two survival curves is to compute the hazard ratio (HR). There are (at least) two methods to compute this value.

- Logrank method. As part of the Kaplan-Meier calculations, compute the number of observed events (usually deaths) in each group ($O_a$ and $O_b$), and the number of events expected under the null hypothesis of no difference in survival ($E_a$ and $E_b$). The hazard ratio is then: $$ HR= \frac{O_a/E_a}{O_b/E_b} $$

- Mantel-Haenszel method. First compute $V$, the sum of the hypergeometric variances at each time point. Then compute the hazard ratio as: $$ HR= \exp\left(\frac{O_a-E_a}{V}\right) $$

I got both of these equations from chapter 3 of Machin, Cheung and Parmar, Survival Analysis. That book states that the two methods usually give very similar results, and indeed that is the case with the example in the book.
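To make the two formulas concrete, here is a minimal Python sketch that computes both estimates from raw data. It is my own illustration, not code from the book: the function name `hazard_ratios`, its arguments, and the use of NumPy are assumptions. I also assume the usual logrank bookkeeping, where the expected count at each event time is the number of events times the fraction of at-risk subjects in that group, and the hypergeometric variance includes the tie correction factor (n - d)/(n - 1).

```python
import numpy as np

def hazard_ratios(time, event, group):
    """Return (hr_logrank, hr_mantel_haenszel) for group a versus group b.

    time  : follow-up time for each subject
    event : 1/True if the event was observed, 0/False if censored
    group : 1/True for group a, 0/False for group b
    (Hypothetical helper written for illustration only.)
    """
    time = np.asarray(time, dtype=float)
    event = np.asarray(event, dtype=bool)
    in_a = np.asarray(group, dtype=bool)

    Oa = Ob = Ea = Eb = V = 0.0
    # Loop over the distinct times at which at least one event occurred.
    for t in np.unique(time[event]):
        at_risk = time >= t
        n = at_risk.sum()              # total at risk just before t
        na = (at_risk & in_a).sum()    # at risk in group a
        died = event & (time == t)
        d = died.sum()                 # events at t, both groups
        da = (died & in_a).sum()       # events at t in group a

        Oa += da
        Ob += d - da
        Ea += d * na / n
        Eb += d * (n - na) / n
        if n > 1:
            # Hypergeometric variance of da given the margins n, na, d.
            V += d * (na / n) * (1 - na / n) * (n - d) / (n - 1)

    hr_logrank = (Oa / Ea) / (Ob / Eb)
    hr_mantel_haenszel = np.exp((Oa - Ea) / V)
    return hr_logrank, hr_mantel_haenszel
```

Calling `hazard_ratios(time, event, group)` on the same data set returns both estimates side by side, which makes it easy to see how far apart they are for a given example. The sketch does not guard against degenerate inputs, such as a group with no observed events or a variance sum of zero.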

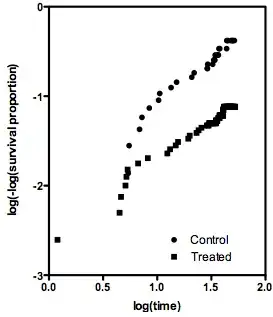

Someone sent me an example where the two methods differ by a factor of three. In this particular example, it is obvious that the logrank estimate is sensible, and the Mantel-Haenszel estimate is far off. Does anyone have general advice on when it is best to choose the logrank estimate of the hazard ratio, and when it is best to choose the Mantel-Haenszel estimate? Does it have to do with sample size? Number of ties? Ratio of sample sizes?