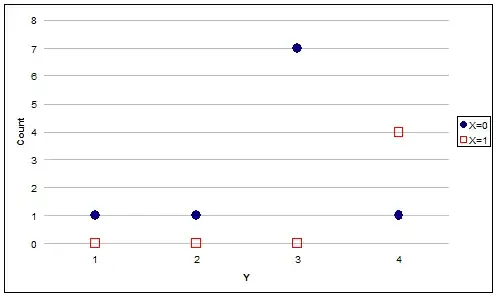

OLS regression makes no assumption about the distribution of $X$, so the 'clustering' in $X$ is not necessarily a problem.

In addition, points have less leverage* over the fitted regression line the closer they are to the mean of $X$. I note that the high-density cluster sits roughly where the mean would have been if $X$ had been uniformly distributed. Therefore, if you are worried that the cluster will have too much influence, I suspect those worries are misplaced and no weighting is needed.
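In simple regression the leverage of point $i$ is $h_{ii} = \frac{1}{n} + \frac{(x_i - \bar{x})^2}{\sum_j (x_j - \bar{x})^2}$, so it grows with the squared distance from $\bar{x}$. A minimal sketch, assuming a made-up design (a dense cluster in the middle of $X$, sparse points to the sides), computes the leverages from the hat matrix and checks them against that formula:

```python
import numpy as np

# Hypothetical design: sparse points on each side, a dense cluster in the middle.
x = np.concatenate([
    np.linspace(0, 3, 10),   # sparse, left
    np.linspace(4, 6, 80),   # dense cluster
    np.linspace(7, 10, 10),  # sparse, right
])
n = x.size

# Design matrix for simple linear regression: intercept + slope.
X = np.column_stack([np.ones(n), x])

# Leverages are the diagonal of the hat matrix H = X (X'X)^{-1} X'.
H = X @ np.linalg.inv(X.T @ X) @ X.T
h = np.diag(H)

# The same values from the closed form for simple regression.
xbar = x.mean()
h_formula = 1 / n + (x - xbar) ** 2 / np.sum((x - xbar) ** 2)

in_cluster = (x >= 4) & (x <= 6)
print("mean of X:", xbar)
print("mean leverage, cluster:", h[in_cluster].mean())
print("mean leverage, sparse points:", h[~in_cluster].mean())
```

Because this cluster sits at the mean of $X$, each clustered point carries close to the minimum possible leverage of $1/n$; the largest leverages belong to the isolated points at the extremes.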

On the other hand, if you are worried the cluster won't have enough influence (I don't interpret this as your concern), then you could use weighted least squares (WLS). You would develop a weighting scheme from some prior theoretical understanding that would make the clustered points more influential than the sparser points on either side.
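As a rough illustration of the mechanics only, here is a sketch of WLS for a straight line. The weights (3 inside the cluster, 1 outside) and the simulated data are purely hypothetical; in practice the weights should come from the theoretical considerations mentioned above. Multiplying each row by $\sqrt{w_i}$ turns WLS into an ordinary least-squares problem:

```python
import numpy as np

def wls(x, y, w):
    """Weighted least squares for y = b0 + b1*x with per-observation weights w.

    Scaling each row of the design matrix and the response by sqrt(w_i)
    reduces WLS to ordinary least squares on the transformed data.
    """
    X = np.column_stack([np.ones_like(x), x])
    sw = np.sqrt(w)
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta  # [intercept, slope]

# Simulated data with a dense cluster in the middle of X (hypothetical).
rng = np.random.default_rng(0)
x = np.concatenate([np.linspace(0, 3, 10), np.linspace(4, 6, 80), np.linspace(7, 10, 10)])
y = 1.0 + 0.5 * x + rng.normal(scale=0.5, size=x.size)

# Hypothetical weighting scheme: up-weight the clustered points by a factor of 3.
in_cluster = (x >= 4) & (x <= 6)
w = np.where(in_cluster, 3.0, 1.0)

beta_ols = wls(x, y, np.ones_like(x))  # equal weights reduce to plain OLS
beta_wls = wls(x, y, w)
print("OLS:", beta_ols)
print("WLS:", beta_wls)
```

The result is the same as solving the weighted normal equations $(X^\top W X)\hat\beta = X^\top W y$ directly; the square-root trick just avoids forming the $n \times n$ weight matrix.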

For example, if you repeatedly sample the same x-value ($x_j$), you should get an increasingly precise estimate of the vertical position of $f(x_j)$. As a general rule of study design, you should try to oversample locations in $X$ where you are most interested in knowing $f(X)$. However, if you oversample a region you don't care about, undersample the region you are primarily interested in, and your functional form is misspecified (e.g., you include only a linear term when a quadratic is required), then you could end up with biased estimates of $f(X)$ in the region of interest.
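A small noise-free sketch of that last point, assuming a hypothetical true function $f(x) = x^2$ and a location of interest $x_0 = 1.8$. Because there is no noise, any error in the prediction at $x_0$ is pure misspecification bias. A straight line is fit under two made-up designs: one that oversamples near 0 (which we don't care about) and one that oversamples near $x_0$:

```python
import numpy as np

def f(x):
    # Hypothetical true function: quadratic.
    return x ** 2

def fitted_at(x, degree, x0):
    """Fit a polynomial of the given degree to noise-free f(x) and predict at x0."""
    coef = np.polyfit(x, f(x), degree)
    return np.polyval(coef, x0)

x0 = 1.8  # hypothetical location of interest

# Design A: oversample the uninteresting region near 0, undersample near x0.
design_a = np.concatenate([np.linspace(0.0, 0.5, 90), np.linspace(1.5, 2.0, 10)])
# Design B: the reverse allocation of the same 100 points.
design_b = np.concatenate([np.linspace(0.0, 0.5, 10), np.linspace(1.5, 2.0, 90)])

for name, x in [("A", design_a), ("B", design_b)]:
    for degree in (1, 2):
        bias = fitted_at(x, degree, x0) - f(x0)
        print(f"design {name}, degree {degree}: bias at x0 = {bias:+.4f}")
```

With the misspecified linear model, design B gives a noticeably smaller bias at $x_0$ than design A; with the correctly specified quadratic, both designs recover $f(x_0)$ up to rounding error.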

If you are worried that the nature of the function $f(X)$ might be different inside the cluster than outside it, @user777's suggestion to use splines can accommodate that.
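I'm not sure exactly which spline @user777 had in mind; the sketch below uses one of the simplest, a continuous piecewise-linear regression spline built from a truncated power basis. The knot locations (the edges of a hypothetical cluster at 4 and 6) and the simulated data, where the slope is 1 outside the cluster and 3 inside it, are assumptions for illustration:

```python
import numpy as np

def linear_spline_basis(x, knots):
    """Truncated-power basis for a continuous piecewise-linear spline:
    columns 1, x, and (x - k)_+ for each knot k."""
    cols = [np.ones_like(x), x] + [np.maximum(x - k, 0.0) for k in knots]
    return np.column_stack(cols)

rng = np.random.default_rng(1)
x = np.concatenate([
    np.linspace(0, 4, 25, endpoint=False),   # sparse, left
    np.linspace(4, 6, 150, endpoint=False),  # dense cluster
    np.linspace(6, 10, 25),                  # sparse, right
])
# Hypothetical truth: slope 1 outside the cluster, slope 3 inside it.
f_true = x + 2 * np.maximum(x - 4, 0) - 2 * np.maximum(x - 6, 0)
y = f_true + rng.normal(scale=0.2, size=x.size)

knots = [4.0, 6.0]  # hypothetical: placed at the edges of the cluster
B = linear_spline_basis(x, knots)
coef, *_ = np.linalg.lstsq(B, y, rcond=None)

# coef[1] is the slope left of the first knot; each later coefficient
# is the change in slope at a knot, so the cumulative sums give segment slopes.
print("slopes by segment:", np.cumsum(coef[1:]))
```

Because the basis still contains $1$ and $x$, an ordinary straight line is a special case (all knot coefficients zero), so the spline only departs from linearity where the data call for it.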

\* Also see my answer here: Interpreting `plot.lm()`.