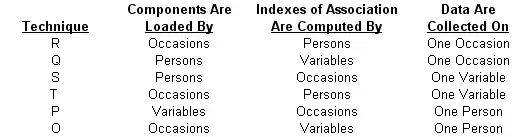

I have some doubts about Q-mode and R-mode principal component analysis (PCA). I've read in different sources that:

- Q-mode PCA is equivalent to R-mode PCA of the transposed data matrix!

- Q-mode PCA (with squared Euclidean distance) is equivalent to R-mode PCA (of the covariance matrix)!
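A minimal NumPy sketch of the second statement. It assumes that "Q-mode PCA with squared Euclidean distance" means principal coordinates analysis (classical scaling) of the Gower double-centred squared-distance matrix; `pca_scores` and `pcoa_scores` are hypothetical helper names. On random data, the eigenvalues should agree up to the covariance divisor $n-1$, and the object scores should agree up to the sign of each axis:

```python
import numpy as np

def pca_scores(X):
    """R-mode PCA: eigendecompose the p x p covariance matrix of column-centred X."""
    Xc = X - X.mean(axis=0)
    C = Xc.T @ Xc / (X.shape[0] - 1)
    evals, evecs = np.linalg.eigh(C)
    order = np.argsort(evals)[::-1]                # largest eigenvalue first
    evals, evecs = evals[order], evecs[:, order]
    return Xc @ evecs, evals                       # object scores, eigenvalues

def pcoa_scores(X):
    """Q-mode: principal coordinates of the n x n squared Euclidean distance matrix."""
    n = X.shape[0]
    sq = np.sum(X**2, axis=1)
    D2 = sq[:, None] + sq[None, :] - 2 * X @ X.T   # squared Euclidean distances
    J = np.eye(n) - np.ones((n, n)) / n            # centring matrix
    B = -0.5 * J @ D2 @ J                          # Gower double centring
    evals, evecs = np.linalg.eigh(B)
    order = np.argsort(evals)[::-1]
    evals, evecs = evals[order], evecs[:, order]
    k = min(n - 1, X.shape[1])                     # number of non-trivial axes
    coords = evecs[:, :k] * np.sqrt(np.clip(evals[:k], 0, None))
    return coords, evals[:k]

rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))                        # 8 objects, 3 variables
T_pca, lam_pca = pca_scores(X)
T_pcoa, lam_pcoa = pcoa_scores(X)
k = T_pcoa.shape[1]

# Eigenvalues differ only by the covariance divisor n - 1
print(np.allclose(lam_pcoa, (X.shape[0] - 1) * lam_pca[:k]))
# Scores agree once each axis's arbitrary sign is aligned
signs = np.sign(np.sum(T_pca[:, :k] * T_pcoa, axis=0))
print(np.allclose(T_pca[:, :k], T_pcoa * signs))
```

The equivalence rests on the double-centred matrix $B$ being equal to $X_c X_c^\top$, which shares its nonzero eigenvalues with $X_c^\top X_c$.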

It seems to me that these two statements are not equivalent. Can someone clarify this? Is Q-mode (squared Euclidean distance) = R-mode (covariance matrix) the only case where (proper) Q-mode and R-mode PCA give the same results?
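One way to see that the two statements can differ is to look at what gets centred. This assumes the transposed matrix is centred the usual R-mode way, by its columns. R-mode PCA of $X^\top$ then centres each original observation across the $p$ variables. Squared Euclidean distances, by contrast, are unaffected by shifting the columns of $X$, and Gower double centring corresponds to centring the original columns. The NumPy sketch below compares the nonzero eigenvalues of the two cross-product matrices on random data with nonzero means; `nonzero_spectrum` is a hypothetical helper:

```python
import numpy as np

def nonzero_spectrum(M, tol=1e-10):
    """Nonzero eigenvalues of M.T @ M (squared singular values of M), largest first."""
    ev = np.linalg.svd(M, compute_uv=False) ** 2
    return ev[ev > tol * ev[0]]

rng = np.random.default_rng(1)
X = rng.normal(loc=3.0, size=(6, 4))       # n = 6 observations, p = 4 variables

# R-mode PCA of X centres each variable, i.e. each column of X
Xc = X - X.mean(axis=0)

# R-mode PCA of X.T centres the columns of X.T, i.e. each original
# observation (row of X) is centred across the p variables
Xt = X.T
Xtc = Xt - Xt.mean(axis=0)

ev_r = nonzero_spectrum(Xc)
ev_t = nonzero_spectrum(Xtc)
print(len(ev_r), len(ev_t))                 # number of nonzero eigenvalues in each case
print(np.allclose(ev_r[:len(ev_t)], ev_t))  # do the spectra coincide?
```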

If I perform an R-mode PCA on the transposed data matrix, wouldn't I be working with an $n<p$ matrix? Would it be OK to perform a PCA in that case? If yes, what's the difference from performing R-mode PCA on an ordinary $n<p$ data matrix? If not, do I need more variables than observations to run Q-mode PCA?
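Numerically, nothing prevents a PCA on an $n<p$ matrix. The $p \times p$ covariance matrix is still symmetric positive semidefinite, but its rank is at most $n-1$, so at most $n-1$ components carry nonzero variance. A short NumPy check on random data (the sizes are arbitrary):

```python
import numpy as np

rng = np.random.default_rng(2)
n, p = 5, 12                                 # fewer observations than variables
X = rng.normal(size=(n, p))
Xc = X - X.mean(axis=0)                      # R-mode: centre each variable
C = Xc.T @ Xc / (n - 1)                      # p x p covariance matrix
evals = np.linalg.eigvalsh(C)[::-1]          # eigenvalues, largest first
n_pos = int(np.sum(evals > 1e-10 * evals[0]))
print(C.shape, n_pos)                        # rank of C is at most n - 1
print(np.isclose(evals.sum(), np.trace(C)))  # total variance is still preserved
```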

… p, in my comment). Programmatically, however, if you do PCA via eigendecomposition, it will be slower for `n<p`. Therefore it is often advised to transpose the data first. Then the nonzero eigenvalues are the same, but the eigenvectors are the left (row) ones, not the right (column) ones, so the right eigenvectors have to be computed indirectly (which is simple). This topic has been discussed on this site numerous times.
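The transposition trick can be sketched in NumPy as follows. This assumes column-centred data and the covariance divisor $n-1$; `pca_via_gram` is a hypothetical name, not a library function. It eigendecomposes the small $n \times n$ matrix $X_c X_c^\top$ and maps each eigenvector $u$ back to a $p$-dimensional loading vector $v = X_c^\top u / \sqrt{\lambda}$. It then compares the result against the direct $p \times p$ route:

```python
import numpy as np

def pca_via_gram(X):
    """PCA of column-centred X through the n x n Gram matrix (cheaper when n < p).

    Returns the covariance eigenvalues and the p-dimensional eigenvectors
    (loadings), recovered from the n-dimensional eigenvectors of the Gram matrix.
    """
    n = X.shape[0]
    Xc = X - X.mean(axis=0)
    G = Xc @ Xc.T                                 # n x n instead of p x p
    evals, U = np.linalg.eigh(G)
    order = np.argsort(evals)[::-1]               # largest eigenvalue first
    evals, U = evals[order], U[:, order]
    keep = evals > 1e-10 * evals[0]               # drop numerically zero eigenvalues
    evals, U = evals[keep], U[:, keep]
    V = Xc.T @ U / np.sqrt(evals)                 # v = Xc' u / sqrt(lambda), per column
    return evals / (n - 1), V

rng = np.random.default_rng(3)
X = rng.normal(size=(5, 200))                     # n = 5, p = 200
lam_g, V_g = pca_via_gram(X)

# Direct route for comparison: eigendecompose the 200 x 200 covariance matrix
Xc = X - X.mean(axis=0)
lam_c, V_c = np.linalg.eigh(Xc.T @ Xc / (X.shape[0] - 1))
m = len(lam_g)
lam_c, V_c = lam_c[::-1][:m], V_c[:, ::-1][:, :m]  # largest m eigenpairs

print(np.allclose(lam_g, lam_c))
print(np.allclose(np.abs(V_g.T @ V_c), np.eye(m)))  # same axes up to sign
```

Dividing by $\sqrt{\lambda}$ rescales each recovered loading vector to unit length, because $\lVert X_c^\top u \rVert^2 = u^\top X_c X_c^\top u = \lambda$.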

– ttnphns Dec 02 '15 at 15:22