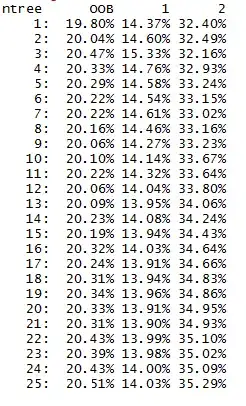

I am running a random forest on a binary classification problem with about 30 explanatory variables. Please have a look at the screenshot above. The out-of-bag (OOB) error for class 2 increases as the number of trees increases. This looks odd, as I would expect the OOB error to decrease as more trees are added.
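To make the plotted quantity concrete, here is a minimal sketch in Python of how a per-class OOB error curve can be computed. The tool that produced the screenshot is not stated, so this is not its implementation. After the first k trees, each sample's OOB prediction is the majority vote of those trees for which it was out-of-bag, and the error for a class is the share of that class's samples misclassified by this vote. The function name `oob_error_curve`, the input format, the tie-breaking rule and the skipping of samples that have no OOB votes yet are all hypothetical choices made for illustration.

```python
from collections import Counter


def oob_error_curve(oob_votes, y_true):
    """Per-class OOB error after each additional tree (hypothetical helper).

    oob_votes: list with one dict per tree, mapping sample index -> predicted
               class, containing only the samples that were out-of-bag
               for that tree.
    y_true:    list of true class labels.
    Returns a list with one dict per tree count k, mapping each class to its
    OOB error rate when only the first k trees are used.
    """
    classes = sorted(set(y_true))
    tallies = [Counter() for _ in y_true]  # running OOB vote counts per sample
    curve = []
    for tree_votes in oob_votes:
        # Add this tree's votes for the samples it did not see in training.
        for i, pred in tree_votes.items():
            tallies[i][pred] += 1
        errors = {}
        for c in classes:
            wrong = total = 0
            for i, label in enumerate(y_true):
                # Skip other classes and (assumption) samples with no OOB vote yet.
                if label != c or not tallies[i]:
                    continue
                total += 1
                # Majority vote; ties go to the smallest label (an assumption).
                top = max(tallies[i].values())
                pred = min(k for k, v in tallies[i].items() if v == top)
                wrong += pred != label
            errors[c] = wrong / total if total else float("nan")
        curve.append(errors)
    return curve


# Toy input: 4 samples (two of class 1, two of class 2) and 3 trees.
y_true = [1, 1, 2, 2]
oob_votes = [
    {0: 1, 2: 2},        # tree 1: samples 0 and 2 were out-of-bag
    {1: 1, 2: 1, 3: 2},  # tree 2
    {2: 1, 3: 1},        # tree 3
]
curve = oob_error_curve(oob_votes, y_true)
print([e[2] for e in curve])  # class-2 error after 1, 2 and 3 trees
```

On this toy input the class-2 error goes 0.0, 0.5, 1.0 while the class-1 error stays at 0.0: each point on the curve is a running estimate based on the votes accumulated so far, so it is not guaranteed to fall as trees are added.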

Can somebody explain this or point me in the right direction?