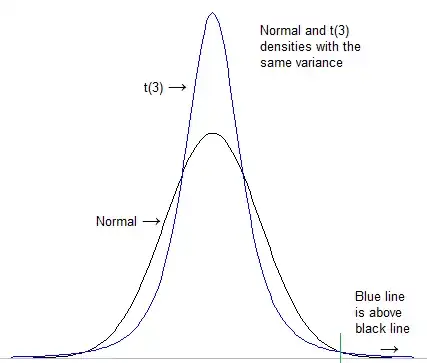

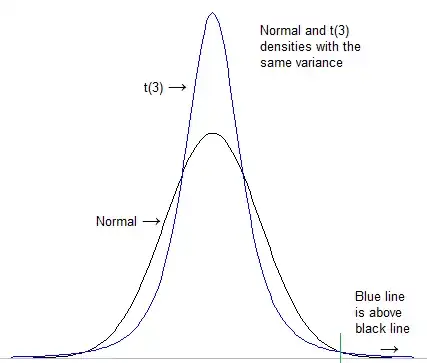

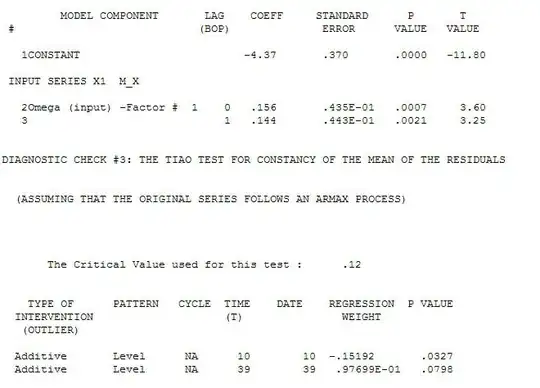

The first thing to do is to formalize what we mean by "heavier tail". One could notionally look at how high the density is in the extreme tail after standardizing both distributions to have the same location and scale (e.g. standard deviation):

(from this answer, which is also somewhat relevant to your question)

[For this case, the scaling doesn't really matter in the end; the t will still be "heavier" than the normal even if you use very different scales; the normal always goes lower eventually]

However, that definition - while it works okay for this particular comparison - doesn't generalize very well.

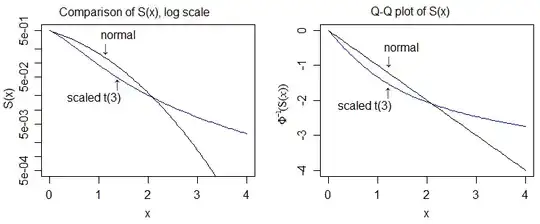

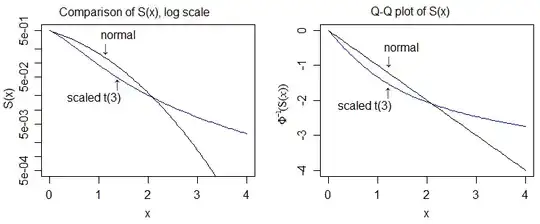

More generally, a much better definition is in whuber's answer here. So if $Y$ is heavier-tailed than $X$, as $t$ becomes sufficiently large (for all $t>$ some $t_0$), then $S_Y(t)>S_X(t)$, where $S=1-F$, where $F$ is the cdf (for heavier-tailed on the right; there's a similar, obvious definition on the other side).

Here it is on the log-scale, and on the quantile scale of the normal, which allows us to see more detail:

So then the "proof" of heavier tailedness would involve comparing cdfs and showing that the upper tail of the t-cdf eventually always lies above that of the normal and the lower tail of the t-cdf eventually always lies below that of the normal.

In this case the easy thing to do is to compare the densities and then show that the corresponding relative position of the cdfs (/survivor functions) must follow from that.

So for example if you can argue that (at some given $\nu$)

$ x^2 - (\nu+1) \log(1+\frac{x^2}{\nu}) > 2\cdot\log(k)\qquad^\dagger$

for the necessary constant $k$ (a function of $\nu$), for all $x>$ some $x_0$, then it would be possible to establish a heavier tail for $t_\nu$ also on the definition in terms of bigger $1-F$ (or bigger $F$ on the left tail).

$^\dagger$ (this form follows from the difference of the log of the densities, if that holds the necessary relationship between the densities holds)

[It's actually possible to show it for any $k$ (not just the particular one we need coming from the relevant density normalizing constants), so the result must hold for the $k$ we need.]