It really depends on your needs.

However, with regression and other "linear-model" problems (such as GLMs), the standard choice is orthogonal polynomials with respect to the observed set of $x$ values (usually just called "orthogonal polynomials" in regression-type contexts). Many packages provide them (e.g. poly in R provides such a basis - you supply x and the desired degree).

That is, if $P$ is the resulting "x-matrix" (not counting the constant column) where the columns represent the linear, quadratic etc components, then $P^\top P=I$.

Like so:

> x=sort(rnorm(10,6,2))

> P=poly(x,4)

> round(crossprod(P),8) # round to 8dp

1 2 3 4

1 1 0 0 0

2 0 1 0 0

3 0 0 1 0

4 0 0 0 1

(This property extends to the constant column if you appropriately normalize it, but it's usually left as-is, so the diagonal of $X^\top X$ would then have a $1,1$ element of $n$ rather than $1$.)

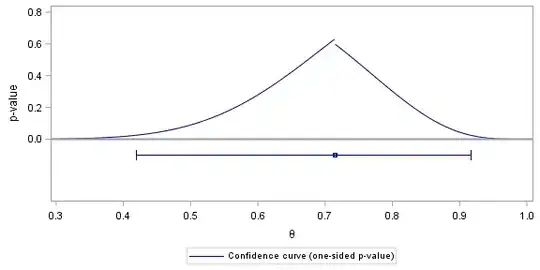

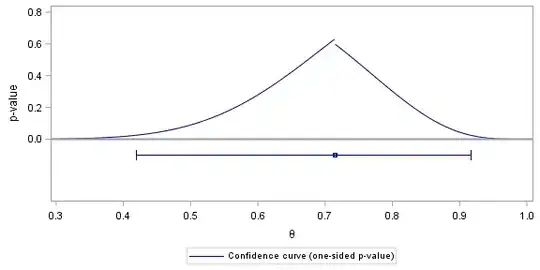

For that particular set of x-values*, they look like this:

These have a number of distinct advantages over other choices (including that the parameter estimates are uncorrelated).

Some references that may be of some use to you:

Sabhash C. Narula (1979),

"Orthogonal Polynomial Regression,"

International Statistical Review, 47:1 (Apr.), pp. 31-36

Kennedy, W. J. Jr and Gentle, J. E. (1980),

Statistical Computing, Marcel Dekker.

* on the off chance anyone cares about the particular values in the example:

x

[1] 4.326638 4.458292 4.459983 4.574794 5.312988 5.380251 7.425735

[8] 8.601912 9.189405 10.864584