I am trying to model data that clearly looks seasonal. However, I only pick up seasonality in very small subsets of the data, and only after I add lagged variables and remove the trend.

First, I found the trend that best approximates the data. Then I determined which seasonal dummies were relevant (there were none), and finally I looked at the correlogram of the residuals from this model to determine the AR process.
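For concreteness, the three-step procedure above might look like the following minimal sketch. It assumes quarterly data (four seasons, with the first observation in season 1), uses simulated data rather than the actual series, and the helpers `seasonal_dummies`, `fit_ols` and `acf` are hypothetical names written with plain NumPy, not any particular package's API.

```python
import numpy as np

def seasonal_dummies(n, period=4, start=0):
    """Return an (n, period-1) matrix of dummies for seasons 2..period (season 1 is the base)."""
    season = (np.arange(n) + start) % period  # 0-based season index
    return np.column_stack([(season == s).astype(float) for s in range(1, period)])

def fit_ols(y, X):
    """OLS via least squares; returns coefficients and residuals."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta, y - X @ beta

def acf(x, nlags):
    """Sample autocorrelations r_1..r_nlags of a series."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    denom = np.dot(x, x)
    return np.array([np.dot(x[k:], x[:-k]) / denom for k in range(1, nlags + 1)])

# Hypothetical quarterly series: linear trend + AR(1) noise + a shift in season 4
rng = np.random.default_rng(0)
n = 120
t = np.arange(n, dtype=float)
e = np.zeros(n)
for i in range(1, n):
    e[i] = 0.7 * e[i - 1] + rng.normal()
y = 2.0 + 0.05 * t + 1.0 * ((t % 4) == 3) + e

# Steps 1-2: regress on a constant, a linear trend and seasonal dummies
X = np.column_stack([np.ones(n), t, seasonal_dummies(n)])
beta, resid = fit_ols(y, X)

# Step 3: correlogram of the residuals, used to suggest an AR order
print(np.round(acf(resid, 8), 2))
```

Slowly decaying residual autocorrelations here would point toward an autoregressive error process that the trend-plus-dummies regression leaves unmodelled.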

Once I added the lagged variables, the trend became statistically insignificant, so I removed it. I also thought it was odd that I found no seasonality, so on a whim I added my seasonal dummies after the lags. Season 4 was then highly significant!
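A model of the kind described above (lags of the dependent variable plus a season-4 dummy, no trend) can be sketched as a single OLS regression. This is an illustration under assumptions: the data are simulated from a hypothetical AR(2) process with a season-4 shift, quarterly seasons are assumed, and `lagged_design` and `ols_tstats` are hypothetical helpers using conventional (non-robust) OLS standard errors.

```python
import numpy as np

def lagged_design(y, p, dummy=None):
    """Build y_t and [1, y_{t-1}, ..., y_{t-p}, (dummy_t)] for t = p..n-1."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    cols = [np.ones(n - p)] + [y[p - k : n - k] for k in range(1, p + 1)]
    if dummy is not None:
        cols.append(np.asarray(dummy, dtype=float)[p:])
    return y[p:], np.column_stack(cols)

def ols_tstats(y, X):
    """OLS coefficients and conventional t-statistics."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n, k = X.shape
    s2 = resid @ resid / (n - k)                 # residual variance
    cov = s2 * np.linalg.inv(X.T @ X)            # classical OLS covariance
    return beta, beta / np.sqrt(np.diag(cov))

# Hypothetical data-generating process: AR(2) plus a season-4 shift
rng = np.random.default_rng(42)
n = 400
d4 = (np.arange(n) % 4 == 3).astype(float)  # assumes observation 0 is season 1
y = np.zeros(n)
for i in range(2, n):
    y[i] = 1.0 + 0.5 * y[i - 1] + 0.2 * y[i - 2] + 1.5 * d4[i] + rng.normal()

yy, X = lagged_design(y, p=2, dummy=d4)
beta, tstats = ols_tstats(yy, X)
for name, b, ts in zip(["const", "y(-1)", "y(-2)", "season4"], beta, tstats):
    print(f"{name:8s} {b: .3f}  t={ts: .2f}")
```

The printed values depend on the simulated draw; the point is only that the lags and the dummy enter the same regression, so each coefficient is estimated holding the others fixed.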

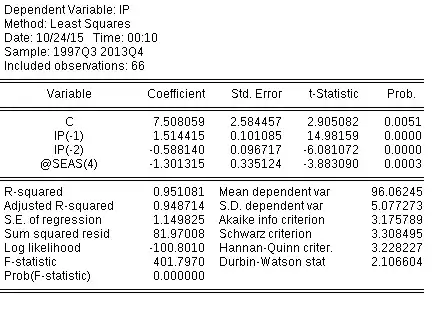

I then experimented with different combinations of seasonal dummies and found that, of all the models I constructed, the one with the lowest SIC (Schwarz Information Criterion, as listed in the output below) has two lags and one seasonal dummy.
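The SIC search described above can be sketched as a loop over lag orders and subsets of seasonal dummies. Assumptions: quarterly seasons, simulated data, hypothetical helpers `sic` and `candidate_design`, and the criterion in the form n·ln(SSR/n) + k·ln(n). Software output often reports a per-observation, log-likelihood-based version instead, but for a fixed estimation sample the ranking of models should be the same. Every candidate is fit on the same sample (dropping the first `maxlag` observations), because SIC values computed on different samples are not comparable.

```python
import numpy as np
from itertools import combinations

def sic(y, X):
    """Schwarz criterion as n*ln(SSR/n) + k*ln(n); lower is better."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    n, k = X.shape
    return n * np.log(resid @ resid / n) + k * np.log(n)

def candidate_design(y, p, seasons, period=4, maxlag=4):
    """Constant, p lags of y, and dummies for the listed seasons (1-based),
    all on the common sample t = maxlag..n-1 so SIC values are comparable."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    t = np.arange(maxlag, n)
    cols = [np.ones(n - maxlag)]
    cols += [y[maxlag - k : n - k] for k in range(1, p + 1)]
    cols += [((t % period) == s - 1).astype(float) for s in seasons]
    return y[maxlag:], np.column_stack(cols)

# Hypothetical series: AR(2) plus a season-4 shift
rng = np.random.default_rng(1)
n = 200
d4 = (np.arange(n) % 4 == 3).astype(float)
y = np.zeros(n)
for i in range(2, n):
    y[i] = 1 + 0.5 * y[i - 1] + 0.2 * y[i - 2] + 1.5 * d4[i] + rng.normal()

# At most 3 of 4 dummies alongside the constant, to avoid the dummy-variable trap
results = []
for p in range(0, 5):
    for r in range(0, 4):
        for seasons in combinations((1, 2, 3, 4), r):
            yy, X = candidate_design(y, p, seasons)
            results.append((sic(yy, X), p, seasons))

best = min(results)
print("lowest SIC:", best)
```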

The most important question is about the role of the lags in my model. Why would controlling for serial correlation first allow me to detect seasonality? Does the order in which I control for these dynamics matter? Could I control for cycles, then seasons, then trend, or should these dynamics be handled in a specific sequence?