This may be a very basic question, but as a beginner in machine learning I still cannot figure out the answer.

In Andrew Ng's machine learning course, he explains why we need feature scaling (see http://www.holehouse.org/mlclass/04_Linear_Regression_with_multiple_variables.html, section "Gradient Descent in practice: 1 Feature Scaling").

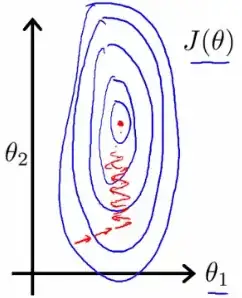

He explains that we need to make the ranges of the features similar. Otherwise the contours of the cost function become very elongated, which makes gradient descent slow to converge. He also drew a picture illustrating this.
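To see this slowdown numerically, here is a minimal sketch. It assumes NumPy and made-up housing-style data. The feature ranges, the step-size rule (alpha = 1 / largest Hessian eigenvalue), the tolerance, the iteration cap, and the helper names `gd_iterations`, `design_matrix` and `standardize` are all my own assumptions, not from the course. It counts batch gradient descent iterations with raw versus standardized features.

```python
import numpy as np

rng = np.random.default_rng(0)
m = 100

# Hypothetical housing-style data (assumption, not from the course):
# size in square feet spans roughly 500-2000, bedrooms spans 1-5.
size = rng.uniform(500, 2000, m)
bedrooms = rng.integers(1, 6, m).astype(float)
y = 50 + 0.1 * size + 20 * bedrooms + rng.normal(0, 10, m)


def design_matrix(features):
    """Prepend a column of ones (the intercept feature x0 = 1)."""
    return np.column_stack([np.ones(len(features[0]))] + list(features))


def standardize(v):
    """Mean normalization plus scaling: (x - mean) / std."""
    return (v - v.mean()) / v.std()


def gd_iterations(X, y, tol=1e-6, max_iter=20_000):
    """Batch gradient descent on J(theta) = 1/(2m) * ||X theta - y||^2.

    Uses alpha = 1 / (largest eigenvalue of the Hessian X^T X / m), a stable
    step size, and returns the number of iterations until the gradient norm
    drops below tol (or max_iter if that never happens).
    """
    m = len(y)
    H = X.T @ X / m
    alpha = 1.0 / np.linalg.eigvalsh(H)[-1]  # eigvalsh sorts ascending
    theta = np.zeros(X.shape[1])
    for i in range(max_iter):
        grad = X.T @ (X @ theta - y) / m
        if np.linalg.norm(grad) < tol:
            return i
        theta -= alpha * grad
    return max_iter


X_raw = design_matrix([size, bedrooms])
X_scaled = design_matrix([standardize(size), standardize(bedrooms)])

unscaled_iters = gd_iterations(X_raw, y)
scaled_iters = gd_iterations(X_scaled, y)
print("unscaled:", unscaled_iters, "iterations")
print("scaled:  ", scaled_iters, "iterations")
```

The step size has to stay below a bound set by the steepest direction. Progress along the flattest direction is then very slow when the two curvatures differ by orders of magnitude, which is the elongated-contour situation.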

I agree that if the ranges of the thetas are similar, the contours will be more circular.
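One way to make "more circular" precise assumes linear regression with the squared-error cost from the lecture. The notation $X$ for the $m \times (n+1)$ design matrix whose rows are the examples $x^{(i)}$, $H$ for the Hessian, and $\lambda$ for its eigenvalues is my own. Because $J$ is exactly quadratic in $\theta$ and its gradient vanishes at the minimizer $\theta^*$, it can be written as below. Each contour $J(\theta) = c$ is then an ellipse whose semi-axes point along the eigenvectors of $H$, with lengths $\sqrt{2(c - J(\theta^*))/\lambda_k}$:

$$
J(\theta) = \frac{1}{2m}\lVert X\theta - y\rVert^2
= J(\theta^{*}) + \frac{1}{2}(\theta - \theta^{*})^{\top} H\,(\theta - \theta^{*}),
\qquad
H = \frac{1}{m}X^{\top}X,\quad H_{jk} = \frac{1}{m}\sum_{i=1}^{m} x_j^{(i)} x_k^{(i)}
$$

$$
\frac{\text{longest semi-axis}}{\text{shortest semi-axis}} = \sqrt{\frac{\lambda_{\max}(H)}{\lambda_{\min}(H)}}
$$

The contours are circular exactly when all eigenvalues of $H$ are equal. This assumes $X$ has full column rank, so that $\lambda_{\min}(H) > 0$.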

However, what we scale to make the ranges similar are the input features, not the thetas.
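A small sketch of why scaling the inputs changes the contour shape. It assumes NumPy and two hypothetical features, x1 in 0-2000 and x2 in 1-5. It drops the intercept so that only $\theta_1, \theta_2$ are plotted. The divide-by-upper-bound and (x - mean) / range scalings and the helper names are my own choices. The function measures how elongated the contours of $J$ are from the Hessian $X^\top X / m$, which is built from the inputs alone ($y$ never appears).

```python
import numpy as np

rng = np.random.default_rng(1)
m = 200
# Hypothetical features (assumption): x1 spans 0-2000, x2 spans 1-5.
x1 = rng.uniform(0, 2000, m)
x2 = rng.uniform(1, 5, m)


def contour_elongation(x1, x2):
    """Longest/shortest semi-axis ratio of the contours of
    J(theta) = 1/(2m) * ||X theta - y||^2 with X = [x1, x2] (no intercept).

    The Hessian H = X^T X / m depends only on the inputs; y never enters.
    """
    X = np.column_stack([x1, x2])
    H = X.T @ X / len(x1)
    eigs = np.linalg.eigvalsh(H)  # eigenvalues in ascending order
    return np.sqrt(eigs[-1] / eigs[0])


def mean_normalize(x):
    """(x - mean) / range, one common form of mean normalization."""
    return (x - x.mean()) / (x.max() - x.min())


raw = contour_elongation(x1, x2)
divided = contour_elongation(x1 / 2000, x2 / 5)  # divide by each feature's upper bound
normalized = contour_elongation(mean_normalize(x1), mean_normalize(x2))
print(f"raw: {raw:.1f}  divided: {divided:.1f}  mean-normalized: {normalized:.2f}")
```

Only the inputs were touched, yet the ellipses in $(\theta_1, \theta_2)$ space go from very elongated to nearly circular.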

This suggests to me that there must be a strong relationship between the ranges of the thetas and the ranges of the input features: if the features have similar ranges, then the thetas also have similar ranges. However, I cannot figure out why this is or how to prove it.
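A quick numerical check of the suspected link follows. It assumes NumPy and synthetic single-feature data; the data, the scale factor `s`, and the helper `fit` are hypothetical. If $\tilde{x} = x / s$, then $\tilde{\theta}_1 = s\,\theta_1$ gives identical predictions because $\tilde{\theta}_1 \tilde{x} = \theta_1 x$. The least-squares optimum for the scaled feature should therefore be exactly $s$ times the original one. This only illustrates the scaling relation at the optimum; it is a sketch, not a proof about gradient descent.

```python
import numpy as np

rng = np.random.default_rng(2)
m = 50
# Hypothetical data (assumption): one feature on a large scale plus an intercept.
x = rng.uniform(0, 2000, m)
y = 3.0 + 0.05 * x + rng.normal(0, 1, m)


def fit(x, y):
    """Least-squares fit of y ~ theta0 + theta1 * x; returns [theta0, theta1]."""
    X = np.column_stack([np.ones_like(x), x])
    theta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return theta


s = 2000.0  # scale factor, e.g. dividing the feature by its upper bound
theta_raw = fit(x, y)
theta_scaled = fit(x / s, y)

# Dividing the feature by s multiplies its optimal parameter by s,
# because theta1_scaled * (x / s) must equal theta1_raw * x for every x.
print(theta_raw, theta_scaled)
print(np.isclose(theta_scaled[1], s * theta_raw[1]))  # True
print(np.isclose(theta_scaled[0], theta_raw[0]))      # True: intercept unchanged
```

So shrinking a feature's range by a factor $s$ stretches the range of its optimal theta by the same factor, which is why the two ranges move together.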

Could you please point out what I am missing?