The assumption that the conditional variance is equal to the unconditional variance, together with the assumption that $E(\varepsilon_i)=0$, does imply zero conditional mean, namely

$$\{{\rm Var}(\varepsilon_i \mid X_i) = {\rm Var}(\varepsilon_i)\} \;\text {and}\;\{E(\varepsilon_i)=0\}\implies E(\varepsilon_i \mid X_i)=0 \tag{1}$$

The two assumptions imply that

$$E(\varepsilon_i^2 \mid X_i) -[E(\varepsilon_i \mid X_i]^2 = E(\varepsilon_i^2)$$

$$\implies E(\varepsilon_i^2 \mid X_i) - E(\varepsilon_i^2) = [E(\varepsilon_i \mid X_i]^2$$

Ad absurdum, assume that $E(\varepsilon_i \mid X_i)\neq 0 \implies [E(\varepsilon_i \mid X_i]^2 >0$

This in turn implies that $E(\varepsilon_i^2 \mid X_i) > E(\varepsilon_i^2)$. By the law of iterated expectations we have $E(\varepsilon_i^2) = E\big[ E(\varepsilon_i^2 \mid X_i)\big]$. For clarity set $Z \equiv E(\varepsilon_i^2 \mid X_i)$. Then we have that

$$E(\varepsilon_i \mid X_i)\neq 0 \implies Z > E(Z)$$

But this cannot be since a random variable cannot be strictly greater than its own expected value. So $(1)$ must hold.

Note that the reverse is not necessarily true.

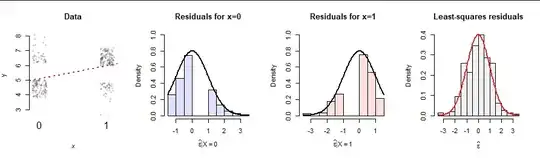

As for providing an example to show that even if the above results hold, and even under the marginal normality assumption, the conditional distribution is not necessarily identical to the marginal (which would establish independence), whuber beat me to it.