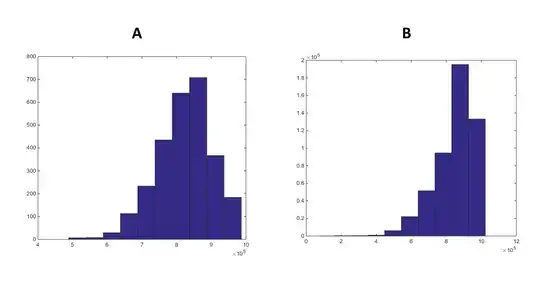

I have two variables (A and B). A has 3,000 samples; B has 500,000. They represent cumulative solar radiation (summer 2000) in Alta Valtellina (Italy), divided in two major areas (A and B) which I need to compare. Below are the histograms of my datasets:

As far as I know, a two-sample t-test won't be ideal here because my samples are too large (see my previous question). So I thought about using the Cohen's D test. Running the kstest I know the distribution of these variables is not normal (output is 1, meaning "rejecting the Null Hypothesis that data is normally distributed"). Does the Cohen's D test needs the data to be normally distributed (I could not find a reference for this)?. If so, should I have to transform my data, or would someone suggest a better test for my case?

EDIT

At present I am writing a scientific paper, and only with a good justification like a statistical test (or a statistical comparison) I woul be able (or not) to say that my datasets differ from each other at a certain degree. If they are different (what I expect from the figure above, but I might be wrong), I would use the statistics of A (which is a sample of solar radiation pixels of a large area) to classifiy the remaining pixels of my study area (if they are similar to A I would assign them the same classification of A; B otherwise).