First of all, deterministic (i.e. the same computation always yields the same result) does not imply error-free (not even free of random error!).

That being said, both ways of modeling, $y = f (x)$ as well as $x = f (y)$ are used in chemometrics. The former is known as ordinary or classical regression or calibration, the latter is referred to as inverse calibration.

Both ways of calibration have different characteristics.

- As the comments already pointed out, one important difference is in the assumption where most of the variance is: on $x$ or on $y$.

- In addition, if the purpose of the model is prediction, you should predict forwards, i.e. in the direction that is modeled. That is, if you want to predict experimental results, model $experiment = f (theory)$.

- On the other hand, if you assume the error is on your theoretical model and you are interested in the relationship between theoretical model and experimental results (remember: experimental science makes the fundamental point that if experiment and theory do not agree, it is theory that needs to change) and you e.g. need to measure the slope between experiment and theory, then model $theory = f (experiment)$

- (In chemical analysis as in measuring concentrations, there are more important characteristics, wrt. to how multivariate measurements are used, and whether all constituents of the system are known - but these are probably not relevant for your problem).

I work with vibrational spectra which are sometimes computed for molecules in vacuum and then a correction factor is applied to account for the difference between vacuum (theory) and solution (reality/experiment). While I may be biased because I'm experimental spectroscopist, I'd say that at least in this situation it is very clear where the major source of error is...

About the DIN: are you referring to DIN 32465 or 38402?

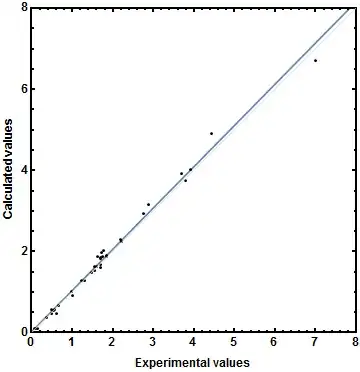

They give formulas that are valid only under the stated assumptions (which are also in chemical analysis very often not met, particularly the variance homogeneity (also your graph looks like increasing variance with increasing values). The consequence is that you cannot use the "shortcut" formulas but have to do the proper calculation. In particular, you'd underestimate the uncertainty for high values and overestimate uncertainty for low values. From a statistics point of view, a weighted regression would be appropriate.

However, partiularly if you need to measure figures of merit such as limit of detection LOD or limit of quantitation LOQ, there are straightforward (though tedious) methods to do this almost without assumptions.