If the $X_i$ are iid Normal(0,1), then a sample of them won't have sample mean exactly 0 or sample standard deviation exactly 1, simply because of random variation.

Now consider what happens when we standardize the sample by computing $Z=\frac{X-\overline{X}}{s_X}$.

While we do now have sample mean 0 and sample standard deviation 1, what we don't have is $Z$ being normally distributed.

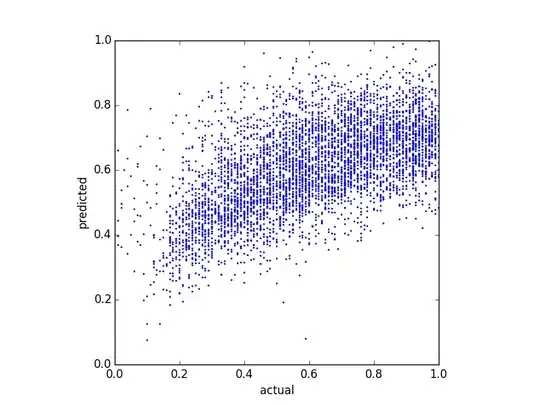

In small to moderate sample sizes, it has short tails and substantially smaller kurtosis than a standard normal. Indeed, from simulation with samples of size $n=10$, it looks very similar to a beta(4,4) that has been rescaled to lie in (-3,3):

(The x-axis is a random sample from a beta(4,4) scaled to (-3,3). Of course, similarity in a plot doesn't by itself mean the distribution shape is a beta(4,4). Edit: as Henry points out, it is in fact a Beta(4,4).)

The values in `res` were generated as follows:

    # 100000 standardized samples, each of size 10, drawn from N(0,1)
    res <- replicate(100000, scale(rnorm(10)))

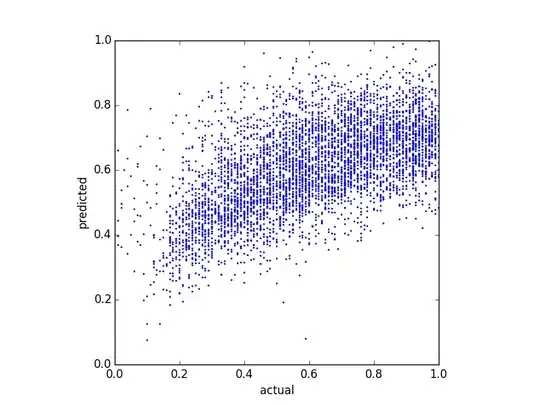

For samples of size 5, the result looks rather like a scaled beta(3/2,3/2).
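Both cases fit one pattern. As a stated fact rather than a derivation (it is the standard result for internally studentized values from a normal sample, and the subscript $i$ is added here for an individual standardized value), for $n \ge 3$:

$$\frac{1}{2}\left(1+\frac{\sqrt{n}}{n-1}\,Z_i\right)\sim \text{Beta}\left(\frac{n-2}{2},\frac{n-2}{2}\right),\qquad |Z_i|\le\frac{n-1}{\sqrt{n}}$$

With $n=10$ this gives Beta(4,4) on roughly $(-2.85, 2.85)$, close to the (-3,3) used for the plot; with $n=5$ it gives Beta(3/2,3/2) on roughly $(-1.79, 1.79)$. Since a symmetric Beta$(a,a)$ has excess kurtosis $-6/(2a+3)$, the excess kurtosis of $Z_i$ is $-6/(n+1)$, which is about $-0.55$ at $n=10$ and approaches 0 only slowly as $n$ grows.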

Further, the values within each sample are no longer independent: they are constrained to sum to 0, and their squares to sum to $n-1$.
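These constraints are easy to check by simulation. The following is a minimal sketch, not part of the original analysis; the seed, the number of replications `nsim`, and all variable names are arbitrary choices made for illustration.

```r
set.seed(42)      # arbitrary seed, only for reproducibility
n    <- 10        # sample size
nsim <- 20000     # number of simulated samples (assumed, kept small for speed)

# Each column of z is one standardized sample of size n
z <- replicate(nsim, as.vector(scale(rnorm(n))))

# Constraints that hold within every sample
max_abs_sum <- max(abs(colSums(z)))   # zero up to floating-point error
range_sumsq <- range(colSums(z^2))    # both ends equal n - 1

# Dependence: correlation between the first and second value across samples
cor12 <- cor(z[1, ], z[2, ])          # close to -1/(n - 1)

# Kurtosis of the pooled values (fourth moment over squared second moment;
# the pooled mean is 0 because every sample has mean 0)
v    <- as.vector(z)
kurt <- mean(v^4) / mean(v^2)^2       # noticeably below 3

# Largest absolute standardized value observed
max_abs <- max(abs(v))                # never exceeds (n - 1) / sqrt(n)
```

The negative correlation follows from the sum-to-zero constraint: the values are exchangeable, so each pair must have correlation $-1/(n-1)$ for the variance of the sum to be 0.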