I have pairs of pictures of participants making facial expressions (240 pairs per participant, 11 participants). Two raters agreed on a criterion and then rated each pair on a scale from 1 to 6, according to how similar the two expressions within the pair were.

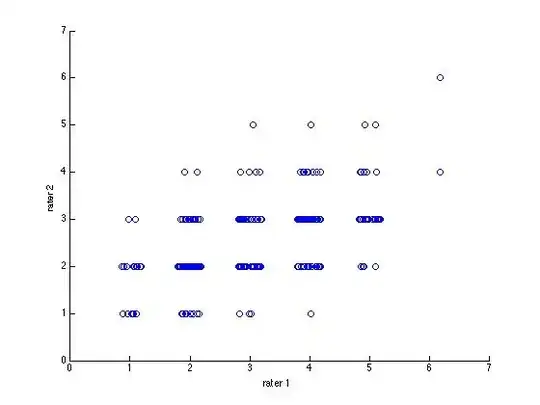

When I calculate the intraclass correlation coefficient for each participant (consistency, not absolute agreement) I get values of around .5. The points do more or less cluster around the diagonal (indicating ok-ish agreement) but it's clearly not great.
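For concreteness, the single-rater consistency ICC, often written ICC(C,1) or ICC(3,1), can be sketched from the two-way ANOVA mean squares as below. This is only an illustration under assumptions, not necessarily the exact computation behind the values quoted above: the function name `icc_consistency` and the example ratings are hypothetical, and the data are assumed to be a complete pairs-by-raters table with no missing ratings.

```python
import numpy as np


def icc_consistency(ratings):
    """Single-rater consistency ICC, i.e. ICC(C,1), from a two-way layout.

    `ratings` is an (n_items, n_raters) array with no missing values.
    Hypothetical helper written for illustration only.
    """
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()

    # Sums of squares for a two-way ANOVA without replication.
    ss_total = ((x - grand) ** 2).sum()
    ss_rows = k * ((x.mean(axis=1) - grand) ** 2).sum()  # between items
    ss_cols = n * ((x.mean(axis=0) - grand) ** 2).sum()  # between raters
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Consistency form: rater main effects (ss_cols) are left out of the
    # denominator, so a constant offset between raters is not penalised.
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


if __name__ == "__main__":
    # Made-up ratings on a 1-6 scale: one row per pair, one column per rater.
    example = [[1, 2], [3, 3], [4, 6], [2, 1], [5, 4], [6, 5]]
    print(round(icc_consistency(example), 3))
```

Because the rater term is excluded, a rater who is systematically one point more generous than the other would still yield a consistency ICC of 1, whereas the absolute-agreement form would be lower.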

The plot above shows the ratings for one example participant, with jitter on the x axis to show the density of points.

Normally, very high inter-rater reliability is necessary to show that the raters are rating something meaningful. However, getting high agreement on a 6-point scale is proving hard.

What is an acceptable ICC for ratings on a 6-point Likert scale? Is .5 really low, or is it about what one should expect?

Note: for reasons of experimental design, I can't change the rating scale.