I recently read the following statistical 'sin' here:

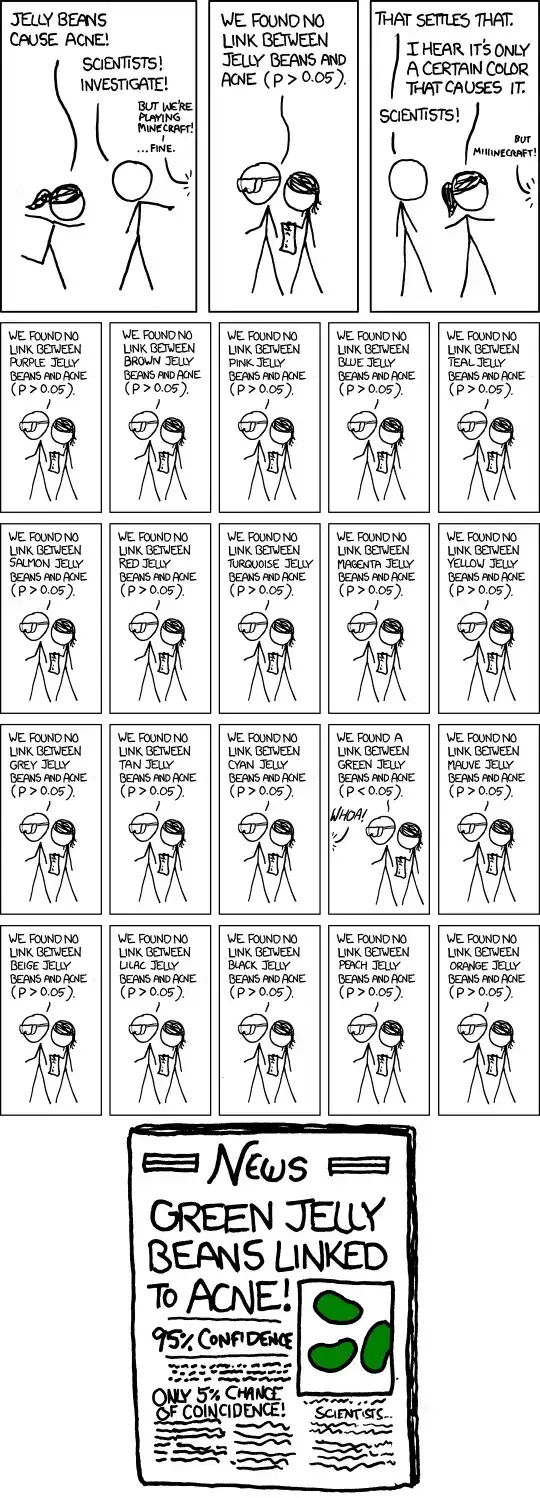

> Something I see a surprising amount in conference papers and even journals is making multiple comparisons (e.g. of bivariate correlations) and then reporting all the p<.05 results as "significant" (ignoring the rightness or wrongness of that for the moment).

Can someone explain this 'sin' to me? I have run about 40 correlation tests and all are significant!

(I presume this sin has something to do with the notion that a statistically significant finding is not necessarily a meaningful one.)
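
To make the multiple-comparisons part of the quote concrete for my situation, here is a minimal sketch of the arithmetic as I understand it. It assumes the 40 tests are independent and that every null hypothesis is true, which is almost certainly not the case for correlations computed on the same dataset, so treat the numbers as a rough illustration only. The function names are my own, and the Bonferroni adjustment is just one common correction, not necessarily the one the quoted author had in mind.

```python
# Rough illustration only: assumes independent tests and that all nulls are true.

def familywise_error_rate(num_tests, alpha=0.05):
    """Probability of at least one false positive across num_tests tests."""
    return 1.0 - (1.0 - alpha) ** num_tests


def expected_false_positives(num_tests, alpha=0.05):
    """Expected number of tests with p < alpha purely by chance."""
    return num_tests * alpha


def bonferroni_threshold(num_tests, alpha=0.05):
    """Per-test p-value cutoff that keeps the family-wise rate at or below alpha."""
    return alpha / num_tests


if __name__ == "__main__":
    m = 40  # the number of correlation tests I ran
    print("Family-wise error rate:", round(familywise_error_rate(m), 4))
    print("Expected false positives:", expected_false_positives(m))
    print("Bonferroni per-test threshold:", bonferroni_threshold(m))
```

Under those assumptions, 40 tests at the .05 level give about an 87% chance of at least one spurious "significant" result, and about two such results on average. That would not by itself account for all 40 of my tests coming out significant, which is part of why I am asking.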