I understand that neural networks (NNs) can be considered universal approximators of both functions and their derivatives, under certain assumptions (on both the network and the function to approximate). In fact, I have run a number of tests on simple, yet non-trivial, functions (e.g., polynomials), and it seems that I can indeed approximate them and their first derivatives well (an example is shown below).

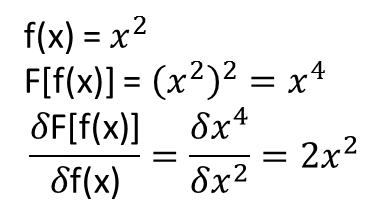

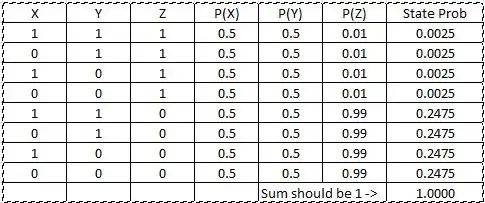

What is not clear to me, however, is whether the theorems that lead to the above extend (or perhaps could be extended) to functionals and their functional derivatives. Consider, for example, the functional:

\begin{equation} F[f(x)] = \int_a^b dx ~ f(x) g(x) \end{equation}

with the functional derivative:

\begin{equation} \frac{\delta F[f(x)]}{\delta f(x)} = g(x) \end{equation}

where $f(x)$ depends entirely, and non-trivially, on $g(x)$. Can a NN learn the above mapping and its functional derivative? More specifically, if one discretizes the domain $x$ over $[a,b]$ and provides $f(x)$ (at the discretized points) as input and $F[f(x)]$ as output, can a NN learn this mapping correctly (at least theoretically)? If so, can it also learn the mapping's functional derivative?
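To make the discretized setting concrete, here is a sketch under one assumption: a quadrature rule with nodes $x_i$ and weights $w_i$ (for example, $w_i = \Delta x$ on a uniform grid). The symbols $x_i$, $w_i$, $f_i = f(x_i)$ and $F_N$ are introduced only for illustration. The functional then becomes an ordinary function of the vector $(f_1, \dots, f_N)$:

\begin{equation} F[f(x)] \approx F_N(f_1, \dots, f_N) = \sum_{i=1}^{N} w_i ~ f_i ~ g(x_i), \qquad \frac{\partial F_N}{\partial f_i} = w_i ~ g(x_i) \end{equation}

Under this assumption, the gradient of the discretized map with respect to its inputs is the functional derivative multiplied by the quadrature weights, so that $\delta F / \delta f(x_i) \approx (1/w_i) ~ \partial F_N / \partial f_i$.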

I have run a number of tests, and it seems that a NN may indeed learn the mapping $F[f(x)]$, to some extent. However, while the accuracy of this mapping is OK, it is not great; more troubling, the computed functional derivative is complete garbage (though both of these could be related to issues with training, etc.). An example is shown below.

If a NN is not suitable for learning a functional and its functional derivative, is there another machine learning method that is?

Examples:

(1) The following is an example of approximating a function and its derivative: A NN was trained to learn the function $f(x) = x^3 + x + 0.5$ over the range $[-3, 2]$:

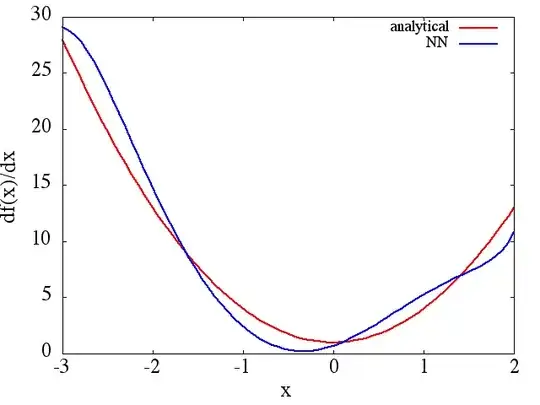

from which a reasonable approximation to $df(x)/dx$ is obtained:

Note that, as expected, the NN approximation to $f(x)$ and its first derivative improve with the number of training points, NN architecture, as better minima are found during training, etc.

Note that, as expected, the NN approximation to $f(x)$ and its first derivative improve with the number of training points, NN architecture, as better minima are found during training, etc.

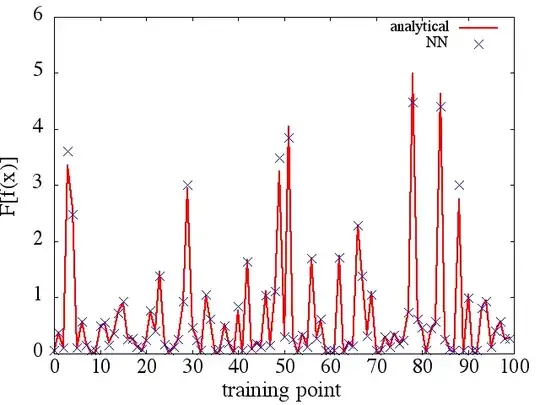
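For readers who want to reproduce the spirit of example (1), below is a minimal NumPy sketch. It is not the setup used for the plots above: to keep it short and deterministic, the hidden-layer weights are random and fixed, and only the output layer is fitted by ridge regression (a random-feature network, swapped in for a fully trained NN). The number of hidden units, the regularization `lam`, and all variable names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def target(x):
    """Function from example (1)."""
    return x**3 + x + 0.5

# Training points on [-3, 2]
x_train = np.linspace(-3.0, 2.0, 200)
y_train = target(x_train)

# Hidden layer with random, fixed weights (assumption: not trained)
n_hidden = 30
W1 = rng.normal(size=n_hidden)
b1 = rng.normal(size=n_hidden)

def hidden(x):
    """tanh activations, shape (n_points, n_hidden)."""
    return np.tanh(np.outer(x, W1) + b1)

# Output layer (weights W2 and bias b2) fitted by ridge regression
H = np.column_stack([hidden(x_train), np.ones_like(x_train)])
lam = 1e-3
coef = np.linalg.solve(H.T @ H + lam * np.eye(H.shape[1]), H.T @ y_train)
W2, b2 = coef[:-1], coef[-1]

def net(x):
    """Network output for a 1-D array of inputs."""
    return hidden(x) @ W2 + b2

def net_derivative(x):
    """Analytic d(net)/dx, using d/dx tanh(u) = (1 - tanh(u)^2) * du/dx."""
    h = hidden(x)
    return ((1.0 - h**2) * W1) @ W2

x_test = np.linspace(-3.0, 2.0, 50)
print("max error in f:    ", np.max(np.abs(net(x_test) - target(x_test))))
print("max error in df/dx:", np.max(np.abs(net_derivative(x_test) - (3 * x_test**2 + 1))))
```

The derivative here is obtained by differentiating the network itself, which is what automatic differentiation would do for a trained NN; the printed errors depend on the assumed hyperparameters.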

(2) The following is an example of approximating a functional and its functional derivative: A NN was trained to learn the functional $F[f(x)] = \int_1^2 dx ~ f(x)^2$. Training data was obtained using functions of the form $f(x) = a x^b$, where $a$ and $b$ were randomly generated. The following plot illustrates that the NN is indeed able to approximate $F[f(x)]$ quite well:

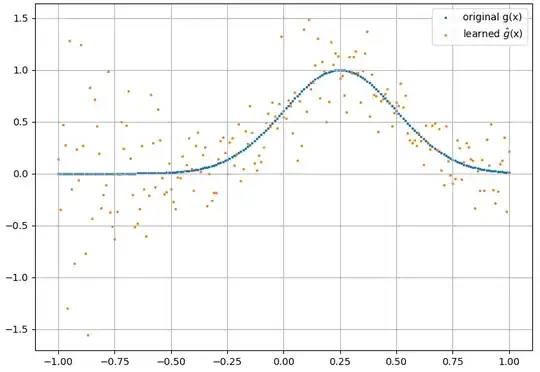

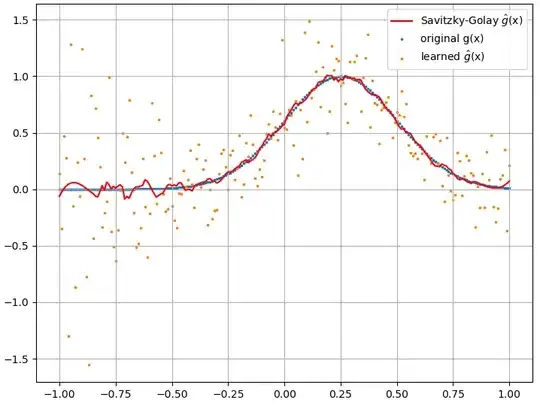

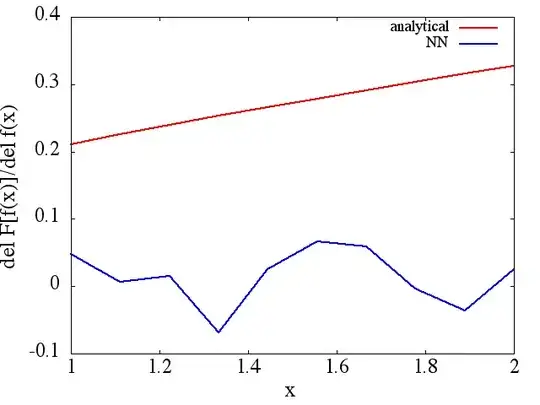

Calculated functional derivatives, however, are complete garbage; an example (for a specific $f(x)$) is shown below:

As an interesting note, the NN approximation to $F[f(x)]$ seems to improve with the number of training points, etc. (as in example (1)), yet the functional derivative does not.
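As a reference point for example (2), the sketch below generates training data of the kind described and computes the exact quantities a NN could be compared against. It assumes a uniform grid on $[1, 2]$ with trapezoid-rule weights; the grid size, the sampling ranges for $a$ and $b$, and all function names are hypothetical choices, not those used for the plots. For $F[f(x)] = \int_1^2 dx ~ f(x)^2$ the exact functional derivative is $2 f(x)$, while the gradient of the discretized map with respect to the grid values is $2 w_i f_i$, so the two differ by the quadrature weights.

```python
import numpy as np

# Uniform grid on [1, 2] with trapezoid-rule weights (assumed discretization)
n_grid = 101
x = np.linspace(1.0, 2.0, n_grid)
w = np.full(n_grid, x[1] - x[0])
w[[0, -1]] *= 0.5

def F_discrete(f):
    """Trapezoid approximation of F[f] = int_1^2 f(x)^2 dx."""
    return np.sum(w * f**2)

def grad_F_discrete(f):
    """Gradient of F_discrete with respect to the grid values f_i."""
    return 2.0 * w * f

def make_dataset(n_samples, rng):
    """Inputs f(x) = a * x**b on the grid, targets F[f]; ranges are hypothetical."""
    a = rng.uniform(0.5, 2.0, n_samples)
    b = rng.uniform(0.0, 3.0, n_samples)
    f = a[:, None] * x[None, :] ** b[:, None]
    F = np.sum(w * f**2, axis=1)
    return f, F

rng = np.random.default_rng(0)
inputs, targets = make_dataset(1000, rng)

# Check against a case with a known answer: f(x) = x^2, F = (2^5 - 1) / 5
f = x**2
print("F (trapezoid):", F_discrete(f), " F (exact):", 31.0 / 5.0)

# Dividing the discrete gradient by the weights recovers 2 f(x)
functional_derivative = grad_F_discrete(f) / w
print("max deviation from 2 f(x):", np.max(np.abs(functional_derivative - 2.0 * f)))
```

If a NN's input gradient is compared directly with $2 f(x)$, the missing factor of $w_i$ would be one thing worth ruling out before blaming the training.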