Apologies in advance if my terminology is inaccurate. I have a series of measurements, taken over a short time period, from a hardware device to which I have just applied a stimulus.

The measurements settle fairly quickly within some small range of values. What I want to do is stop measuring as soon as I've received enough data points to detect that settling. The following plots should make it easier to see what I mean.
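To make "detect the settling" concrete, here is one naive rule, sketched purely for illustration: keep a sliding window of the most recent readings and stop once every reading in the window lies within a fixed tolerance of the others. The function name `settled_index` and the `window`/`tol` parameters are hypothetical, not from any library.

```python
from collections import deque
from typing import Iterable, Optional


def settled_index(samples: Iterable[float], window: int = 10,
                  tol: float = 0.05) -> Optional[int]:
    """Return the index of the first sample at which the most recent
    `window` samples all lie within `tol` of each other (max - min <= tol),
    or None if the data never settles that tightly.

    `samples` can be any iterable, including a generator that yields
    readings as they arrive, so measuring could stop at the returned index.
    """
    recent = deque(maxlen=window)  # keeps only the last `window` readings
    for i, x in enumerate(samples):
        recent.append(x)
        if len(recent) == window and max(recent) - min(recent) <= tol:
            return i
    return None


# Example: a step response that settles around 10.
readings = [0, 5, 8, 9.5, 9.9, 10, 10.02, 9.98, 10.01, 10]
print(settled_index(readings, window=4, tol=0.1))  # -> 8
```

The weak point is the absolute `tol`: it has to be chosen per device and per signal size, and on noisy data the window's range may never drop below it.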

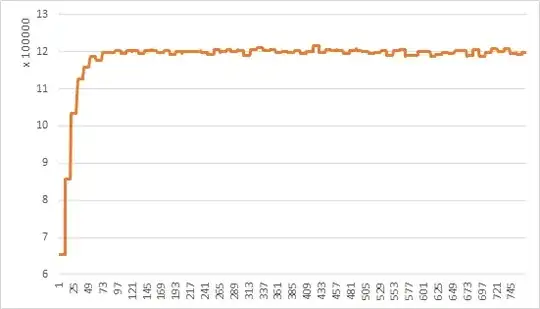

The measurements may increase or decrease from the initial point, and may be relatively free of noise, like these:

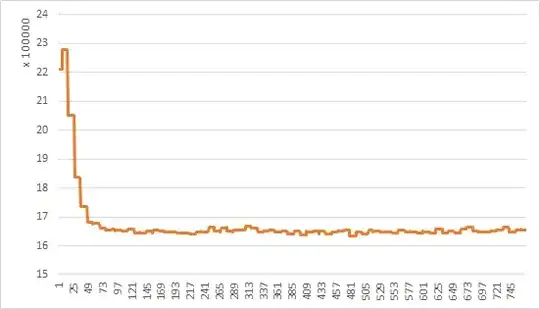

Or, they may be quite noisy, like these:

As you can see, the range of values on the y-axis may differ by an order of magnitude or so.

I don't need to have very high accuracy, but I do need to stop measuring as soon as possible because the elapsed time for taking measurements is high and I'm trying to minimise it.

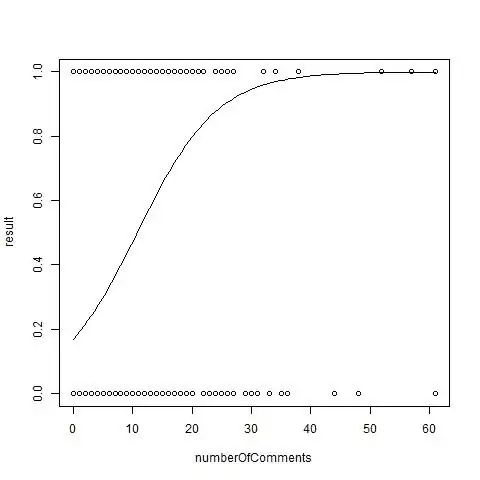
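Because the y-axis range can differ by an order of magnitude, one way to avoid retuning a fixed tolerance is to make it relative to how far the signal has moved from its first reading. The sketch below assumes that "settled" means the spread of the last `window` readings is at most `rel_tol` times that excursion; `settled_index_relative`, `window` and `rel_tol` are hypothetical names chosen for illustration.

```python
from collections import deque
from typing import Iterable, Optional


def settled_index_relative(samples: Iterable[float], window: int = 10,
                           rel_tol: float = 0.02) -> Optional[int]:
    """Return the index at which the spread (max - min) of the last `window`
    samples drops to at most `rel_tol` times the total excursion, i.e. how
    far the window's mean has moved from the first reading."""
    recent = deque(maxlen=window)
    first = None
    for i, x in enumerate(samples):
        if first is None:
            first = x  # baseline: the first reading after the stimulus
        recent.append(x)
        if len(recent) < window:
            continue
        excursion = abs(sum(recent) / window - first)  # works for rises and falls
        spread = max(recent) - min(recent)
        # A zero excursion means the signal hasn't moved; don't call that settled.
        if excursion > 0 and spread <= rel_tol * excursion:
            return i
    return None


base = [0, 5, 8, 9.5, 9.9, 10, 10.02, 9.98, 10.01, 10]
print(settled_index_relative(base, window=4, rel_tol=0.01))                    # -> 8
print(settled_index_relative([10 * x for x in base], window=4, rel_tol=0.01))  # -> 8
print(settled_index_relative([-x for x in base], window=4, rel_tol=0.01))      # -> 8
```

The same `rel_tol` gives the same stopping point whether the step is rising or falling and whatever its size, which is the property the differing y-axis ranges call for.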

So, what techniques can I use to identify the "corner" point as the data arrives? I can see that it would be easier to identify from a complete sequence like those shown above, but how can I get a good estimate of when to stop measuring while the measurements are still coming in?

I hope I've made that clear. Please comment if you need more information and I'll do my best to clarify the question. Also, please re-tag as you see fit: having no idea what to search for, I also have no idea how to tag it!