Assuming no duplicates exist in the data.

If $n\leq d+1$, the probability is $\text{Pr}=1$.

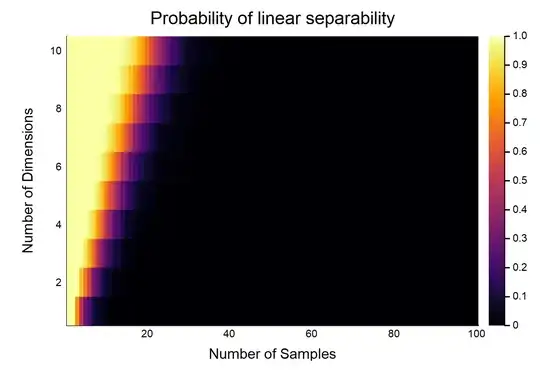

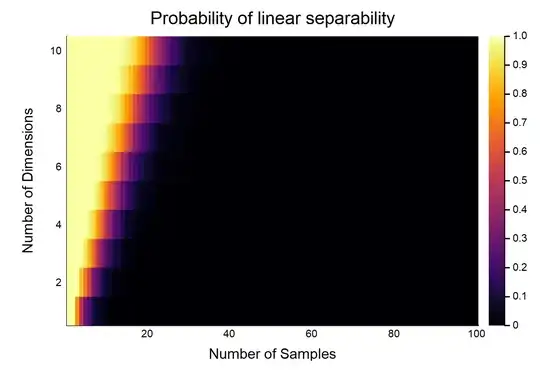

For other combinations of $(n,d)$, see the following plot:

I generated this plot simulating input and output data as specified in the OP. Linear separability was defined as failure of convergence in a logistic regression model, due to the Hauck-Donner effect.

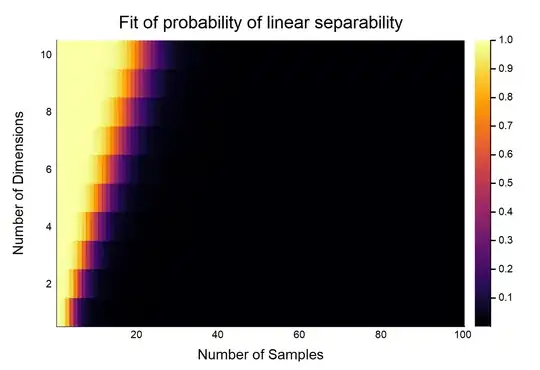

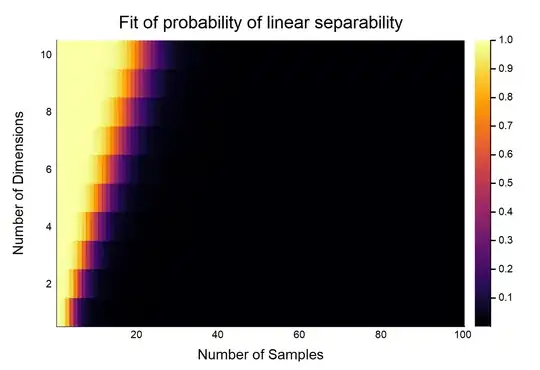

We can see the probability decreases for increasing $n$. In fact, we could fit a model relating $n, d$ to $p$, and this was the result:

$$P(n,d)={

1

\over

{1 + e^

{-(5.82944-4.58261\times n + 1.37271 \times d -0.0235785 \times n \times d)}

}

}$$

Code for the plot (in Julia):

using GLM

ds = 10; #number of dimensions to be investigated

ns = 100 #number of examples to be investigated

niter = 1000; #number of iterations per d per n

P = niter * ones(Int64, ds, ns); #starting the number of successes

for d in 1:ds

for n in (d+1):ns

p = 0 #0 hits

for i in 1:niter

println("Dimensions: $d; Samples: $n; Iteration: $i;")

try #we will try to catch errors in the logistic glm, these are due to perfect separability

X = hcat(rand((n,d)), ones(n)); #sampling from uniform plus intercept

Y = sample(0:1, n) #sampling a binary outcome

glm(X, Y, Binomial(), LogitLink())

catch

p = p+1 #if we catch an error, increase the count

end

end

P[d,n] = p

end

end

using Plots

gui(heatmap(P./niter, xlabel = "Number of Samples", ylabel = "Number of Dimensions", title = "Probability of linear separability"))

Code for the model relating $(n,d)$ to $p$ (in Julia):

probs = P./niter

N = transpose(repmat(1:ns, 1, ds))

D = repmat(1:ds, 1, ns)

fit = glm(hcat(log.(N[:]), D[:], N[:].*D[:], ones(ds*ns)), probs[:], Binomial(), LogitLink())

coef(fit)

#4-element Array{Float64,1}:

# -4.58261

# 1.37271

# -0.0235785

# 5.82944

gui(heatmap(reshape(predict(fit), ds, ns), xlabel = "Number of Samples", ylabel = "Number of Dimensions", title = "Fit of probability of linear separability"))