This question is interesting insofar as it exposes some connections among optimization theory, optimization methods, and statistical methods that any capable user of statistics needs to understand. Although these connections are simple and easily learned, they are subtle and often overlooked.

To summarize some ideas from the comments to other replies, I would like to point out there are at least two ways that "linear regression" can produce non-unique solutions--not just theoretically, but in practice.

Lack of identifiability

The first is when the model is not identifiable. This creates a convex but not strictly convex objective function which has multiple solutions.

Consider, for instance, regressing $z$ against $x$ and $y$ (with an intercept) for the $(x,y,z)$ data $(1,-1,0),(2,-2,-1),(3,-3,-2)$. One solution is $\hat z = 1 + y$. Another is $\hat z = 1-x$. To see that there must be multiple solutions, parameterize the model with three real parameters $(\lambda,\mu,\nu)$ and an error term $\varepsilon$ in the form

$$z = 1+\mu + (\lambda + \nu - 1)x + (\lambda -\nu)y + \varepsilon.$$

The sum of squares of residuals simplifies to

$$\operatorname{SSR} = 3\mu^2 + 24 \mu\nu + 56 \nu^2.$$

(This is a limiting case of objective functions that arise in practice, such as the one discussed at Can the empirical hessian of an M-estimator be indefinite?, where you can read detailed analyses and view plots of the function.)

Because the coefficients of the squares ($3$ and $56$) are positive and the determinant $3\times 56 - (24/2)^2 = 24$ is positive, this is a positive-semidefinite quadratic form in $(\mu,\nu,\lambda)$. It is minimized when $\mu=\nu=0$, but $\lambda$ can have any value whatsoever. Since the objective function $\operatorname{SSR}$ does not depend on $\lambda$, neither does its gradient (or any other derivatives). Therefore, any gradient descent algorithm--if it does not make some arbitrary changes of direction--will set the solution's value of $\lambda$ to whatever the starting value was.

Even when gradient descent is not used, the solution can vary. In R, for instance, there are two easy, equivalent ways to specify this model: as z ~ x + y or z ~ y + x. The first yields $\hat z = 1 - x$ but the second gives $\hat z = 1 + y$.

> x <- 1:3

> y <- -x

> z <- y+1

> lm(z ~ x + y)

Coefficients:

(Intercept) x y

1 -1 NA

> lm(z ~ y + x)

Coefficients:

(Intercept) y x

1 1 NA

(The NA values should be interpreted as zeros, but with a warning that multiple solutions exist. The warning was possible because of preliminary analyses performed in R that are independent of its solution method. A gradient descent method would likely not detect the possibility of multiple solutions, although a good one would warn you of some uncertainty that it had arrived at the optimum.)

Parameter constraints

Strict convexity guarantees a unique global optimum, provided the domain of the parameters is convex. Parameter restrictions can create non-convex domains, leading to multiple global solutions.

A very simple example is afforded by the problem of estimating a "mean" $\mu$ for the data $-1, 1$ subject to the restriction $|\mu| \ge 1/2$. This models a situation that is kind of the opposite of regularization methods like Ridge Regression, the Lasso, or the Elastic Net: it is insisting that a model parameter not become too small. (Various questions have appeared on this site asking how to solve regression problems with such parameter constraints, showing that they do arise in practice.)

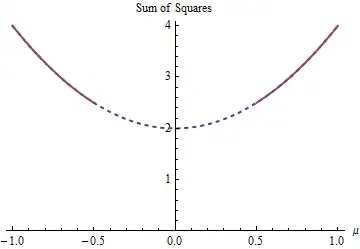

There are two least-squares solutions to this example, both equally good. They are found by minimizing $(1-\mu)^2 + (-1-\mu)^2$ subject to the constraint $|\mu| \ge 1/2$. The two solutions are $\mu=\pm 1/2$. More than one solution can arise because the parameter restriction makes the domain $\mu \in (-\infty, -1/2]\cup [1/2, \infty)$ nonconvex:

The parabola is the graph of a (strictly) convex function. The thick red part is the portion restricted to the domain of $\mu$: it has two lowest points at $\mu=\pm 1/2$, where the sum of squares is $5/2$. The rest of the parabola (shown dotted) is removed by the constraint, thereby eliminating its unique minimum from consideration.

A gradient descent method, unless it were willing to take large jumps, would likely find the "unique" solution $\mu=1/2$ when starting with a positive value and otherwise it would find the "unique" solution $\mu=-1/2$ when starting with a negative value.

The same situation can occur with larger datasets and in higher dimensions (that is, with more regression parameters to fit).