For a cost matrix

$$L= \begin{bmatrix} 0 & 0.5 \\ 1 & 0 \end{bmatrix} \begin{matrix} c_1 \\ c_2 \end{matrix} \;\text{prediction} \\ \hspace{-1.9cm} \begin{matrix} c_1 & c_2 \end{matrix} \\ \hspace{-1.9cm}\text{truth}$$

the loss of predicting class $c_1$ when the truth is class $c_2$ is $L_{12} = 0.5$, and the loss of predicting class $c_2$ when the truth is class $c_1$ is $L_{21} = 1$. There is no loss for correct predictions, $L_{11} = L_{22} = 0$. The conditional risk $R$ of predicting each class is then

$$
\begin{align}
R(c_1|x) &= L_{11} \Pr (c_1|x) + L_{12} \Pr (c_2|x) = L_{12} \Pr (c_2|x) \\
R(c_2|x) &= L_{22} \Pr (c_2|x) + L_{21} \Pr (c_1|x) = L_{21} \Pr (c_1|x)
\end{align}
$$
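As a minimal sketch of the two risk formulas above, the snippet below computes the conditional risk of each prediction as a row of the loss matrix times the posterior vector. The function names and the posterior values `[0.4, 0.6]` are made up for illustration and are not part of the question.

```python
# Loss matrix from above: rows index the prediction, columns index the truth.
LOSS = [[0.0, 0.5],
        [1.0, 0.0]]


def conditional_risks(posterior, loss=LOSS):
    """Return [R(c_1|x), R(c_2|x)] for posterior = [Pr(c_1|x), Pr(c_2|x)]."""
    return [sum(l_ij * p_j for l_ij, p_j in zip(row, posterior)) for row in loss]


def bayes_decision(posterior, loss=LOSS):
    """Return the 1-based index of the class with the smallest conditional risk."""
    risks = conditional_risks(posterior, loss)
    return min(range(len(risks)), key=risks.__getitem__) + 1


# Hypothetical posterior, chosen only to illustrate the asymmetric losses.
posterior = [0.4, 0.6]
print(conditional_risks(posterior))  # R(c_1|x) = 0.5 * 0.6, R(c_2|x) = 1 * 0.4
print(bayes_decision(posterior))
```

With these illustrative numbers the rule picks $c_1$ even though $c_2$ has the larger posterior, because wrongly predicting $c_2$ is twice as costly.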

For a reference see these notes on page 15.

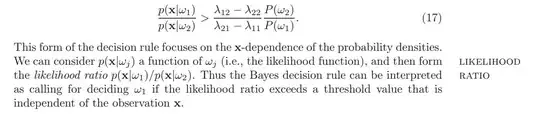

To minimize the risk, you predict $c_1$ if the expected cost of doing so (the loss of the wrong prediction times the posterior probability that the prediction is wrong, $L_{12} \Pr (c_2|x)$) is smaller than the expected cost of wrongly predicting the alternative,

$$
\begin{align}
L_{12} \Pr (c_2|x) &< L_{21} \Pr (c_1|x) \\
L_{12} \Pr (x|c_2) \Pr (c_2) &< L_{21} \Pr (x|c_1) \Pr (c_1) \\
\frac{L_{12} \Pr (c_2)}{L_{21} \Pr (c_1)} &< \frac{\Pr (x|c_1)}{ \Pr (x|c_2)}
\end{align}
$$

where the second line uses Bayes' rule $\Pr (c_2|x) \propto \Pr (x|c_2) \Pr (c_2)$. Given equal prior probabilities $\Pr (c_1) = \Pr (c_2) = 0.5$ you get

$$\frac{1}{2} < \frac{\Pr (x|c_1)}{ \Pr (x|c_2)}$$

so you classify an observation as $c_1$ if the likelihood ratio exceeds this threshold. Now it is not clear to me whether you wanted to know the "best threshold" in terms of the likelihood ratio or in terms of the attribute $x$. The answer changes according to the cost function. Plugging the Gaussian densities into the inequality with $\sigma_1 = \sigma_2 = \sigma$ and $\mu_1 = 0$, $\mu_2 = 1$,

$$
\begin{align}
\frac{1}{2} &< \frac{\frac{1}{\sqrt{2\pi}\sigma}\exp \left[ -\frac{1}{2\sigma^2}(x-\mu_1)^2 \right]}{\frac{1}{\sqrt{2\pi}\sigma}\exp \left[ -\frac{1}{2\sigma^2}(x-\mu_2)^2 \right]} \\
\log \left(\frac{1}{2}\right) &< \log \left(\frac{1}{\sqrt{2\pi}\sigma}\right) -\frac{1}{2\sigma^2}(x-0)^2 - \left[ \log \left(\frac{1}{\sqrt{2\pi}\sigma}\right) -\frac{1}{2\sigma^2}(x-1)^2 \right] \\
\log \left(\frac{1}{2}\right) &< -\frac{x^2}{2\sigma^2} + \frac{x^2}{2\sigma^2} - \frac{2x}{2\sigma^2} + \frac{1}{2\sigma^2} \\
\frac{x}{\sigma^2} &< \frac{1}{2\sigma^2} - \log \left(\frac{1}{2}\right) \\
x &< \frac{1}{2} - \log \left(\frac{1}{2}\right) \sigma^2
\end{align}
$$

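so with these losses the threshold in terms of $x$ depends on the variance: you predict $c_1$ if $x < \frac{1}{2} + \sigma^2 \log 2$. Repeating the same steps with general losses and equal priors gives $x < \frac{1}{2} - \sigma^2 \log \left( \frac{L_{12}}{L_{21}} \right)$. A threshold of $x_0 = \frac{1}{2}$, as you search for, can therefore only be achieved if the losses from false predictions are the same, i.e. $L_{12} = L_{21}$, because only then do you have $\log \left( \frac{L_{12}}{L_{21}} \right) = \log (1) = 0$, and the rule becomes $x < \frac{1}{2}$.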
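A quick numerical check of the derivation, as a sketch under the assumptions used above (equal variances, $\mu_1 = 0$, $\mu_2 = 1$, equal priors by default). The function names and the choice $\sigma = 1$ are illustrative only.

```python
import math


def gaussian_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)


def predict_by_likelihood_ratio(x, sigma, loss_12=0.5, loss_21=1.0,
                                prior_1=0.5, prior_2=0.5, mu_1=0.0, mu_2=1.0):
    """Predict class 1 if Pr(x|c_1) / Pr(x|c_2) exceeds the cost-weighted threshold."""
    threshold = (loss_12 * prior_2) / (loss_21 * prior_1)
    ratio = gaussian_pdf(x, mu_1, sigma) / gaussian_pdf(x, mu_2, sigma)
    return 1 if ratio > threshold else 2


def x_threshold(sigma, loss_12=0.5, loss_21=1.0):
    """Closed-form boundary for mu_1 = 0, mu_2 = 1 and equal priors."""
    return 0.5 - sigma ** 2 * math.log(loss_12 / loss_21)


sigma = 1.0  # illustrative value
x0 = x_threshold(sigma)
# Just below the boundary the rule should pick c_1, just above it c_2.
for x in (x0 - 0.01, x0 + 0.01):
    print(x, predict_by_likelihood_ratio(x, sigma))

# With equal losses the boundary is the midpoint, whatever the variance.
print(x_threshold(sigma=2.0, loss_12=1.0, loss_21=1.0))
```

The likelihood-ratio rule and the closed-form threshold in $x$ should agree on both sides of the boundary, and with $L_{12} = L_{21}$ the boundary no longer depends on $\sigma$.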