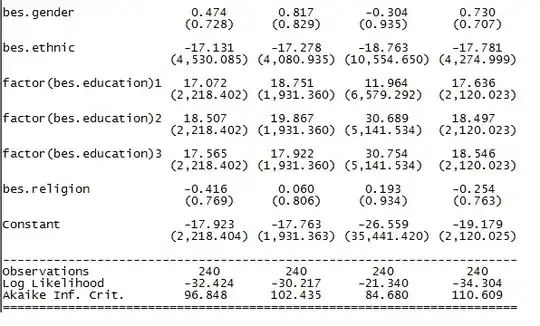

Below you will see a screen grab off the tail of my results, where you get to my control variables. In short, I ran 4 competing models to explain a phenomenon.

The Dataset was originally very large - however, in order to make the AIC and BIC comparable to one another, I decided to put them all into a data frame using the Complete.cases function. There The result is that the sample size is vastly reduced to 240 and many coefficients and Standard Errors are large.

Actually, that's an understatement - the standard errors are very large indeed. Sorry to ask a simple question but do I have problems here?

Henry

Combinedframe <- data.frame(bes$influence,bes$groupbenefits,bes$satisfaction,bes$guilty,bes$civicduty,bes$democracy,bes$personalbenefits,bes$toobusy,bes$age,bes$gender,bes$ethnic,bes$religion,bes$education,bes$labourcrime,bes$laboureducation,bes$labourimmigration,bes$labournhs,bes$labourterrorism,bes$labourecon,bes$Govfair,bes$minor,bes$demosat,bes$persretro,bes$natretro,bes$perspro,bes$natpro,bes$interest,bes$attention,bes$newspaper,bes$contacted,bes$asked,bes$know1,bes$know2,bes$know3,bes$know4,bes$know5,bes$know6,bes$know7,bes$know8,bes$vote,bes$realchoice,bes$goodcand,bes$sayvsdo,bes$brown,bes$cameron,bes$clegg,bes$browncomp,bes$cameroncomp,bes$cleggcomp,bes$attachment,bes$fairelections,bes$trustwestminster,bes$trustparties)

Combined <- Combinedframe[complete.cases(Combinedframe),]

View(Combined)

#################COMBINED DATA FRAME MODELS####################

Model1b <- glm(bes.vote~ bes.influence+bes.groupbenefits+bes.satisfaction+bes.guilty+bes.civicduty+bes.democracy+bes.personalbenefits+bes.toobusy+bes.age+bes.gender+bes.ethnic+factor(bes.education)+bes.religion, data=Combined, family=binomial)

summary(Model1b)

Model2b <- glm(bes.vote~ bes.labourcrime+bes.laboureducation+bes.labourimmigration+bes.labournhs+bes.labourterrorism+bes.labourecon+bes.Govfair+bes.minor+bes.demosat+bes.persretro+bes.natretro+bes.perspro+bes.natpro+bes.gender+bes.age+bes.ethnic+factor(bes.education)+bes.religion, data= Combined, family=binomial)

summary(Model2b)

Model3b <- glm(bes.vote~ bes.interest+bes.attention+bes.newspaper+bes.contacted+bes.asked+bes.know1+bes.know2+bes.know3+bes.know4+bes.know5+bes.know6+bes.know7+bes.know8+bes.age+bes.gender+bes.ethnic+factor(bes.education)+bes.religion, data= Combined, family=binomial)

summary(Model3b)