I have a dependent variable that can range from 0 to infinity, with the 0s being genuine observations. I understand that censoring and Tobit models only apply when the actual value of $Y$ is partially unknown, in which case the data are said to be censored (truncation is the related case where such observations are missing from the sample altogether). Some more information on censored data in this thread.

But here 0 is a true value that belongs to the population. Running OLS on this data has the particularly annoying problem of producing negative predictions. How should I model $Y$?

    > summary(data$Y)
       Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
       0.00    0.00    0.00    7.66    5.20  193.00
    > summary(predict(m))
       Min. 1st Qu.  Median    Mean 3rd Qu.    Max.
      -4.46    2.01    4.10    7.66    7.82  240.00
    > sum(predict(m) < 0) / length(data$Y)
    [1] 0.0972098

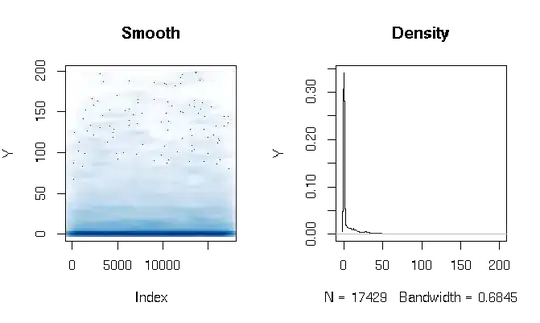

Developments

After reading the answers, I'm reporting the fit of a Gamma hurdle model using slightly different prediction functions. The results are quite surprising to me. First, let's look at the DV. What is apparent is how extremely fat-tailed the data are. This has some interesting consequences for evaluating the fit, which I comment on below:

    quantile(d$Y, probs=seq(0, 1, 0.1))
             0%        10%        20%        30%        40%        50%        60%        70%        80%        90%       100%
       0.000000   0.000000   0.000000   0.000000   0.000000   0.000000   0.286533   3.566165  11.764706  27.286630 198.184818

I built the Gamma hurdle model as follows:

    d$zero_one = (d$Y > 0)
    logit = glm(zero_one ~ X1*log(X2) + X1*X3, data=d, family=binomial(link = logit))
    gamma = glm(Y ~ X1*log(X2) + X1*X3, data=subset(d, Y>0), family=Gamma(link = log))
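For reference, a sketch of how the two parts are usually combined into a prediction of $Y$, assuming the logit and Gamma parts are fitted separately as above. Here $x$ stands for the covariates, and $\pi(x)$, $\mu(x)$, $\beta$ and $\gamma$ are my own notation rather than anything returned by the code:

$$
E[Y \mid x] \;=\; \Pr(Y > 0 \mid x)\, E[Y \mid Y > 0, x] \;=\; \pi(x)\,\mu(x),
\qquad
\pi(x) = \frac{1}{1 + e^{-x^\top \beta}},
\quad
\mu(x) = e^{x^\top \gamma}
$$

The zero outcomes contribute nothing to the expectation, which is why the product of the logit probability and the Gamma mean is the estimate of the unconditional mean.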

Finally I evaluated the in-sample fit using three different techniques:

    # logit probability * gamma estimate
    predict1 = function(m_logit, m_gamma, data)
    {
      prob = predict(m_logit, newdata=data, type="response")
      Yhat = predict(m_gamma, newdata=data, type="response")
      return(prob*Yhat)
    }

    # if logit probability < 0.5 then 0, else logit prob * gamma estimate
    predict2 = function(m_logit, m_gamma, data)
    {
      prob = predict(m_logit, newdata=data, type="response")
      Yhat = predict(m_gamma, newdata=data, type="response")
      return(ifelse(prob<0.5, 0, prob)*Yhat)
    }

    # if logit probability < 0.5 then 0, else gamma estimate
    predict3 = function(m_logit, m_gamma, data)
    {
      prob = predict(m_logit, newdata=data, type="response")
      Yhat = predict(m_gamma, newdata=data, type="response")
      return(ifelse(prob<0.5, 0, Yhat))
    }

At first I evaluated the fit by the usual measures: AIC, null deviance, mean absolute error, etc. But looking at the quantiles of the absolute errors of the above functions highlights some issues related to the high probability of a 0 outcome and the extremely fat tail of $Y$. Of course, the error grows sharply with higher values of $Y$ (there is also a very large $Y$ value at the maximum), but what is more interesting is that relying heavily on the logit model to estimate the 0s produces a better fit to the distribution (I wouldn't know how to better describe this phenomenon):

    quantile(abs(d$Y - predict1(logit, gamma, d)), probs=seq(0, 1, 0.1))
                0%           10%           20%           30%           40%           50%           60%           70%           80%           90%          100%
        0.00320459    1.45525439    2.15327192    2.72230527    3.28279766    4.07428682    5.36259988    7.82389110   12.46936416   22.90710769 1015.46203281

    quantile(abs(d$Y - predict2(logit, gamma, d)), probs=seq(0, 1, 0.1))
              0%         10%         20%         30%         40%         50%         60%         70%         80%         90%        100%
        0.000000    0.000000    0.000000    0.000000    0.000000    0.309598    3.903533    8.195128   13.260107   24.691358 1015.462033

    quantile(abs(d$Y - predict3(logit, gamma, d)), probs=seq(0, 1, 0.1))
              0%         10%         20%         30%         40%         50%         60%         70%         80%         90%        100%
        0.000000    0.000000    0.000000    0.000000    0.000000    0.307692    3.557285    9.039548   16.036379   28.863912 1169.321773
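To check whether this pattern is specific to my data, here is a minimal sketch on simulated data. Everything in it is an assumption made for illustration: the single covariate `x`, the coefficients, the Gamma shape, and the names `sim`, `pred_mean` and `pred_cut` are hypothetical and unrelated to my real `d`. It fits the same two-part model and compares the `predict1` rule with the `predict3` rule under both absolute and squared error.

```r
# Simulated stand-in for the real data; every name below is hypothetical.
set.seed(1)
n <- 5000
x <- rnorm(n)
p_pos  <- plogis(-0.5 + x)        # assumed true P(Y > 0 | x)
mu_pos <- exp(1 + 0.5 * x)        # assumed true E[Y | Y > 0, x]
y <- ifelse(rbinom(n, 1, p_pos) == 1,
            rgamma(n, shape = 0.5, rate = 0.5 / mu_pos),  # mean = mu_pos
            0)
sim <- data.frame(y = y, x = x, pos = y > 0)

# Same two-part structure as the hurdle model above
m_logit <- glm(pos ~ x, data = sim, family = binomial(link = "logit"))
m_gamma <- glm(y ~ x, data = subset(sim, y > 0), family = Gamma(link = "log"))

prob <- predict(m_logit, newdata = sim, type = "response")
yhat <- predict(m_gamma, newdata = sim, type = "response")

pred_mean <- prob * yhat                  # rule used by predict1
pred_cut  <- ifelse(prob < 0.5, 0, yhat)  # rule used by predict3

# Compare the two rules under absolute and squared error
err <- function(pred) c(median_abs = median(abs(sim$y - pred)),
                        mean_abs   = mean(abs(sim$y - pred)),
                        rmse       = sqrt(mean((sim$y - pred)^2)))
errors <- rbind(mean_rule = err(pred_mean), cut_rule = err(pred_cut))
print(round(errors, 3))
```

The reason for reporting both kinds of error is that they reward different targets: squared error is minimized by the conditional mean, while absolute error is minimized by the conditional median. When more than half of the observations are 0, the median is 0, so a rule that predicts exact zeros can look better on the lower quantiles of the absolute error even if it is a worse estimate of the mean.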